Tencent has released the technical report for Hunyuan TurboS, its flagship large language model, detailing the model's core architectural innovations and capabilities.

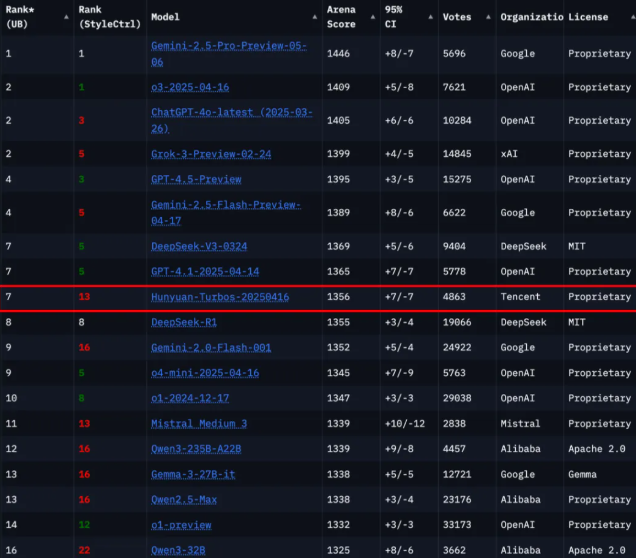

According to the latest rankings on Chatbot Arena, a widely followed crowdsourced platform for evaluating large language models, Hunyuan TurboS ranks seventh among 239 participating models. That places it second only to DeepSeek among Chinese models, and internationally it trails only models from Google, OpenAI, and xAI.

Hunyuan TurboS adopts a hybrid Transformer-Mamba architecture. The design pairs Mamba's efficiency on long sequences with the Transformer's strength in contextual understanding, aiming to balance performance and efficiency. The model has 128 layers and 56 billion activated parameters, and Tencent describes it as the industry's first large-scale deployed Transformer-Mamba mixture-of-experts (MoE) model. Built on this architecture, TurboS reached an overall score of 1356 on Chatbot Arena.
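To illustrate why mixing in Mamba layers can help with long sequences, the snippet below compares how the core operation of each layer type scales with sequence length n. It is a simplified sketch, not TurboS's actual cost model: it counts abstract units and ignores hidden size, number of heads, state dimension, and constant factors. Full self-attention scores every pair of tokens (about n² entries), while a scan-based state-space layer such as Mamba updates a fixed-size state once per token (about n steps).

```python
def attention_pairwise_ops(seq_len: int) -> int:
    # Full self-attention compares every token with every other token: n * n score entries.
    return seq_len * seq_len


def ssm_state_updates(seq_len: int) -> int:
    # A scan-based state-space layer updates its fixed-size state once per token: n steps.
    return seq_len


for n in (1_000, 10_000, 100_000):
    attn = attention_pairwise_ops(n)
    ssm = ssm_state_updates(n)
    # The gap between the two grows linearly with n.
    print(f"n={n:>7,}: attention {attn:,} vs SSM {ssm:,} (ratio {attn // ssm:,})")
```

The ratio equals n itself, so a tenfold longer input widens the gap tenfold; this is the general intuition behind hybrid designs, which keep some attention layers for full-context modeling while handling most of the sequence mixing with linear-time layers.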

To further improve its capabilities, Hunyuan TurboS introduces an adaptive chain-of-thought (CoT) mechanism that switches response modes according to question complexity: it answers simple questions quickly and reasons at length on complex ones to produce highly accurate answers. The team also designed a post-training pipeline of four key modules, including supervised fine-tuning and adaptive short/long CoT fusion, which further improved the model's performance.
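The routing idea can be sketched as a function that scores a question and picks a short or long reasoning mode. This is a hypothetical illustration only: the article attributes TurboS's behavior to post-training (including adaptive short/long CoT fusion), whereas the hand-written heuristic below, including `REASONING_CUES`, `complexity_score`, `choose_mode`, and the 0.3 threshold, is an invented stand-in and not Tencent's method.

```python
from dataclasses import dataclass

# Hypothetical cue phrases suggesting multi-step reasoning (matched as substrings).
REASONING_CUES = ("prove", "derive", "step by step", "why", "optimize", "calculate")


@dataclass
class RoutingDecision:
    mode: str     # "short" (direct answer) or "long" (extended chain of thought)
    score: float  # heuristic complexity score in [0, 1]


def complexity_score(question: str) -> float:
    """Toy estimate that mixes question length and the number of cue phrases found."""
    text = question.lower()
    length_part = min(len(text.split()) / 50, 1.0)       # saturates at 50 words
    cue_hits = sum(cue in text for cue in REASONING_CUES)
    cue_part = min(cue_hits / 2, 1.0)                     # saturates at 2 cues
    return 0.5 * length_part + 0.5 * cue_part


def choose_mode(question: str, threshold: float = 0.3) -> RoutingDecision:
    """Route to long-form reasoning when the toy score reaches the threshold."""
    score = complexity_score(question)
    mode = "long" if score >= threshold else "short"
    return RoutingDecision(mode=mode, score=score)


print(choose_mode("What is the capital of France?").mode)
print(choose_mode("Prove that the square root of 2 is irrational, step by step.").mode)
```

A learned model can pick up far subtler signals than keyword matching; the sketch only shows the control flow of "estimate complexity, then choose a response mode".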

In pre-training, Hunyuan TurboS was trained on a corpus of 16 trillion tokens curated for quality and diversity. Its core architecture combines Transformer (attention), Mamba2, and feed-forward network (FFN) components, arranged in a layered structure designed to improve training and inference efficiency.
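The sketch below shows one way a 128-layer stack could interleave the three component types the report names. The block patterns, the `attention_every` parameter, and the resulting layer ratios are all hypothetical; TurboS's actual layer ordering is not reproduced here.

```python
from collections import Counter

# Hypothetical block patterns built from the three component types named in the report.
HYBRID_BLOCK = ("attention", "mamba2", "ffn")  # block that includes an attention layer
MAMBA_BLOCK = ("mamba2", "ffn")                # attention-free block


def build_schedule(total_layers: int, attention_every: int = 4) -> list[str]:
    """Stack blocks until total_layers is reached; every attention_every-th block has attention."""
    layers: list[str] = []
    block_index = 0
    while len(layers) < total_layers:
        block = HYBRID_BLOCK if block_index % attention_every == 0 else MAMBA_BLOCK
        layers.extend(block)
        block_index += 1
    # Truncate to exactly total_layers entries (the final block may be cut short).
    return layers[:total_layers]


schedule = build_schedule(128)
print(len(schedule), Counter(schedule))
```

Placing attention in only a fraction of the blocks keeps most layers linear in sequence length while retaining some full-context attention; the 1-in-4 ratio used here is arbitrary.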

The release of this technical report not only demonstrates Tencent's technological strength in the field of large language models but also provides new ideas and directions for future development in this area.

Paper link: https://arxiv.org/abs/2505.15431

Key points:

🌟 Hunyuan TurboS ranked seventh on Chatbot Arena, placing it among the most competitive models.

💡 The hybrid Transformer-Mamba architecture balances performance and efficiency.

🔍 The adaptive chain-of-thought mechanism improves the model's ability to handle problems of varying complexity.