DeepSeek has recently released a significant update to its high-performance reasoning model, DeepSeek-R1, substantially improving its performance on code generation and complex reasoning tasks and attracting wide attention from the artificial intelligence community. Based on publicly available information and the latest developments, this article analyzes the key highlights of the update.

Update to R1 Model: Significant Improvement in Code Generation Capability

The latest update to DeepSeek-R1 brings notable gains in code generation. Early tests indicate that the new version handles complex coding tasks with higher accuracy and stability than earlier releases, a marked step forward. It is rumored that the update was trained on top of the latest version of DeepSeek-V3 (V3-0324), which would further strengthen R1’s competitive position in programming against top-tier reasoning models such as OpenAI o1.

Open-source Strategy and Performance on Par with OpenAI o1

Since its release on January 20, 2025, DeepSeek-R1 has attracted significant attention for its open-source availability and strong performance. By applying large-scale reinforcement learning (RL) during post-training, with only a small amount of labeled data, R1 achieves performance comparable to the official release of OpenAI o1 on mathematics, code generation, and natural-language reasoning tasks. R1 is fully open-source under the MIT License, so developers can use model distillation to train smaller models for diverse applications. This open strategy significantly lowers the barrier to adoption and encourages the spread of AI technology and further innovation.

Community Influence: Uncensored Variant and Industry Response

DeepSeek-R1’s flexibility and community influence are also noteworthy. Perplexity AI recently released R1 1776, an open-source variant of R1 that was post-trained to remove approximately 1,000 "backdoors" (built-in censorship behaviors), with the aim of giving fairer and more accurate answers on sensitive topics. This further highlights R1’s openness and its potential for collaborative development.

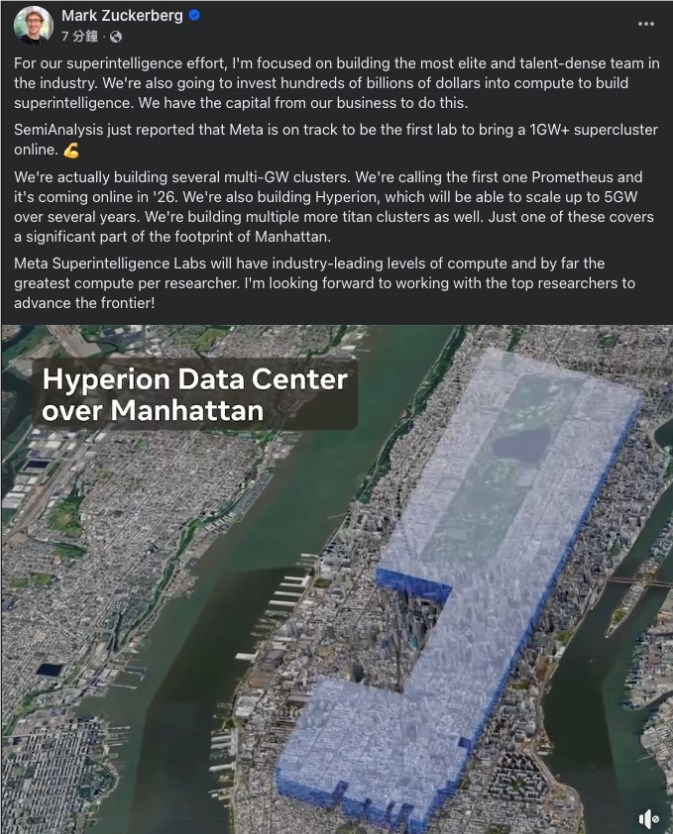

In addition, R1’s strong performance has had a profound impact on the industry. Its performance and open-source strategy have reportedly drawn close attention from companies such as Meta, which is said to have set up a dedicated research team to analyze how R1 works in order to improve its Llama models. OpenAI has also acknowledged R1 as an independently developed o1-level reasoning model, a recognition that underscores DeepSeek’s technical strength on the global stage.

Technical Highlights: Pure Reinforcement Learning and Cost Efficiency

DeepSeek-R1’s success stems from its innovative training method. Its precursor, DeepSeek-R1-Zero, skips the conventional supervised fine-tuning (SFT) stage entirely and applies pure reinforcement learning (RL) directly to DeepSeek-V3-Base; R1 itself adds only a small "cold-start" dataset of long chain-of-thought examples before the large-scale RL stage. This approach greatly reduces data-labeling costs, and during training the model learns to reflect on and re-evaluate its own reasoning steps.
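The R1 technical report names its RL algorithm Group Relative Policy Optimization (GRPO) and scores outputs with rule-based rewards (an accuracy reward and a format reward) rather than a learned reward model, which is a large part of the labeling savings. The minimal Python sketch below assumes a `<think>...</think><answer>...</answer>` output template and an exact-match answer check. It shows how each sampled completion in a group might be scored, and how GRPO turns those scores into advantages by normalizing against the group's own mean and standard deviation. The function names, the equal reward weights, and the use of population standard deviation are illustrative assumptions. The full GRPO objective also includes a clipped policy ratio and a KL penalty, which are not shown.

```python
import re
import statistics

# Assumed output template: reasoning in <think> tags, final answer in <answer> tags.
FORMAT_RE = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL)


def rule_based_reward(completion: str, reference: str) -> float:
    """Format reward plus accuracy reward; equal 1.0 weights are an assumption."""
    match = FORMAT_RE.match(completion.strip())
    if match is None:
        return 0.0  # wrong format earns nothing, so the answer is not checked
    format_reward = 1.0
    accuracy_reward = 1.0 if match.group(1).strip() == reference.strip() else 0.0
    return format_reward + accuracy_reward


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style advantage: normalize each reward by its group's mean and std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # eps avoids division by zero when all rewards tie
    return [(r - mean) / (std + eps) for r in rewards]


# One group of sampled completions for the same prompt ("What is 2 + 2?").
completions = [
    "<think>2 + 2 equals 4.</think> <answer>4</answer>",
    "<think>Maybe 5?</think> <answer>5</answer>",
    "The answer is 4",  # missing the required tags
    "<think>Add the numbers.</think><answer>4</answer>",
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_relative_advantages(rewards)
print(rewards)                              # [2.0, 1.0, 0.0, 2.0]
print([round(a, 3) for a in advantages])    # [0.905, -0.302, -1.508, 0.905]
```

Because the baseline is the group's own average, no separate value network is needed: completions that beat their siblings get positive advantages and are reinforced, while the rest are pushed down.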

R1’s training costs are also highly competitive. The widely cited figure of approximately $5.6 million comes from the DeepSeek-V3 technical report and covers the final training run of the 671-billion-parameter mixture-of-experts (MoE) base model on which R1 is built; even so, it represents a substantial reduction compared with conventional large models. On NVIDIA GeForce RTX 50 series GPUs, R1’s distilled variants can be deployed locally with low latency and strong privacy protection, making them suitable for research and enterprise scenarios. NVIDIA also recently announced a fourfold increase in R1’s inference speed, further setting a new benchmark for reasoning AI.
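The headline cost figure can be traced to the DeepSeek-V3 technical report, which prices 2.788 million H800 GPU hours (pre-training, context extension, and post-training) at an assumed rental rate of $2 per GPU hour, and which notes that the total excludes earlier research and ablation experiments. The short Python calculation below reproduces that arithmetic; the dictionary keys and the `training_cost` helper are illustrative names, not part of any DeepSeek tooling.

```python
# H800 GPU-hour figures as reported in the DeepSeek-V3 technical report.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
RENTAL_PRICE_PER_GPU_HOUR = 2.00  # USD; the rental rate assumed in the report


def training_cost(gpu_hours: dict[str, int], price_per_hour: float) -> float:
    """Total GPU hours across all stages multiplied by the hourly rental price."""
    return sum(gpu_hours.values()) * price_per_hour


total_hours = sum(GPU_HOURS.values())
cost = training_cost(GPU_HOURS, RENTAL_PRICE_PER_GPU_HOUR)
print(f"{total_hours:,} GPU hours -> ${cost:,.0f}")  # 2,788,000 GPU hours -> $5,576,000
```

The result is a rental-equivalent estimate for one training run, not the total cost of hardware, staff, or research.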

Industry Competition and Future Prospects

With this update, DeepSeek-R1 matches OpenAI o1 in technical performance while retaining a clear cost advantage. Its API is priced at ¥1 (cache hit) to ¥4 (cache miss) per million input tokens and ¥16 per million output tokens (about $0.14 to $0.55 and $2.19 respectively in US dollars), far below OpenAI o1’s $15 per million input tokens and $60 per million output tokens, which makes it highly cost-effective.
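To make the price gap concrete, the sketch below compares the bill for one hypothetical workload. It assumes DeepSeek's USD list prices for R1 at launch ($0.55 per million input tokens on a cache miss, $2.19 per million output tokens; these correspond to the ¥4 and ¥16 RMB prices) and OpenAI's o1 list prices ($15 and $60). The `Pricing` class and the workload size are illustrative assumptions, and current prices should be checked on each provider's pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical helper: list prices in USD per million tokens."""
    input_per_m: float
    output_per_m: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """USD cost of a workload; reasoning tokens are billed as output tokens."""
        return (input_tokens * self.input_per_m + output_tokens * self.output_per_m) / 1_000_000


# Launch-time list prices (cache-miss input price for DeepSeek-R1).
DEEPSEEK_R1 = Pricing(input_per_m=0.55, output_per_m=2.19)
OPENAI_O1 = Pricing(input_per_m=15.00, output_per_m=60.00)

# Hypothetical workload: 10M input tokens and 2M output tokens.
r1_cost = DEEPSEEK_R1.cost(10_000_000, 2_000_000)
o1_cost = OPENAI_O1.cost(10_000_000, 2_000_000)
print(f"R1: ${r1_cost:.2f}  o1: ${o1_cost:.2f}  ratio: {o1_cost / r1_cost:.1f}x")
# R1: $9.88  o1: $270.00  ratio: 27.3x
```

Cache hits on repeated prompt prefixes would lower the R1 figure further, since DeepSeek bills cached input at the lower rate.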

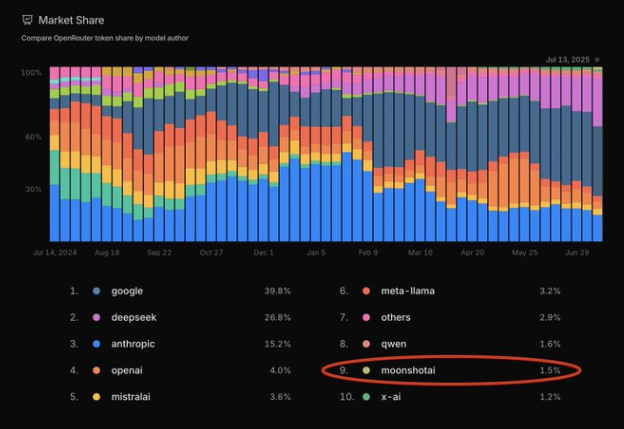

Competition among Chinese AI companies is intensifying. Alibaba recently released the QwQ-32B reasoning model, claiming performance comparable to R1 along with the ability to keep reasoning while using tools. This shows that competition among Chinese reasoning models has reached a fever pitch, and DeepSeek-R1’s leading position will face more challenges.

Conclusion

DeepSeek-R1’s latest update further solidifies its leading position in AI reasoning worldwide. Through reinforcement learning, an open-source strategy, and cost advantages, R1 excels at code generation, mathematical reasoning, and natural language processing, while promoting the democratization of AI and community collaboration. As DeepSeek continues to optimize the model and expand its applications, R1 is expected to play a larger role in scientific research, education, and the AI-driven upgrading of enterprises.