The Qwen VLo large multimodal model has been officially launched. The model makes significant progress in both image understanding and image generation, offering users a new visual creation experience.

According to the announcement, Qwen VLo is a comprehensive upgrade that builds on the strengths of the original Qwen-VL series. The model can not only accurately "understand" the world but also perform high-quality re-creation based on that understanding, moving from perception to generation. Users can try the new model directly on the Qwen Chat (chat.qwen.ai) platform.

What sets Qwen VLo apart is its progressive generation method. When generating an image, the model builds it step by step, from left to right and top to bottom, and keeps refining and adjusting the content it has already predicted so that the final result is harmonious and consistent. This mechanism not only improves visual quality but also gives users a more flexible and controllable creative process.
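The left-to-right, top-to-bottom order described above can be illustrated with a minimal sketch. This is not Qwen VLo's actual implementation: the patch grid, the function names `raster_order` and `generate_progressively`, and the `predict_patch` callback are all hypothetical stand-ins, and the sketch does not model the refinement of earlier predictions.

```python
from typing import Any, Callable, Iterator, List, Tuple

Canvas = List[List[Any]]


def raster_order(rows: int, cols: int) -> Iterator[Tuple[int, int]]:
    """Yield (row, col) positions top to bottom, left to right within each row."""
    for r in range(rows):
        for c in range(cols):
            yield (r, c)


def generate_progressively(
    rows: int,
    cols: int,
    predict_patch: Callable[[Canvas, int, int], Any],
) -> Canvas:
    """Fill a rows x cols grid one patch at a time in raster order.

    predict_patch is a hypothetical stand-in for a model call: it sees the
    partially filled canvas and returns the content for position (r, c).
    """
    canvas: Canvas = [[None] * cols for _ in range(rows)]
    for r, c in raster_order(rows, cols):
        canvas[r][c] = predict_patch(canvas, r, c)
    return canvas


def count_filled(canvas: Canvas, r: int, c: int) -> int:
    """Toy predictor: returns how many patches were already generated."""
    return sum(cell is not None for row in canvas for cell in row)


if __name__ == "__main__":
    # Each cell shows the step at which it was generated.
    for row in generate_progressively(2, 3, count_filled):
        print(row)
```

Because each call receives the partially filled canvas, every new patch can be conditioned on everything generated before it, which is the property the progressive strategy relies on.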

Qwen VLo is also strong at content understanding and re-creation. Compared with previous multimodal models, it better maintains semantic consistency during generation, avoiding problems such as rendering a car as some other object or losing key structural features of the original image. For example, when a user provides a photo of a car and asks for a color change, Qwen VLo can accurately identify the car model, retain its original structural features, and change the color naturally, so that the result is both realistic and in line with what the user asked for.

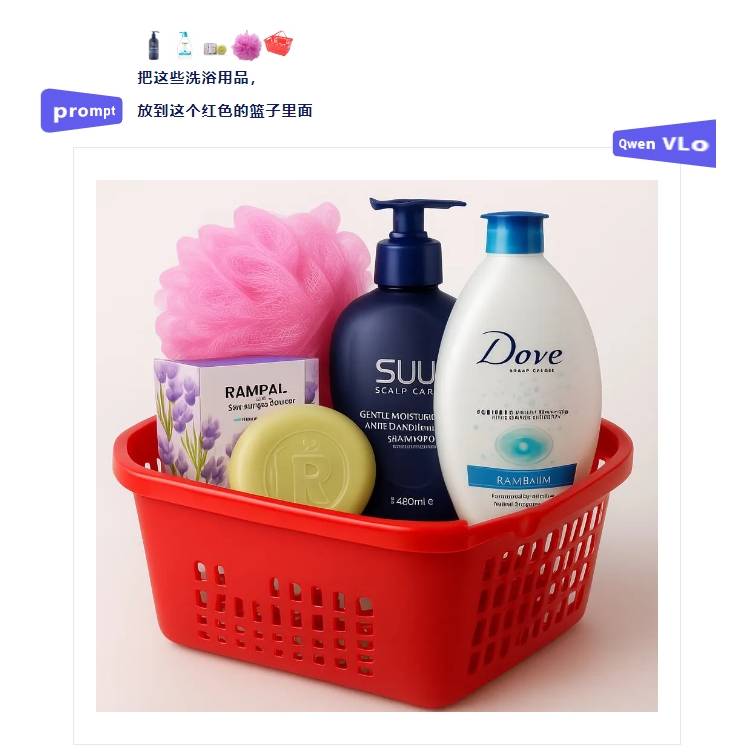

Qwen VLo also supports open-ended editing instructions. Users can give creative instructions in natural language, such as changing the art style, adding elements, or adjusting the background, and the model responds flexibly with results that match their expectations. This covers art style transfer, scene reconstruction, and detail refinement.

Notably, Qwen VLo accepts instructions in multiple languages, including Chinese and English, giving users around the world a unified and convenient way to interact with it. Whichever language they use, users only need to describe their needs briefly for the model to understand them and produce the desired result.

In practice, Qwen VLo covers a range of functions. It can generate and modify images directly, for example by replacing backgrounds, adding subjects, or performing style transfer. It can also carry out broader edits from open-ended instructions, including visual perception tasks such as detection and segmentation. In addition, Qwen VLo supports multi-image input and generation, as well as image detection and annotation.

Beyond combined text-and-image input, Qwen VLo also supports generating images directly from text, including general images and posters in Chinese and English. The model is trained with dynamic resolution and supports generation at any resolution and aspect ratio, so users can produce images suited to different scenarios as needed.

Qwen VLo is currently in the preview stage. Although it has shown powerful capabilities, it still has shortcomings: generated output may, for example, be factually inaccurate or not fully consistent with the original image. The development team says it will continue to iterate on the model to improve its performance and stability.

Try it at: chat.qwen.ai