Baidu recently announced the open-sourcing of its ERNIE Bot 4.5 series, releasing ten models in total, including mixture-of-experts (MoE) models with 47B and 3B activated parameters and a dense model with 0.3B parameters. The release includes both the pre-trained weights and the inference code, marking a significant step forward in Baidu's large model efforts.

The newly released models can be downloaded and deployed from platforms such as PaddlePaddle Starry Community and Hugging Face, and Baidu Intelligent Cloud's Qianfan Large Model Platform offers corresponding API services. With this move, Baidu joins Tencent, Alibaba, and ByteDance as another major Chinese tech company actively participating in open source, signaling its commitment in the era of large model applications.

Image source note: The image is AI-generated, and the image licensing service provider is Midjourney.

In February, Baidu revealed its launch plan for the ERNIE Bot 4.5 series and announced that the models would be open-sourced by June 30. Although the upgraded ERNIE Bot 4.5 Turbo is not included in this open-source release, the release has still sparked discussion among developers. Many developers believe the smaller-parameter models are well suited to memory-constrained configurations while still performing well, and that they could compete with other large models such as DeepSeek V3 and Alibaba's Qwen.
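To make the point about memory-constrained configurations concrete, the following is a minimal back-of-the-envelope sketch. It assumes weight memory is roughly the parameter count multiplied by the bytes used per parameter, and it ignores activations, KV cache, and framework overhead. The function name and the dtype table are illustrative and are not part of Baidu's release.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: memory ~= parameter count x bytes per parameter. Activations,
# KV cache, and runtime overhead are deliberately ignored.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}  # common storage widths


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    dense_params = 0.3e9  # the 0.3B dense model mentioned in the article
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(dense_params, dtype):.1f} GB")
```

Under these assumptions, the 0.3B dense model needs well under 2 GB for its weights at any of these precisions. For the MoE models, the relevant figure would typically be the total parameter count rather than the activated count, because every expert normally has to be loaded even though only some are used per token.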

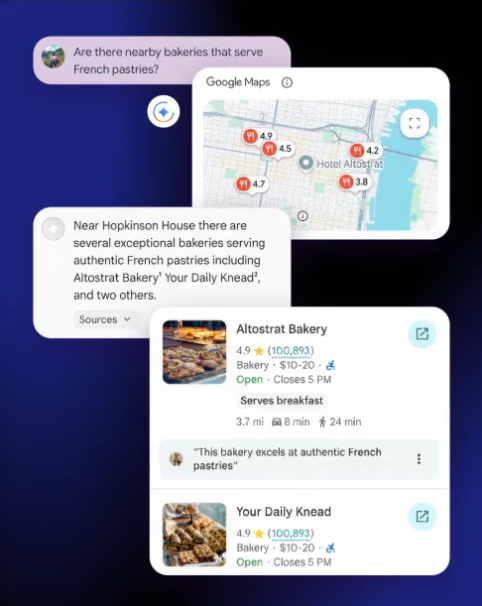

The ERNIE Bot 4.5 series is a native multi-modal foundation model. In multiple tests, it has reportedly outperformed competitors such as GPT-4o. The model can understand text and also process visual information such as photos and videos, demonstrating strong capabilities in multi-modal understanding and generation.

The open-sourced ERNIE Bot 4.5 series builds on three key technical innovations: first, multi-modal heterogeneous MoE pre-training, which enables the model to capture information from both text and visual modalities; second, efficient infrastructure for fast training and inference; and third, modality-specific post-training, which helps the model perform better across diverse practical applications.
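For readers unfamiliar with what "activated parameters" means in an MoE model, the sketch below shows generic top-k expert routing in plain Python. It is an illustration of the general technique only, not Baidu's heterogeneous multi-modal design; the function names, the toy scalar "experts", and the choice of k are all hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = route_top_k(router_scores, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


if __name__ == "__main__":
    # Hypothetical toy setup: 8 experts, each a simple scalar multiplier.
    experts = [lambda x, m=m: m * x for m in range(1, 9)]
    random.seed(0)
    scores = [random.uniform(-1, 1) for _ in experts]  # stand-in router output
    gates = route_top_k(scores, k=2)
    print("active experts:", sorted(gates))
    print("fraction of experts used:", len(gates) / len(experts))
    print("output:", moe_forward(3.0, experts, scores, k=2))
```

Only the selected experts run for a given input, which is why an MoE model can report an activated parameter count (such as 47B or 3B) that is smaller than its total size.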

As global competition in the large model market intensifies, Baidu's open-source initiative puts pressure on closed-source model providers and raises the technical bar for the industry. It also gives developers and researchers more freedom, helping them iterate on and apply models more quickly and advancing artificial intelligence.