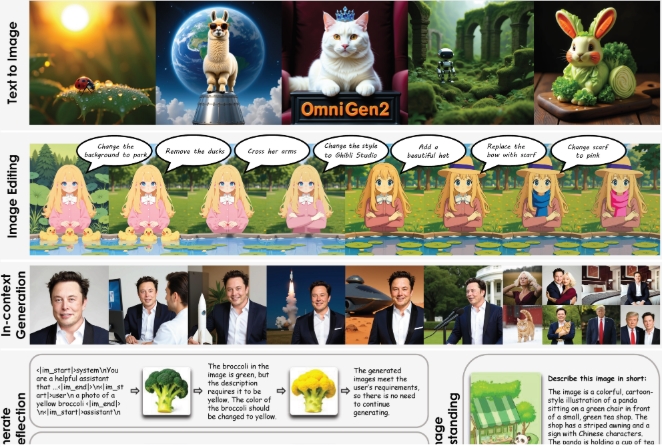

The Beijing Academy of Artificial Intelligence (BAAI) recently released OmniGen2, a new open-source system for text-to-image generation, image editing, and in-context image generation.

Unlike the first-generation OmniGen released in 2024, OmniGen2 uses two independent decoding paths, one for text and one for images, each with its own parameters and a decoupled image tokenizer. This design lets the model preserve its text-generation capabilities while improving its multimodal performance.

At the core of OmniGen2 is a multimodal large language model (MLLM) built on the Qwen2.5-VL-3B transformer. For image generation, the system uses a custom diffusion transformer with about 4 billion parameters. The model switches to image-generation mode automatically when it encounters the special "<|img|>" tag. OmniGen2 handles a wide range of prompts and artistic styles, although its photorealistic images still lack sharpness.
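The mode switch on the "<|img|>" tag can be illustrated with a toy router. This is a minimal sketch, not OmniGen2's implementation: `route_output` and `DecodeResult` are hypothetical names, and passing plain text to the image path is only a stand-in for whatever conditioning the real diffusion decoder receives.

```python
from dataclasses import dataclass, field
from typing import List

IMG_TOKEN = "<|img|>"  # special tag named in the article


@dataclass
class DecodeResult:
    text: str = ""
    # One entry per <|img|> tag: the context that would condition the image path.
    image_requests: List[str] = field(default_factory=list)


def route_output(tokens: List[str]) -> DecodeResult:
    """Hypothetical router: ordinary tokens stay on the text path, and each
    <|img|> tag hands the text generated so far to a (stubbed) image decoder."""
    result = DecodeResult()
    text_parts: List[str] = []
    for tok in tokens:
        if tok == IMG_TOKEN:
            # In the real system the diffusion transformer would take over here;
            # this sketch only records the preceding text as a placeholder.
            result.image_requests.append("".join(text_parts))
        else:
            text_parts.append(tok)
    result.text = "".join(text_parts)
    return result
```

For example, `route_output(["A ", "red ", "cat", "<|img|>"])` keeps "A red cat" as text and queues one image request conditioned on that text. Keeping the two paths separate mirrors the article's point that text generation is not disturbed by the image decoder.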

To train OmniGen2, the research team used about 140 million images from open-source datasets and proprietary collections. They also developed new techniques for extracting pairs of similar frames from videos (for example, the same face smiling and not smiling) and used a language model to generate matching editing instructions.
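One plausible way to mine such frame pairs is to compare per-frame embeddings and keep pairs that are similar but not identical, so that something visibly changed between them. The sketch below assumes the embeddings are already computed; `mine_frame_pairs` and its `low`/`high` cutoffs are hypothetical, and the article does not describe the team's actual selection method.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mine_frame_pairs(
    embeddings: Sequence[Sequence[float]],
    low: float = 0.85,   # assumed: below this, frames show different content
    high: float = 0.98,  # assumed: at or above this, frames are near-duplicates
) -> List[Tuple[int, int]]:
    """Return index pairs of frames that are similar but not identical.
    Each pair would then go to a language model (not shown) that writes
    an editing instruction describing the change between the two frames."""
    pairs: List[Tuple[int, int]] = []
    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            s = cosine(embeddings[i], embeddings[j])
            if low <= s < high:
                pairs.append((i, j))
    return pairs
```

The upper bound matters as much as the lower one: identical frames would produce an "edit" with no visible change, which is useless as a training example.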

Another highlight of OmniGen2 is its reflection mechanism, which lets the model assess its own generated images and refine them over multiple rounds. The system can identify defects in a generated image and propose specific corrections.
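The reflect-and-refine loop can be sketched as follows. `generate` and `critique` are hypothetical callables standing in for the model's generation and self-assessment steps, and `max_rounds` is an assumed cap; the article does not say how many rounds OmniGen2 runs or how it decides to stop.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures: generate(prompt, corrections) -> image,
# critique(prompt, image) -> list of defects (empty when satisfied).
Generate = Callable[[str, List[str]], str]
Critique = Callable[[str, str], List[str]]


def generate_with_reflection(
    prompt: str,
    generate: Generate,
    critique: Critique,
    max_rounds: int = 3,  # assumed cap on refinement rounds
) -> Tuple[str, int]:
    """Generate once, then repeatedly self-assess and regenerate using the
    critique as correction suggestions. Returns (final image, refinements)."""
    image = generate(prompt, [])
    refinements = 0
    while refinements < max_rounds:
        defects = critique(prompt, image)
        if not defects:  # the model judges its own output acceptable
            break
        image = generate(prompt, defects)  # retry, guided by the suggestions
        refinements += 1
    return image, refinements
```

With a toy `generate` that appends its corrections to the prompt and a `critique` that asks for a missing "hat", the loop stops after one refinement once the hat appears. The cap keeps a critic that is never satisfied from looping forever.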

To evaluate the system, the research team introduced the OmniContext benchmark, which covers three categories (characters, objects, and scenes) across eight subtasks with 50 examples each. GPT-4.1 served as the judge, scoring mainly on prompt adherence and subject consistency. OmniGen2 scored 7.18, the highest of any open-source model, while GPT-4o scored 8.8.

Although OmniGen2 performs well on several benchmarks, it still has limitations: English prompts work better than Chinese ones, changes to body shape remain difficult, and output quality depends on the quality of the input images. For ambiguous multi-image prompts, the system needs explicit instructions about where objects should be placed.

The research team plans to release the model, training data, and data construction pipeline on Hugging Face.

Key points:

🌟 OmniGen2 is an open-source text and image generation system that uses independent text and image decoding paths.

🎨 It generates images in a variety of artistic styles and can assess and refine its own output through self-reflection.

📈 OmniGen2 performs well on several benchmarks and sets a new record among open-source models for image editing.