In the field of artificial intelligence, and especially with the rapid progress of AI-generated content (AIGC), voice interaction has become an important research direction. Traditional large language models (LLMs) mainly process text and cannot directly generate natural speech, which limits how smoothly humans and computers can interact by voice.

To overcome this limitation, the Step-Audio team has open-sourced Step-Audio-AQAA, a new end-to-end large audio-language model. It takes raw audio as input and directly generates natural, fluent speech in response, making human-computer communication more natural.

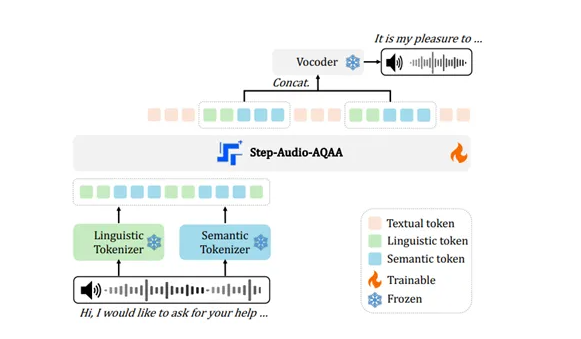

The architecture of Step-Audio-AQAA consists of three core modules: a dual-codebook audio tokenizer, a backbone LLM, and a neural vocoder. The dual-codebook audio tokenizer converts the input audio signal into structured token sequences. It is split into two parts: a linguistic tokenizer, which extracts the structured linguistic content of the speech, and a semantic tokenizer, which captures paralinguistic information such as emotion and tone. This dual-codebook design lets Step-Audio-AQAA capture both what is said and how it is said.
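To make the dual-codebook idea concrete, here is a minimal sketch of how the two token streams might be merged into a single sequence for the LLM. It relies on assumptions taken from the earlier Step-Audio report rather than from this article: linguistic tokens at about 16.7 Hz from a 1,024-entry codebook, semantic tokens at 25 Hz from a 4,096-entry codebook, interleaved in groups of 2 linguistic to 3 semantic tokens so that each group spans roughly the same 120 ms of audio. The function name `interleave` and the ID-offset scheme are hypothetical.

```python
from typing import List

# Assumed codebook sizes (from the Step-Audio report, not this article).
LINGUISTIC_VOCAB = 1024  # linguistic tokens, ~16.7 Hz
SEMANTIC_VOCAB = 4096    # semantic tokens, 25 Hz


def interleave(linguistic: List[int], semantic: List[int],
               ling_group: int = 2, sem_group: int = 3) -> List[int]:
    """Merge two token streams group by group (2 linguistic, then 3 semantic).

    Semantic IDs are shifted by LINGUISTIC_VOCAB so both codebooks can share
    one vocabulary without collisions (a hypothetical layout).
    """
    if any(not 0 <= t < LINGUISTIC_VOCAB for t in linguistic):
        raise ValueError("linguistic token out of range")
    if any(not 0 <= t < SEMANTIC_VOCAB for t in semantic):
        raise ValueError("semantic token out of range")
    merged: List[int] = []
    i = j = 0
    while i < len(linguistic) or j < len(semantic):
        merged.extend(linguistic[i:i + ling_group])
        merged.extend(t + LINGUISTIC_VOCAB for t in semantic[j:j + sem_group])
        i += ling_group
        j += sem_group
    return merged


# 2 linguistic tokens at ~16.7 Hz and 3 semantic tokens at 25 Hz each cover ~120 ms.
print(interleave([1, 2, 3, 4], [10, 11, 12, 13, 14, 15]))
# → [1, 2, 1034, 1035, 1036, 3, 4, 1037, 1038, 1039]
```

Interleaving by time-aligned groups, rather than concatenating the two streams, keeps the linguistic and paralinguistic tokens for the same stretch of audio next to each other in the sequence the LLM reads.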

These token sequences are then fed to the backbone LLM, Step-Omni, a pre-trained multimodal model with 130 billion parameters that can process text, speech, and images. It uses a decoder-only architecture, which processes the token sequences from the dual-codebook audio tokenizer efficiently. The backbone interprets the meaning of the audio query and generates the audio tokens from which the spoken response is later synthesized.
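A decoder-only model produces its output one token at a time, with each step conditioned only on the tokens before it. The loop below is a minimal sketch of that autoregressive pattern using greedy decoding. `next_token_logits` is a hypothetical stand-in for the backbone's forward pass, not Step-Omni's actual inference API, and production systems typically use sampling and key-value caching instead of re-scoring the whole prefix at every step.

```python
from typing import Callable, List, Sequence


def greedy_decode(
    next_token_logits: Callable[[Sequence[int]], List[float]],
    prompt: List[int],
    eos_id: int,
    max_new_tokens: int,
) -> List[int]:
    """Autoregressively extend `prompt`, picking the highest-scoring token each step."""
    seq = list(prompt)
    for _ in range(max_new_tokens):
        # Causal decoding: the model only sees the prefix generated so far.
        logits = next_token_logits(seq)
        next_id = max(range(len(logits)), key=logits.__getitem__)
        seq.append(next_id)
        if next_id == eos_id:
            break
    return seq[len(prompt):]  # return only the newly generated tokens


# Hypothetical toy "model" over a 5-token vocabulary: it always prefers the
# token after the last one, so generation counts upward until EOS (id 4).
def toy_model(prefix: Sequence[int]) -> List[float]:
    vocab_size = 5
    preferred = (prefix[-1] + 1) % vocab_size
    return [1.0 if t == preferred else 0.0 for t in range(vocab_size)]


print(greedy_decode(toy_model, prompt=[1], eos_id=4, max_new_tokens=10))
# → [2, 3, 4]
```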

Finally, the audio token sequences generated by the backbone are passed to the neural vocoder, which synthesizes high-quality speech waveforms from the discrete audio tokens. The vocoder uses a U-Net architecture for efficient, accurate audio processing. With this design, Step-Audio-AQAA can quickly produce a natural, fluent spoken answer once it has understood an audio question, giving users a better interactive experience.
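The defining feature of a U-Net is its shape: the signal is progressively downsampled, processed at a coarse resolution, and upsampled again, with skip connections adding each level's input back to the matching upsampled output. The toy function below illustrates only that contract-expand-skip pattern on a 1-D signal. It has no learned weights and is not the Step-Audio-AQAA vocoder, whose layer details this article does not give; the average-pool and nearest-neighbour resampling choices are assumptions made for simplicity.

```python
from typing import List


def downsample(x: List[float]) -> List[float]:
    # Average-pool adjacent pairs, halving the time resolution
    # (a trailing odd sample is dropped).
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x) - 1, 2)]


def upsample(x: List[float], length: int) -> List[float]:
    # Nearest-neighbour upsampling back to `length` samples.
    y = [v for v in x for _ in range(2)][:length]
    # Pad with the last value if the original length was odd.
    return y + [y[-1]] * (length - len(y))


def unet_1d(x: List[float], depth: int = 2) -> List[float]:
    """Toy 1-D U-Net: contract, recurse, expand, and add a skip connection per level."""
    if depth == 0 or len(x) < 2:
        # Bottleneck: a trained model would apply learned layers here.
        return list(x)
    coarse = unet_1d(downsample(x), depth - 1)
    expanded = upsample(coarse, len(x))
    # Skip connection: add this level's input to its upsampled output.
    return [a + b for a, b in zip(x, expanded)]


print(unet_1d([1.0, 1.0, 1.0, 1.0]))
# → [3.0, 3.0, 3.0, 3.0]
```

In a trained network, each level would apply learned convolutions where this sketch simply passes values through; the skip connections are what let fine temporal detail survive the trip through the coarse bottleneck.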

This release marks an important advance in human-computer audio interaction. As an open-source model, Step-Audio-AQAA gives researchers a powerful tool and lays a solid foundation for future intelligent voice applications.

Open-source repository: https://huggingface.co/stepfun-ai/Step-Audio-AQAA

Key points:

🔊 The Step-Audio team's open-source Step-Audio-AQAA can directly generate natural speech from audio input, enhancing the human-computer interaction experience.

📊 The model architecture consists of three modules, a dual-codebook audio tokenizer, a backbone LLM, and a neural vocoder, which together capture complex information in speech, including paralinguistic cues such as emotion and tone.

🎤 The release of Step-Audio-AQAA marks an important advancement in voice interaction technology, providing new ideas for future intelligent voice applications.