Another major open-source release has arrived in AI. This morning, the large-model training platform Together.ai and Agentica jointly launched DeepSWE, an open-source AI agent framework. The system is built on Alibaba's latest open-source Qwen3-32B model and was trained entirely with reinforcement learning.

The DeepSWE release is hosted on Hugging Face. In addition to the model weights, the training methods, logs, and datasets are all publicly available, so that developers can study and improve the agent system.

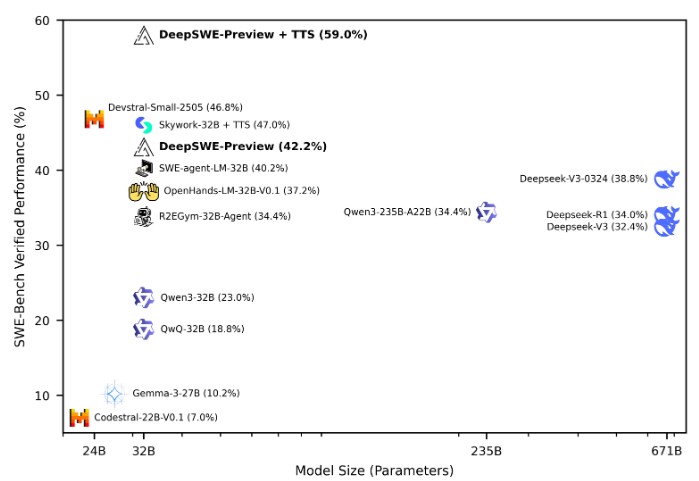

On SWE-Bench-Verified, DeepSWE was evaluated with a maximum context length of 64k tokens and a maximum of 100 environment steps. Averaged over 16 runs, its Pass@1 accuracy reached 42.2%. With hybrid test-time scaling, performance rose to 59%, placing it at the top among all open-source agent frameworks.
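A Pass@1 figure reported over several runs is commonly computed by scoring each run separately (the fraction of tasks solved on the first attempt) and then averaging across runs. The sketch below illustrates that arithmetic only. The function name `pass_at_1` and the toy results are hypothetical and are not taken from the DeepSWE evaluation code.

```python
from statistics import mean


def pass_at_1(runs):
    """Average per-run success rate.

    `runs` is a list of runs; each run is a list of booleans,
    one per task, where True means the single attempt solved the task.
    """
    if not runs or any(len(run) == 0 for run in runs):
        raise ValueError("every run needs at least one task result")
    per_run = [sum(run) / len(run) for run in runs]
    return mean(per_run)


# Hypothetical toy data: 3 runs over the same 4 tasks.
toy_runs = [
    [True, False, True, False],   # 2/4 solved
    [True, True, False, False],   # 2/4 solved
    [False, False, True, False],  # 1/4 solved
]

print(f"Pass@1 = {pass_at_1(toy_runs):.3f}")
```

Averaging over many runs reduces the noise that comes from sampling a single trajectory per task, which is presumably why the 42.2% figure is reported over 16 runs rather than one.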

DeepSWE was trained with rLLM, a framework designed specifically for post-training language agents. Training ran for six days on 64 H100 GPUs and used 4,500 real-world software engineering tasks from the R2E-Gym training environment. The tasks included resolving GitHub issues, implementing new code features, and debugging, reflecting the diversity of real-world software engineering.

During training, DeepSWE learned to navigate large codebases, make targeted code edits, run shell commands for building and testing, and refine its solutions when handling real pull requests. For data curation, the team used a subset of 4,500 problems from R2E-Gym to keep the training data clean and relevant.

The training environment was built around R2E-Gym, which provides scalable, high-quality executable software engineering environments. The reward is a sparse outcome reward: a positive reward is given only when the generated patch passes all tests, so the learning signal is tied directly to working fixes.
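The all-or-nothing reward described above can be sketched in a few lines. This is a minimal illustration, not the R2E-Gym or rLLM implementation: the function name `outcome_reward`, the test names, and the choice of 1.0 and 0.0 as reward values are assumptions made for the example.

```python
def outcome_reward(test_results):
    """Sparse outcome reward for one generated patch.

    `test_results` maps a test name to True (passed) or False (failed).
    The reward is 1.0 only if every test passed; a patch with no test
    results is treated as a failure (an assumption of this sketch).
    """
    if not test_results:
        return 0.0
    return 1.0 if all(test_results.values()) else 0.0


# Hypothetical test outcomes for two candidate patches.
good_patch = {"test_parse": True, "test_edge_case": True}
partial_patch = {"test_parse": True, "test_edge_case": False}

print(outcome_reward(good_patch))     # all tests pass
print(outcome_reward(partial_patch))  # one failure gives no reward
```

Because a partially correct patch earns nothing, the signal is sparse: the agent is rewarded only for a complete fix, not for intermediate progress.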

Training also used GRPO++, an improved variant of the GRPO algorithm that makes training more stable and efficient through several modifications. In addition, the researchers found that increasing the number of output tokens had only a limited effect on software engineering tasks, while increasing the number of rollouts significantly improved model performance.
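The article does not spell out what GRPO++ changes, so the sketch below shows only the basic idea behind plain GRPO that such variants build on: several rollouts are sampled for the same task, and each rollout's advantage is its reward relative to the group average. The function name `group_advantages` and the `normalize_std` switch are illustrative assumptions, not part of the rLLM API.

```python
from statistics import mean, pstdev


def group_advantages(rewards, normalize_std=True, eps=1e-6):
    """Group-relative advantages for rollouts of a single task.

    Each advantage is the rollout's reward minus the group mean.
    With `normalize_std=True` the result is also divided by the
    group's standard deviation (plus `eps` to avoid division by zero).
    """
    if not rewards:
        raise ValueError("need at least one rollout reward")
    baseline = mean(rewards)
    centered = [r - baseline for r in rewards]
    if not normalize_std:
        return centered
    scale = pstdev(rewards) + eps
    return [c / scale for c in centered]


# Hypothetical group: 4 rollouts of one task, only the first one passed.
rewards = [1.0, 0.0, 0.0, 0.0]
print(group_advantages(rewards, normalize_std=False))
```

With a sparse pass-or-fail reward, a group in which every rollout fails (or every rollout passes) yields all-zero advantages and therefore no learning signal, which is one intuition for why sampling more rollouts per task can matter.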

Taken together, this work makes DeepSWE a promising AI agent system and advances the practical application of reinforcement learning.

Open source address: https://huggingface.co/agentica-org/DeepSWE-Preview

Key points:

🌟 DeepSWE is based on the Qwen3-32B model and was trained entirely with reinforcement learning. Its weights, training methods, logs, and datasets have all been released.

🏆 On SWE-Bench-Verified, DeepSWE achieved a Pass@1 accuracy of 42.2%, rising to 59% with hybrid test-time scaling, making it the top performer among all open-source agents.

💡 Trained with the rLLM framework and the improved GRPO++ algorithm, DeepSWE demonstrated strong learning capability and practical potential on real software engineering tasks.