SciArena, a new open platform, is now live. It evaluates how well large language models (LLMs) handle scientific literature tasks, using human preference judgments. Early results reveal significant performance gaps between models.

SciArena was developed by researchers from Yale University, New York University, and the Allen Institute for AI. It is designed to systematically compare proprietary and open-source LLMs on scientific literature tasks, an area that has so far lacked systematic evaluation.

Unlike traditional benchmarks, SciArena follows the Chatbot Arena approach and relies on real researchers for evaluation. A user submits a scientific question, receives two long-form, citation-backed answers generated by different models, and then votes for the better one. The supporting literature is retrieved through a customized ScholarQA pipeline.
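
The article does not say how SciArena turns individual votes into a ranking. As a rough illustration only, the sketch below assumes an online Elo update, one method Chatbot Arena has used for this purpose: each vote moves the winner's rating up and the loser's down, by an amount that depends on how surprising the result was. The function name `elo_ratings`, the K-factor of 4, the starting rating of 1000, and the model names and votes are all hypothetical.

```python
from collections import defaultdict


def elo_ratings(votes, k=4.0, base=1000.0, scale=400.0):
    """Compute online Elo ratings from pairwise votes (hypothetical sketch).

    Each vote is (model_a, model_b, winner), where winner is "a", "b", or "tie".
    """
    ratings = defaultdict(lambda: base)  # unseen models start at the base rating
    for model_a, model_b, winner in votes:
        ra, rb = ratings[model_a], ratings[model_b]
        # Expected score of model_a against model_b under the Elo model.
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / scale))
        # Actual score for model_a: 1 for a win, 0 for a loss, 0.5 for a tie.
        score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Zero-sum update: whatever model_a gains, model_b loses.
        ratings[model_a] = ra + k * (score_a - expected_a)
        ratings[model_b] = rb + k * ((1.0 - score_a) - (1.0 - expected_a))
    return dict(ratings)


if __name__ == "__main__":
    # Made-up votes for illustration; these are not SciArena data.
    votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "a"),
        ("model-x", "model-z", "tie"),
    ]
    ranking = sorted(elo_ratings(votes).items(), key=lambda kv: kv[1], reverse=True)
    for name, rating in ranking:
        print(f"{name}: {rating:.1f}")
```

Because online Elo processes votes one at a time, the final ratings depend on vote order; arena-style leaderboards often fit a Bradley-Terry model over all votes at once instead, which removes that dependence.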

To date, the platform has collected more than 13,000 evaluations from 102 researchers across the natural sciences, engineering, life sciences, and social sciences, covering question types such as concept explanation and literature retrieval.

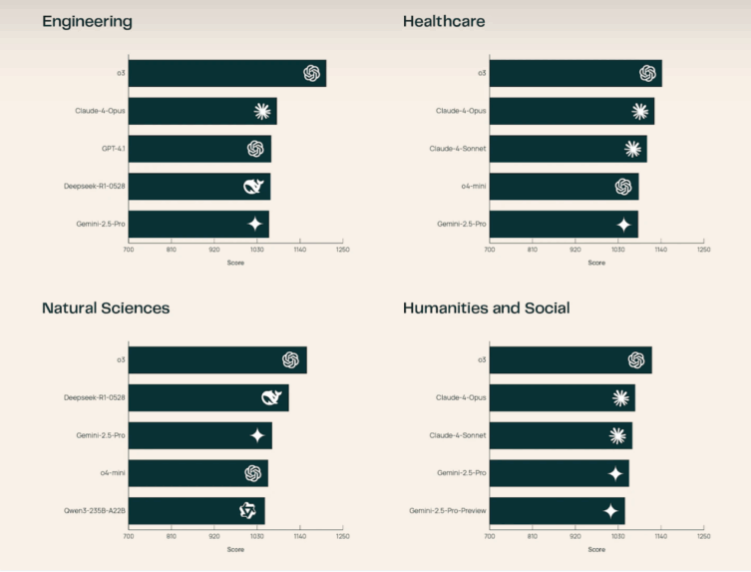

OpenAI's o3 currently tops the leaderboard, followed by Claude-4-Opus and Gemini-2.5-Pro. Among open-source models, DeepSeek-R1-0528 stands out, even outperforming several proprietary systems. According to the research team, o3 performs particularly well in the natural sciences and engineering.

The researchers also found that users care most about whether each citation actually supports the statement it is attached to, not simply how many citations an answer contains. Factors such as answer length have less influence on votes in SciArena than on platforms like Chatbot Arena or Search Arena.

Automated evaluation, however, remains a challenge. The team also released SciArena-Eval, a benchmark that tests how well language models can judge other models' answers. Even the best-performing judge models agree with human preferences only about 65% of the time, highlighting the limits of current LLM-as-a-Judge systems in scientific domains.
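
Agreement with human preferences, the metric behind the 65% figure, can be read as the fraction of comparisons in which the judge model picks the same answer as the human voter. The sketch below assumes that simple definition and binary "a"/"b" labels; the function `judge_agreement` and the example labels are hypothetical, and SciArena-Eval's exact scoring may differ.

```python
def judge_agreement(human_prefs, judge_prefs):
    """Fraction of comparisons where the judge's choice matches the human's.

    Both arguments are equal-length sequences of labels such as "a" or "b"
    (hypothetical format), one label per pairwise comparison.
    """
    if len(human_prefs) != len(judge_prefs):
        raise ValueError("preference lists must have equal length")
    if not human_prefs:
        raise ValueError("need at least one comparison")
    # Count positions where the judge picked the same answer as the human.
    matches = sum(h == j for h, j in zip(human_prefs, judge_prefs))
    return matches / len(human_prefs)


if __name__ == "__main__":
    # Made-up labels for illustration; these are not SciArena-Eval data.
    human = ["a", "b", "a", "a", "b"]
    judge = ["a", "a", "a", "b", "b"]
    print(judge_agreement(human, judge))
```

With only two answers to choose from, a judge that guessed at random would agree about half the time, so 65% is only modestly above chance.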

SciArena is publicly accessible, and its code, data, and the SciArena-Eval benchmark are released as open source. The platform aims to support the development of models that better serve researchers' needs in scientific information tasks. The team also plans to add support for evaluating agent-based research systems.