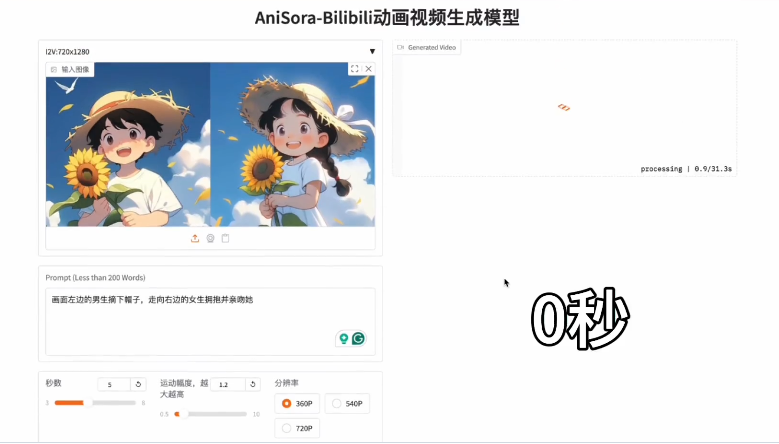

In July 2025, Bilibili (B站) announced a major update to its open-source anime video generation model AniSora with the official release of AniSora V3. Part of the Index-AniSora project, V3 builds on earlier versions to further improve generation quality, motion smoothness, and style diversity, giving creators in the anime, manga, and VTuber communities more powerful tools. Below, AIbase takes a closer look at the technical breakthroughs, application scenarios, and industry impact of AniSora V3.

Technical Upgrades: Higher Quality and Precise Control

AniSora V3 builds on the open-source CogVideoX-5B and Wan2.1-14B video foundation models (developed by Zhipu AI and Alibaba, respectively), on which Bilibili's earlier AniSora releases were based, and adds a Reinforcement Learning from Human Feedback (RLHF) framework that significantly improves the visual quality and motion consistency of generated videos. It supports one-click generation of anime video in a range of styles, including anime series clips, Chinese original animation, manga-to-video adaptations, and VTuber content.

Core upgrades include:

- Optimized Spatiotemporal Mask Module: V3 strengthens spatiotemporal control, supporting more complex animation tasks such as fine-grained control of character expressions, dynamic camera movement, and image guidance applied to local regions. For example, the prompt "five girls dancing while the camera zooms in, raising their left hands to the top of their heads and then lowering them to their knees" produces a smooth dance animation in which camera and character movements stay synchronized.

- Dataset Expansion: V3 continues to train on more than 10 million high-quality anime video clips (extracted from 1 million raw videos) and adds a new data-cleaning pipeline to keep the generated content stylistically consistent and rich in detail.

- Hardware Optimization: V3 adds native support for the Huawei Ascend 910B NPU and was trained entirely on Chinese-made chips. Inference is about 20% faster, producing a 4-second high-definition video in just 2 to 3 minutes.

- Multi-task Learning: V3 improves multi-task capabilities, supporting video generation from a single frame, keyframe interpolation, and lip synchronization, which are especially useful for manga adaptations and VTuber content creation.
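The spatiotemporal mask and multi-task features above can be pictured as a single conditioning mask that marks which frames, and which regions within them, are supplied by the user rather than generated. The sketch below is a minimal, hypothetical illustration of that idea in plain Python; the function names `build_spatiotemporal_mask` and `guided_fraction` and the (top, left, bottom, right) region format are assumptions for explanation only, not AniSora's actual API or data format.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical illustration only: AniSora's real mask format is not documented here.
# mask[t][y][x] == 1 means the pixel is supplied by a guide image (condition),
# 0 means the model must generate it.

Region = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive
Mask = List[List[List[int]]]


def build_spatiotemporal_mask(
    num_frames: int,
    height: int,
    width: int,
    guided_frames: Dict[int, Optional[Region]],
) -> Mask:
    """Build a frame-by-pixel conditioning mask.

    guided_frames maps a frame index to a Region (only part of the frame is
    guided) or to None (the whole frame is guided, e.g. a keyframe).
    """
    mask = [[[0] * width for _ in range(height)] for _ in range(num_frames)]
    for t, region in guided_frames.items():
        if not 0 <= t < num_frames:
            raise ValueError(f"frame index {t} out of range")
        top, left, bottom, right = region if region is not None else (0, 0, height, width)
        if not (0 <= top <= bottom <= height and 0 <= left <= right <= width):
            raise ValueError(f"region {region} does not fit a {height}x{width} frame")
        for y in range(top, bottom):
            for x in range(left, right):
                mask[t][y][x] = 1
    return mask


def guided_fraction(mask: Mask) -> List[float]:
    """Share of pixels in each frame that come from guidance rather than generation."""
    return [sum(map(sum, frame)) / (len(frame) * len(frame[0])) for frame in mask]


if __name__ == "__main__":
    # Single image to video: only frame 0 is given.
    print(guided_fraction(build_spatiotemporal_mask(4, 2, 2, {0: None})))  # [1.0, 0.0, 0.0, 0.0]
```

Under this sketch, single-image-to-video corresponds to `{0: None}` (only the first frame is given), keyframe interpolation to something like `{0: None, 15: None}`, and localized image guidance to a region on one frame such as `{0: (0, 0, 2, 4)}`. A production model would more likely apply such a mask to compressed latents than to raw pixels; the pixel grid here is only for readability.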

In the latest benchmark tests, AniSora V3 achieved state-of-the-art (SOTA) results for character consistency and motion smoothness on both VBench and double-blind subjective evaluations, performing especially well on complex motion (such as the exaggerated, physics-defying actions typical of anime).

Open Source Ecosystem: Community-driven and Transparent Development

The complete training and inference code for AniSora V3 was updated on GitHub on July 2, 2025. Developers can access the model weights and a dataset of 948 animation videos via Hugging Face. Bilibili describes AniSora as an "open-source gift to the anime world" and encourages the community to help improve the model. To obtain the V2.0 weights and full dataset access, users must fill out an application form and send it to the designated email address (yangsiqian@bilibili.com).

V3 also introduces what Bilibili describes as the first RLHF framework for anime video generation, fine-tuning the model with tools such as AnimeReward and GAPO so that outputs match human aesthetic preferences and anime style requirements. Community developers have already started building custom plugins on V3, for example to improve generation in specific anime styles (such as the Studio Ghibli style).
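The article does not describe how AnimeReward or GAPO work internally. As a generic point of reference only, RLHF pipelines commonly train a reward model on pairs of outputs where a human preferred one over the other, using the Bradley-Terry pairwise loss. The sketch below computes that standard loss from reward scores; it is not AniSora's AnimeReward or GAPO objective, and the function name `pairwise_preference_loss` is hypothetical.

```python
import math
from typing import Sequence


def pairwise_preference_loss(chosen: Sequence[float], rejected: Sequence[float]) -> float:
    """Mean Bradley-Terry loss over preference pairs.

    chosen[i] and rejected[i] are reward scores for the video a human preferred
    and the one they rejected in pair i. Loss per pair = -log(sigmoid(margin)).
    """
    if len(chosen) != len(rejected) or not chosen:
        raise ValueError("need two non-empty score lists of equal length")
    total = 0.0
    for r_chosen, r_rejected in zip(chosen, rejected):
        margin = r_chosen - r_rejected
        # -log(sigmoid(m)) == log(1 + exp(-m)); this form avoids overflow for large |m|
        total += max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
    return total / len(chosen)


if __name__ == "__main__":
    print(round(pairwise_preference_loss([0.0], [0.0]), 4))  # 0.6931
    print(pairwise_preference_loss([3.0, 1.0], [0.0, 0.5]) < math.log(2))  # True
```

The loss equals log 2 (about 0.693) when both videos receive the same score and shrinks toward zero as the preferred video's score pulls ahead, which is the signal that nudges a reward model toward human judgments.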

Application Scenarios: From Creativity to Business

AniSora V3 supports a variety of anime styles, including Japanese anime, Chinese original animation, manga adaptations, VTuber content, and guichu (parody remix) animation, covering 90% of anime video application scenarios. Specific applications include:

- Single Image to Video: Users upload a high-quality anime image and provide a text prompt (e.g., "the character waves while sitting in a moving car, with hair blowing in the wind") to generate a dynamic video that keeps the character's details and style consistent.

- Manga Adaptation: Generates animated videos with lip synchronization and motion from comic panels, useful for quickly producing trailers or short animations.

- VTuber and Games: Supports rapid generation of character animations, helping independent creators and game developers quickly test character movements.

- High-resolution Output: Generated videos can reach 1080p, suitable for professional presentation on social media and streaming platforms.

In AIbase's testing, V3 produced about 15% fewer artifacts when generating complex scenes (such as multi-character interactions and dynamic backgrounds), with an average generation time of 2.5 minutes per 4-second video.
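The speed figures quoted in this article can be put on a common scale with some simple arithmetic. Assuming that "20% faster inference" means 1.2 times the previous throughput (the article does not define it), the per-clip and per-second numbers work out as follows:

```latex
\begin{align*}
\text{reported range: } & \frac{120\ \text{s}}{4\ \text{s}} = 30 \quad\text{to}\quad \frac{180\ \text{s}}{4\ \text{s}} = 45\ \text{s of compute per second of video} \\
\text{AIbase average: } & \frac{2.5 \times 60\ \text{s}}{4\ \text{s}} = \frac{150\ \text{s}}{4\ \text{s}} = 37.5\ \text{s of compute per second of video} \\
\text{20\% speedup: } & t_{\text{new}} = \frac{t_{\text{old}}}{1.2} \approx 0.833\, t_{\text{old}}
\end{align*}
```

Under that reading, a 20% speed increase trims wall-clock time by about 17%, not 20%. The hardware used for these timings is not specified.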

The release of AniSora V3 further lowers the barrier to anime creation, enabling independent creators and small teams to produce high-quality animation at low cost. Unlike general-purpose video generation models such as OpenAI's Sora or Kuaishou's Kling, AniSora V3 focuses specifically on anime, filling a gap in the market. Compared with ByteDance's EX-4D, it concentrates on 2D/2.5D anime styles rather than 4D multi-view generation, reflecting a different technical approach.

Project: https://t.co/I3HPKPvsBV