ByteDance has open-sourced VINCIE-3B, a 3-billion-parameter model for context-aware image editing built on its internal MM-DiT architecture. The model breaks through the limitations of traditional image editing: it is the first to learn context-aware image editing from video data alone, without relying on complex segmentation or restoration models to generate training data. The release of VINCIE-3B brings new possibilities to creative design, film post-production, and content generation. AIbase takes an in-depth look at its technical highlights, application scenarios, and industry impact.

Technical Breakthrough: From Video to Contextual Editing

Traditional image editing models usually rely on task-specific data pipelines in which expert models (such as segmentation or restoration models) generate the training data, a process that is costly and complex. VINCIE-3B instead learns directly from videos by converting them into interleaved multimodal sequences (text + images), and from these it acquires context-aware image editing. Specific technical highlights include:

- Video-driven Training: VINCIE-3B takes consecutive frames from videos and automatically derives text descriptions and image sequences from them to build multimodal training data. This avoids reliance on traditional expert models and significantly reduces data preparation costs.

- Block-Causal Diffusion Transformer: The model adopts a block-causal attention mechanism that applies causal attention across text and image blocks and bidirectional attention within each block. This design keeps information flowing efficiently while preserving causal consistency along the temporal sequence.

- Three Proxy Training Tasks: VINCIE-3B is trained on three proxy tasks: next-frame prediction, current-frame segmentation prediction, and next-frame segmentation prediction. Together they strengthen the model's understanding of dynamic scenes and object relationships.

- Combination of Clean and Noisy Conditions: To address the problem of noisy image inputs in diffusion models, VINCIE-3B feeds in clean and noisy image tokens together and uses attention masks to ensure that each noisy image is generated conditioned only on clean context, which improves editing quality.
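The two attention schemes described above can be illustrated with a small NumPy sketch. This is not ByteDance's implementation: the function names `block_causal_mask` and `clean_noisy_mask`, the token layout (all clean tokens first, then their noisy copies), and the simplification that every block has a noisy copy are assumptions made for illustration only. In each mask, `True` at position (i, j) means token i may attend to token j.

```python
import numpy as np


def block_causal_mask(block_lengths):
    """Block-causal mask: bidirectional inside a block, causal across blocks.

    block_lengths: number of tokens in each text/image block, in sequence order.
    """
    # Label every token with the index of the block it belongs to.
    block_ids = np.repeat(np.arange(len(block_lengths)), block_lengths)
    # Token i may attend to token j when j's block is not later than i's block.
    return block_ids[:, None] >= block_ids[None, :]


def clean_noisy_mask(block_lengths):
    """Hypothetical layout: [clean tokens | noisy tokens], same block structure.

    Clean tokens see clean context block-causally and never see noisy tokens.
    Noisy tokens see strictly earlier clean blocks plus their own noisy block.
    """
    block_ids = np.repeat(np.arange(len(block_lengths)), block_lengths)
    n = block_ids.size
    mask = np.zeros((2 * n, 2 * n), dtype=bool)
    # Clean -> clean: ordinary block-causal attention.
    mask[:n, :n] = block_ids[:, None] >= block_ids[None, :]
    # Noisy -> clean: only blocks that come strictly before the noisy block.
    mask[n:, :n] = block_ids[:, None] > block_ids[None, :]
    # Noisy -> noisy: only tokens of the same block (bidirectional).
    mask[n:, n:] = block_ids[:, None] == block_ids[None, :]
    return mask


if __name__ == "__main__":
    # Three blocks of 2, 3, and 2 tokens (for example text, image, text).
    print(block_causal_mask([2, 3, 2]).astype(int))
    # Two blocks of 2 tokens each, with clean and noisy copies.
    print(clean_noisy_mask([2, 2]).astype(int))
```

A mask like this would typically be passed to the attention layer so that disallowed positions are excluded before the softmax; how VINCIE-3B wires this in is not specified in the announcement.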

In performance tests, VINCIE-3B achieved state-of-the-art (SOTA) results on KontextBench and on a new multi-round image editing benchmark, excelling in particular at text following, character consistency, and complex scene editing (such as moving dynamic objects). Generating a high-quality edited image takes about 4 seconds on average, with inference reported to be 8 times faster than similar models.

Open Source Ecosystem: Empowering Global Developers

The complete code, model weights, and training data processing workflow of VINCIE-3B were released on GitHub and arXiv on June 14, 2025. Developers can apply for access to the full dataset (contact email: yangsiqian@bilibili.com). The model is initialized from ByteDance's MM-DiT (3B and 7B parameter versions) and is licensed under Apache 2.0, supporting non-commercial use. For commercial applications, contact ByteDance for permission.

ByteDance also launched a multi-round image editing benchmark built from real-world use cases, encouraging the community to verify and optimize model performance. On social media, developers welcomed the open-sourcing of VINCIE-3B, saying that its "learning from video" approach opens a new path for low-cost AI content creation.

Application Scenarios: A Win-Win for Creativity and Productivity

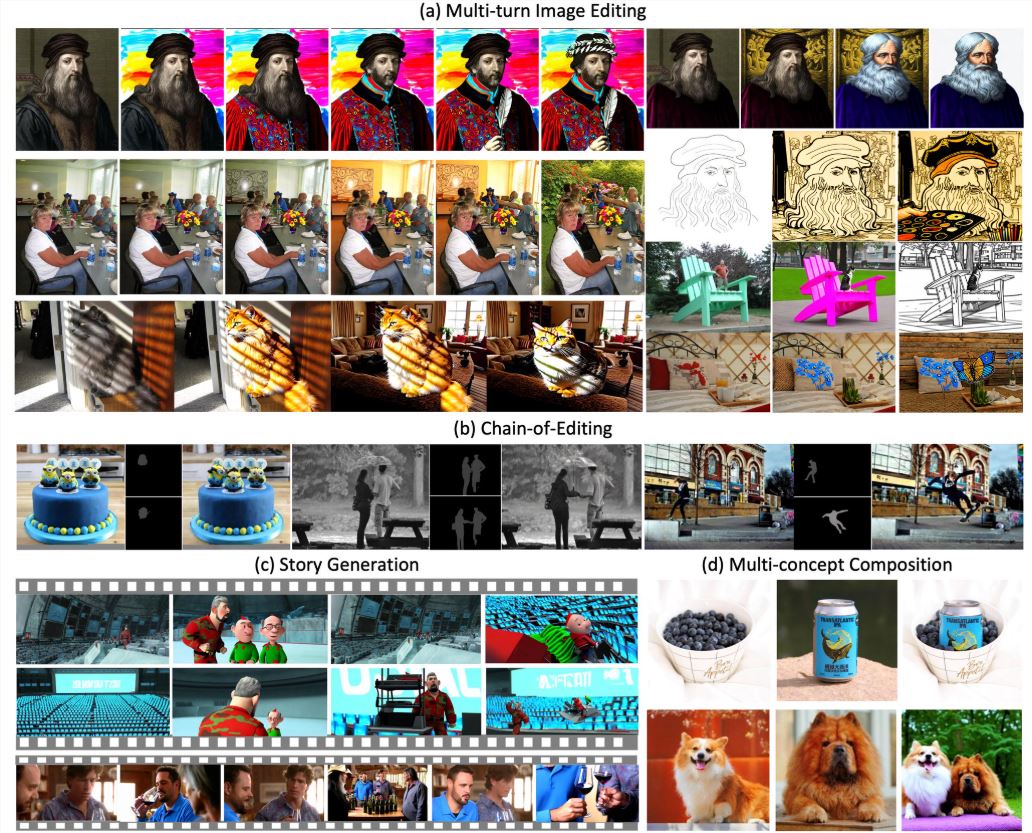

VINCIE-3B supports continuous editing conditioned on text and previous images, making it suitable for a range of scenarios:

- Film Post-Production: Extract characters or objects from video frames for continuous editing to fit different scenes, such as moving a character from indoors to outdoors, maintaining consistent lighting and perspective.

- Brand Marketing: Place products or logos in different backgrounds (e.g., coffee shops, outdoor billboards), automatically adjusting lighting, shadows, and perspective to simplify multi-scene promotional material production.

- Games and Animation: Adjust character actions or scene elements via text instructions, supporting rapid prototyping and animation previews.

- Social Media Content: Creators can generate dynamic sequences from a single image, such as turning a static character image into a dynamic meme.

For example, the prompt "Move the girl in a red dress from the park to the beach, keep the dress texture, adjust to sunset lighting" can generate a naturally blended image with highly realistic details and lighting effects. AIbase testing shows that VINCIE-3B maintains over 90% character consistency in multi-round editing, outperforming FLUX.1 Kontext [pro] in complex scenarios.

Limitations and Challenges

Despite its excellent performance, VINCIE-3B still has some limitations:

- Multi-Round Editing Limitations: Excessive rounds of editing may introduce visual artifacts, leading to a decrease in image quality. It is recommended that users complete editing within five rounds to maintain optimal results.

- Language Support: The model currently works best with English prompts; its text-following ability for Chinese and other languages is slightly weaker. ByteDance plans to improve multilingual capabilities in future versions.

- Copyright Issues: Part of the training data comes from public videos, which may give rise to copyright disputes. Users must ensure content compliance in commercial applications.

AIbase recommends that users test VINCIE-3B using the provided KontextBench dataset to optimize prompt design. For commercial users, it is advisable to contact ByteDance to clarify licensing terms.

Industry Impact: Redefining the Image Editing Paradigm

The release of VINCIE-3B marks a paradigm shift in image editing from static to dynamic, and from single-step edits to context-aware continuous editing. Compared to Black Forest Labs' FLUX.1 Kontext (which focuses on static image editing), VINCIE-3B achieves stronger dynamic scene understanding through video learning, making it particularly suitable for applications that require temporal consistency. Compared to Bilibili's AniSora V3 (which focuses on anime video generation), VINCIE-3B is more general, covering both real-world scenarios and virtual content generation.

ByteDance's open-source strategy further solidifies its leadership in AI creative tools. AIbase believes that VINCIE-3B's "video-to-image" training method may inspire other companies to explore similar paths, reduce the cost of AI model development, and promote the democratization of the creative industry.

Hugging Face: https://huggingface.co/ByteDance-Seed/VINCIE-3B