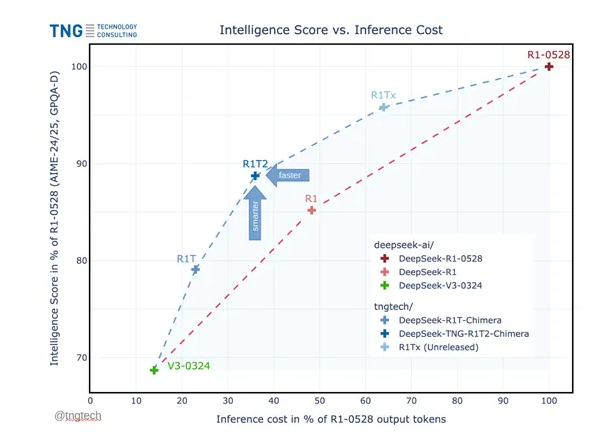

Recently, the German technology consulting company TNG released an enhanced DeepSeek variant, DeepSeek-TNG-R1T2-Chimera, a notable step forward in the inference efficiency and performance of large language models. The new version reportedly improves inference efficiency by 200% and significantly reduces inference costs through the innovative AoE architecture.

Innovative AoE Architecture

The Chimera version is a hybrid built from three DeepSeek parent models: R1-0528, R1, and V3-0324. It adopts a new AoE (Assembly of Experts) architecture, which builds on the fine granularity of the mixture-of-experts (MoE) architecture to use model parameters efficiently, improving inference performance and reducing the number of output tokens.

In several mainstream benchmarks (such as MT-Bench and AIME-2024), the Chimera version outperforms the standard R1 version, demonstrating strong reasoning capabilities and cost-effectiveness.

Advantages of MoE Architecture

To understand the AoE architecture, we first need to understand the mixture-of-experts (MoE) architecture. MoE divides the feed-forward layer of the Transformer into multiple "experts," and each input token is routed to only a subset of them. Because only part of the parameters is active for any given token, this improves the efficiency of the model while preserving its performance.
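The routing idea described above can be sketched in a few lines. This is a minimal illustration of generic top-k softmax routing in pure Python, not DeepSeek's actual router. The names `route_token` and `moe_layer`, the toy experts, and the choice of `top_k=2` are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of router scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k=2):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, router_scores, top_k=2):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](x) for i, w in route_token(router_scores, top_k))

# Toy example (hypothetical): four "experts" that simply scale a scalar input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
selected = route_token([0.1, 2.0, 0.3, 1.5], top_k=2)
output = moe_layer(1.0, experts, [0.1, 2.0, 0.3, 1.5], top_k=2)
```

Only two of the four experts run for this token, which is the source of the efficiency gain: the layer's total parameter count grows with the number of experts, while the compute per token depends only on `top_k`.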

For example, the Mixtral-8x7B model released by Mistral in 2023 activates only about 13 billion parameters per token, yet it can rival the LLaMA-2-70B model with its 70 billion parameters while running inference about six times faster.

The AoE architecture exploits the fine-grained structure of MoE, allowing researchers to build sub-models with specific capabilities from existing mixture-of-experts models. By interpolating and selectively merging the weight tensors of the parent models, the new model retains the strengths of its parents while its behavior can be adjusted flexibly according to actual needs.

Researchers selected DeepSeek-V3-0324 and DeepSeek-R1 as parent models. The two were produced with different fine-tuning techniques, and both demonstrate excellent reasoning capabilities and instruction following.

Weight Merging and Optimization

During the process of building the new sub-model, researchers first need to prepare the weight tensors of the parent models and directly manipulate them by parsing the weight files. Then, by defining weight coefficients, researchers can smoothly interpolate and merge the features of the parent models, generating new model variants.
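The interpolation step can be illustrated with a small sketch. It assumes each parent checkpoint has already been parsed into a dictionary that maps tensor names to flat lists of floats. A real implementation would operate on PyTorch tensors loaded from the weight files. The function names, the tensor name `layer.weight`, and the coefficients 0.25 and 0.75 are hypothetical.

```python
def interpolate_tensors(parent_tensors, coefficients):
    """Element-wise weighted average of same-shaped tensors (flat lists of floats)."""
    if len(parent_tensors) != len(coefficients):
        raise ValueError("need exactly one coefficient per parent tensor")
    if abs(sum(coefficients) - 1.0) > 1e-9:
        raise ValueError("coefficients should sum to 1")
    length = len(parent_tensors[0])
    if any(len(t) != length for t in parent_tensors):
        raise ValueError("parent tensors must have the same shape")
    return [
        sum(c * t[i] for c, t in zip(coefficients, parent_tensors))
        for i in range(length)
    ]

def merge_state_dicts(parents, coefficients):
    """Interpolate every tensor that the parents share by name."""
    return {
        name: interpolate_tensors([p[name] for p in parents], coefficients)
        for name in parents[0]
    }

# Toy parents (hypothetical values): 25% of the first parent, 75% of the second.
v3 = {"layer.weight": [0.0, 2.0, 4.0]}
r1 = {"layer.weight": [1.0, 2.0, 0.0]}
merged = merge_state_dicts([v3, r1], [0.25, 0.75])
```

Changing the coefficients moves the merged model smoothly between its parents, which is what makes it cheap to generate a family of variants from the same weight files.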

During the merging process, researchers introduced threshold control and difference filtering mechanisms to ensure that only tensors with significant differences are included in the merging scope, thus reducing model complexity and computational costs.
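One way to picture the threshold and difference filter is shown below. The source does not specify the exact metric, so this sketch assumes a relative Euclidean difference. The `threshold` value and all names are illustrative assumptions. Tensors whose parents barely differ are copied from the base parent unchanged, and only the rest are interpolated.

```python
import math

def normalized_difference(a, b):
    """Euclidean distance between two tensors, relative to the norm of the first."""
    diff = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    scale = math.sqrt(sum(x * x for x in a)) or 1.0  # avoid division by zero
    return diff / scale

def merge_with_threshold(base, other, weight_other, threshold):
    """Interpolate only tensors whose parents differ by more than `threshold`."""
    merged = {}
    for name, base_tensor in base.items():
        other_tensor = other[name]
        if normalized_difference(base_tensor, other_tensor) > threshold:
            merged[name] = [
                (1 - weight_other) * x + weight_other * y
                for x, y in zip(base_tensor, other_tensor)
            ]
        else:
            # Difference is negligible: keep the base parent's tensor as-is.
            merged[name] = list(base_tensor)
    return merged

# Toy parents (hypothetical): tensor "a" is nearly identical, tensor "b" differs a lot.
base = {"a": [1.0, 1.0], "b": [1.0, 0.0]}
other = {"a": [1.0, 1.01], "b": [0.0, 1.0]}
merged = merge_with_threshold(base, other, weight_other=0.5, threshold=0.1)
```

In this toy run only tensor `"b"` is merged, so the amount of work scales with how much the parents actually disagree rather than with the total number of tensors.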

In the MoE architecture, the routed expert tensors are a crucial component: they hold the weights of the expert modules that the router selects for each input token during inference. The AoE method pays special attention to how these tensors are merged. Researchers found that merging the routed expert tensors well can significantly enhance the reasoning capability of the sub-model.
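Treating the expert tensors separately from the rest of the model could look like the sketch below. The tensor naming pattern (`...mlp.experts.<n>...`) is an assumption about how the checkpoints are laid out, and the per-group weights are hypothetical. The point is only that the merge coefficient can depend on which group a tensor belongs to.

```python
import re

# Assumed naming scheme: routed experts look like "...mlp.experts.<n>.<proj>.weight".
ROUTED_EXPERT_PATTERN = re.compile(r"\.mlp\.experts\.\d+\.")

def is_routed_expert(tensor_name):
    """True if the tensor name matches the assumed routed-expert pattern."""
    return ROUTED_EXPERT_PATTERN.search(tensor_name) is not None

def merge_by_tensor_group(base, donor, donor_weight_routed, donor_weight_other):
    """Use one donor weight for routed-expert tensors and another for all others."""
    merged = {}
    for name, base_tensor in base.items():
        w = donor_weight_routed if is_routed_expert(name) else donor_weight_other
        merged[name] = [(1 - w) * x + w * y for x, y in zip(base_tensor, donor[name])]
    return merged

# Toy checkpoints (hypothetical names and values).
base = {
    "model.layers.3.mlp.experts.0.up_proj.weight": [0.0, 0.0],
    "model.layers.3.mlp.shared_experts.up_proj.weight": [0.0, 0.0],
    "model.layers.3.self_attn.o_proj.weight": [0.0, 0.0],
}
donor = {name: [1.0, 1.0] for name in base}
merged = merge_by_tensor_group(
    base, donor, donor_weight_routed=1.0, donor_weight_other=0.0
)
```

Here the routed-expert tensor is taken entirely from the donor while attention and shared-expert tensors stay with the base parent. Intermediate weights would blend the two groups to different degrees.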

Finally, using the PyTorch framework, researchers implemented the model merging. The merged weights were saved to new weight files, generating a new sub-model that demonstrates high efficiency and flexibility.

Open source address: https://huggingface.co/tngtech/DeepSeek-TNG-R1T2-Chimera