The Natural Language Processing team at the Institute of Computing Technology, Chinese Academy of Sciences, has released Stream-Omni, a multi-modal large model. Its core feature is support for interaction across several modalities at once, which gives users a more flexible and richer experience.

Comprehensive Support for Multi-Modal Interaction

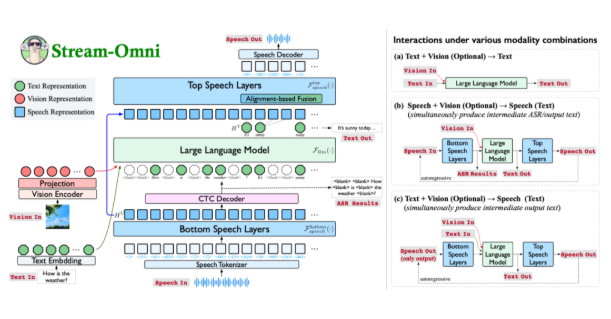

Stream-Omni is a GPT-4o-like multi-modal large model that handles text, vision, and speech. During online speech interaction, users can not only talk to the model but also receive intermediate text results in real time, which makes the exchange feel more natural, like "watching and listening at the same time."

Innovative Modal Alignment

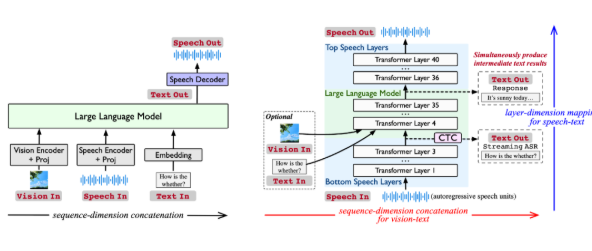

Most existing multi-modal large models concatenate the representations of the different modalities and feed the result into a large language model to generate a response. This approach depends heavily on large amounts of data and is inflexible. Stream-Omni reduces its dependence on large-scale tri-modal data by modeling the relationships between modalities in a more targeted way: it treats speech as semantically consistent with text and vision as semantically complementary to text, which allows more efficient modal alignment.
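The conventional approach described above can be pictured with a toy sketch. This is not Stream-Omni's code: the function name `concat_along_sequence` and the tiny two-dimensional embeddings are illustrative assumptions, chosen only to show what "concatenating representations" means for the input a language model receives.

```python
from typing import List

Vector = List[float]           # one embedding vector
EmbeddingSeq = List[Vector]    # the embeddings produced for one modality


def concat_along_sequence(*modalities: EmbeddingSeq) -> EmbeddingSeq:
    """Join per-modality embedding sequences end to end."""
    # All vectors must share one width, or the language model cannot read them.
    widths = {len(vec) for seq in modalities for vec in seq}
    if len(widths) > 1:
        raise ValueError(f"embedding widths differ: {sorted(widths)}")
    joined: EmbeddingSeq = []
    for seq in modalities:
        joined.extend(seq)
    return joined


# Toy embeddings of width 2 (hypothetical values).
vision = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]   # 3 image patches
speech = [[0.7, 0.8], [0.9, 1.0]]               # 2 speech frames
text = [[1.1, 1.2]]                             # 1 text token

llm_input = concat_along_sequence(vision, speech, text)
print(len(llm_input))  # 6
```

Every modality lengthens the single input sequence, and the model has to learn how the segments relate to each other purely from data, which is one way to read the article's point about data dependence.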

Powerful Speech Interaction Features

Like GPT-4o, Stream-Omni can output intermediate text transcriptions during speech interaction, a result of the way it models speech. This gives users a more complete multi-modal experience and is especially convenient in scenarios that require real-time speech-to-text conversion.
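From the user's side, a speech turn with intermediate text might look like the stream of events sketched below. The event kinds (`transcript`, `text`, `audio`) and the function `speech_turn` are hypothetical and do not reflect the project's actual interface; the sketch only illustrates text arriving alongside audio rather than after it.

```python
from typing import Iterator, List, Tuple

Event = Tuple[str, str]  # (kind, payload)


def speech_turn(user_words: List[str], reply_words: List[str]) -> Iterator[Event]:
    """Yield the intermediate text of a speech turn together with its audio."""
    # First intermediate result: the transcription of the user's speech.
    yield ("transcript", " ".join(user_words))
    for word in reply_words:
        # Second intermediate result: each piece of the text reply as it is produced.
        yield ("text", word)
        # Placeholder standing in for the synthesized audio of that piece.
        yield ("audio", f"<audio:{word}>")


events = list(speech_turn(["what", "time", "is", "it"], ["It", "is", "noon"]))
for kind, payload in events:
    print(f"{kind:10s} {payload}")
```

Because text and audio events are interleaved, a client can show the transcript and the written reply on screen while the spoken reply is still playing.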

Flexible Interaction with Any Modal Combination

Stream-Omni's design lets the visual encoder, the speech layers, and the large language model be combined flexibly, so the model can interact through various combinations of modalities. Users can choose whichever input suits the scenario, whether text, speech, or vision, and receive consistent responses.
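The idea of combining components per scenario can be sketched as follows. The class `OmniPipeline`, its fields, and the toy stand-in components are assumptions made for illustration; they are not taken from the Stream-Omni repository.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Encoder = Callable[[str], List[str]]


@dataclass
class OmniPipeline:
    """Hypothetical container: the LLM is required, the other parts are optional."""
    llm: Callable[[List[str]], str]
    vision_encoder: Optional[Encoder] = None
    speech_layers: Optional[Encoder] = None

    def respond(self, text: Optional[str] = None,
                image: Optional[str] = None,
                speech: Optional[str] = None) -> str:
        tokens: List[str] = []
        if image is not None:
            if self.vision_encoder is None:
                raise ValueError("no vision encoder configured")
            tokens += self.vision_encoder(image)
        if speech is not None:
            if self.speech_layers is None:
                raise ValueError("no speech layers configured")
            tokens += self.speech_layers(speech)
        if text is not None:
            tokens += text.split()
        if not tokens:
            raise ValueError("at least one input modality is required")
        return self.llm(tokens)


# Toy stand-ins so the sketch runs without any model weights.
def toy_vision(image: str) -> List[str]:
    return [f"<img:{image}>"]          # one placeholder token per image

def toy_speech(audio: str) -> List[str]:
    return audio.split()               # pretend the audio is already words

def toy_llm(tokens: List[str]) -> str:
    return f"response to {len(tokens)} tokens"


pipeline = OmniPipeline(llm=toy_llm, vision_encoder=toy_vision, speech_layers=toy_speech)
print(pipeline.respond(text="hello world"))
print(pipeline.respond(image="cat.png", speech="what is this"))
```

A text-only deployment in this sketch would simply omit `vision_encoder` and `speech_layers`, and any request using a missing component fails with a clear error.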

In multiple experiments, Stream-Omni's visual understanding was comparable to that of vision large models of the same scale, while its speech interaction capabilities significantly outperformed existing approaches. Its layer-dimension speech-text mapping mechanism provides precise semantic alignment between speech and text, which makes responses across modalities more consistent.

Stream-Omni offers new ideas for multi-modal interaction and, with its flexible and efficient design, advances the integration of text, vision, and speech technologies. There is still room for improvement in human-likeness and voice diversity, but the model lays a solid foundation for future multi-modal intelligent interaction.

Paper Link: https://arxiv.org/abs/2506.13642

Open Source Code: https://github.com/ictnlp/Stream-Omni

Model Download: https://huggingface.co/ICTNLP/stream-omni-8b