In August 2024, iFlytek officially launched its Spark Ultra-Human-like Interactive Technology. Built on end-to-end voice modeling and multi-dimensional emotional decoupling training, it delivers three core breakthroughs: faster responses, emotional resonance, and controllable speech expression. The technology can accurately identify emotional fluctuations in a user's speech, reply in real time with an appropriate tone, and adjust speaking rate, voice, and character settings on the fly, marking a leap in voice interaction from "functional implementation" to "emotional connection."

The Ultra-Human-like Interactive API is now officially available on the iFlytek Open Platform, giving developers low-cost access to the technology. In gaming, NPCs can adjust their dialogue strategies to match a player's emotions; in education, AI speaking partners can simulate how a real foreign teacher would respond; and in cultural tourism, "digital guides" have emerged that engage tourists in in-depth, role-played conversations. In a pilot project at one scenic spot, a guide AI equipped with this technology increased visitor dwell time by 40% and the repeat-purchase rate by 25%.

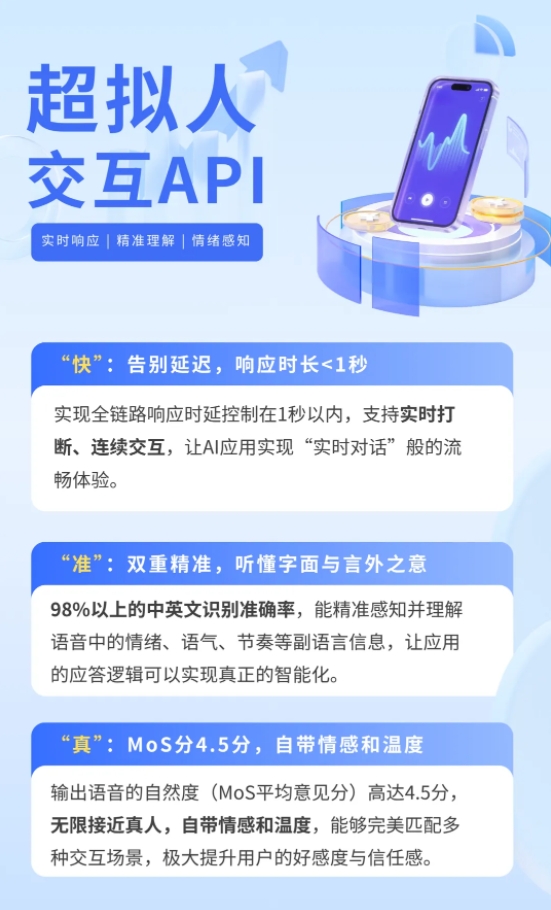

Traditional voice interaction systems use a serial "speech recognition → large-model processing → speech synthesis" architecture. This results in an average response time of more than 3 seconds, and because emotion is conveyed only through the recognized text, the pipeline struggles to capture paralinguistic cues such as tone and rhythm. The Spark Ultra-Human-like Interactive Technology instead adopts a unified neural network framework that models the whole path end to end, from speech to speech: an audio encoder extracts features from the speech signal, these features are aligned with text semantic representations, a multimodal large model predicts the output representation, and an audio decoder generates synthesized speech with natural emotion and precise rhythm. This design cuts interaction latency to under 0.5 seconds, upgrading the response mode from "you ask, I answer" to "real-time conversation."
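
To make the latency argument concrete, here is a minimal sketch of why a serial pipeline is slow: each stage must finish before the next one starts, so stage latencies add up. The stage names and millisecond values below are hypothetical placeholders chosen for illustration, not iFlytek measurements; only the "more than 3 seconds" and "within 0.5 seconds" figures come from the text.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One processing step in a voice-interaction pipeline (hypothetical)."""
    name: str
    latency_ms: int  # placeholder latency for this step, not a measurement


def serial_latency_ms(stages: list[Stage]) -> int:
    """In a serial pipeline each stage waits for the previous one to finish,
    so the total response time is the sum of the stage latencies."""
    return sum(stage.latency_ms for stage in stages)


# Hypothetical cascaded pipeline: speech recognition -> large model -> synthesis.
cascaded = [
    Stage("speech_recognition", 800),
    Stage("large_model", 1700),
    Stage("speech_synthesis", 700),
]

# Hypothetical end-to-end pipeline: one network maps speech directly to speech.
end_to_end = [Stage("speech_to_speech_model", 450)]

CASCADED_REPORTED_MS = 3000  # "more than 3 seconds" (from the text)
END_TO_END_TARGET_MS = 500   # "within 0.5 seconds" (from the text)

if __name__ == "__main__":
    print(f"cascaded:   {serial_latency_ms(cascaded)} ms")
    print(f"end-to-end: {serial_latency_ms(end_to_end)} ms")
```

This additive model deliberately ignores streaming and overlap between stages, which real systems may use; its only purpose is to show how every hand-off between separately run models adds to the wait.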

To achieve genuine emotional resonance, the technology team built a decoupled representation of multiple speech attributes, in which content, emotion, language, voice, and intonation are separated and trained individually. Using contrastive learning and masked prediction, the system can accurately identify emotions such as joy, anger, and anxiety in speech and automatically adjust its response strategy. For example, when a user anxiously asks for directions, the AI replies in a calm tone and quickly plans a route; when a user shares a funny story, it adopts a relaxed tone and extends the topic. Developers can also customize AI character settings through the API, giving characters specific values and language styles, or even having them interact in a simulated celebrity voice.
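
The behaviour described above can be pictured as a mapping from a detected emotion to a response style. The sketch below is purely illustrative: the `Emotion` labels, the `ResponseStyle` fields, and the `choose_style` function are hypothetical names invented for this example and are not part of the iFlytek API. The anxiety and joy rows mirror the two examples in the text; the anger row is an illustrative guess.

```python
from dataclasses import dataclass
from enum import Enum


class Emotion(Enum):
    """Emotion labels a recognizer might emit (hypothetical set)."""
    JOY = "joy"
    ANGER = "anger"
    ANXIETY = "anxiety"
    NEUTRAL = "neutral"


@dataclass(frozen=True)
class ResponseStyle:
    tone: str      # how the reply should sound
    strategy: str  # what the reply should prioritize


# Hypothetical policy table. ANXIETY and JOY follow the examples in the text
# (anxious request for directions, funny story); ANGER is illustrative only.
STYLE_POLICY: dict[Emotion, ResponseStyle] = {
    Emotion.ANXIETY: ResponseStyle(tone="calm", strategy="plan the route quickly"),
    Emotion.JOY: ResponseStyle(tone="relaxed", strategy="extend the topic"),
    Emotion.ANGER: ResponseStyle(tone="soothing", strategy="acknowledge, then resolve"),
}

# Fallback used for any emotion without an explicit entry (e.g. NEUTRAL).
DEFAULT_STYLE = ResponseStyle(tone="neutral", strategy="answer directly")


def choose_style(emotion: Emotion) -> ResponseStyle:
    """Return the response style for a detected emotion, with a neutral fallback."""
    return STYLE_POLICY.get(emotion, DEFAULT_STYLE)


if __name__ == "__main__":
    # A user anxiously asking for directions gets a calm, route-first reply.
    print(choose_style(Emotion.ANXIETY))
```

In the system the text describes, this behaviour presumably emerges from training rather than from a hand-written table; the table only makes the input-to-output relationship explicit.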

To lower the barrier to adoption, iFlytek has introduced tiered pricing: API calls cost as little as 0.1 yuan per minute, and certified enterprise users receive a free trial quota of 10 hours, valid for 3 months. Compared with traditional voice interaction systems, which require purchasing speech recognition, speech synthesis, and NLP as separate modules, the Spark Ultra-Human-like Interactive Technology reduces overall costs by more than 60%.
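
As a quick check on these numbers, the sketch below values the 10-hour free quota at the lowest quoted rate of 0.1 yuan per minute, and shows what a reduction of "more than 60%" implies: the new cost is at most 40% of the old one. It uses `fractions.Fraction` to avoid floating-point rounding. The function names and the 1,000-yuan baseline are hypothetical, and actual billing tiers may differ from this flat rate.

```python
from fractions import Fraction

# Lowest quoted rate from the text: 0.1 yuan per minute.
YUAN_PER_MINUTE_MIN = Fraction(1, 10)


def call_cost_yuan(minutes: int, rate: Fraction = YUAN_PER_MINUTE_MIN) -> Fraction:
    """Cost of `minutes` of API calls at a flat per-minute rate (simplified model)."""
    return minutes * rate


def max_cost_after_reduction(old_cost: Fraction,
                             reduction: Fraction = Fraction(60, 100)) -> Fraction:
    """Upper bound on the new cost when costs fall by at least `reduction`."""
    return old_cost * (1 - reduction)


free_quota_minutes = 10 * 60  # 10 hours of free trial quota
free_quota_value = call_cost_yuan(free_quota_minutes)  # 600 min * 0.1 yuan

if __name__ == "__main__":
    print(f"free quota worth at least {float(free_quota_value):.2f} yuan")
    # Hypothetical baseline: a traditional stack costing 1,000 yuan.
    print(f"new cost at most {float(max_cost_after_reduction(Fraction(1000))):.2f} yuan")
```

Because 0.1 yuan is the minimum rate, 60 yuan is a lower bound on the quota's value; at any higher tier the same 10 hours would be worth more.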