In deep learning, recurrent neural networks (RNNs) and Transformer models each have their own strengths. Linear recurrent models such as Mamba have recently begun to challenge the dominance of Transformers, largely because they process sequences efficiently: their cost grows only linearly with sequence length. They look especially promising for extremely long sequences, where Transformers run into limits that recurrent models, in principle, avoid.

Transformers are constrained by a fixed context window, and the cost of self-attention grows quadratically with sequence length, so long contexts quickly become expensive in both compute and memory. Linear recurrent models, by contrast, compress the history into a fixed-size state, so in principle they can process sequences of any length at a constant cost per token. In practice, however, their performance often drops sharply on sequences longer than those seen during training (that is, they fail to length generalize), which has limited their practical use.
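To make the cost contrast concrete, here is a minimal sketch of a diagonal linear recurrence, h_t = a * h_{t-1} + b * x_t (elementwise), in plain Python. It is a toy under stated assumptions: the decay `a` and input weight `b` are fixed per-channel constants, whereas Mamba's selective state-space layer makes them input-dependent. The function names are hypothetical. The point is only that the recurrent state stays at `d` numbers however long the input is, while causal self-attention computes a score for every (query, earlier key) pair.

```python
def linear_recurrence(xs, a, b, h0=None):
    """Toy diagonal linear recurrence: h_t = a * h_{t-1} + b * x_t (elementwise).

    xs: list of input vectors, each a list of d floats
    a, b: per-channel decay and input weights (lists of d floats)
    h0: optional initial state; defaults to all zeros
    Returns (states, final_state).
    """
    d = len(a)
    h = list(h0) if h0 is not None else [0.0] * d
    states = []
    for x in xs:
        # Each step reads and writes only the d-dimensional state: O(d) work per token
        h = [a[i] * h[i] + b[i] * x[i] for i in range(d)]
        states.append(h)
    return states, h


def causal_attention_pairs(T):
    """Number of (query, key) scores computed by causal self-attention over T tokens."""
    return T * (T + 1) // 2


if __name__ == "__main__":
    d = 4
    a, b = [0.9] * d, [1.0] * d
    for T in (1_000, 10_000):
        xs = [[1.0] * d for _ in range(T)]
        _, h = linear_recurrence(xs, a, b)
        # The state size stays d for any T; attention work grows roughly as T^2 / 2
        print(T, len(h), causal_attention_pairs(T))
```

Going from 1,000 to 10,000 tokens multiplies the recurrence's work by 10 but the attention score count by roughly 100, which is the scaling gap the paragraph describes.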

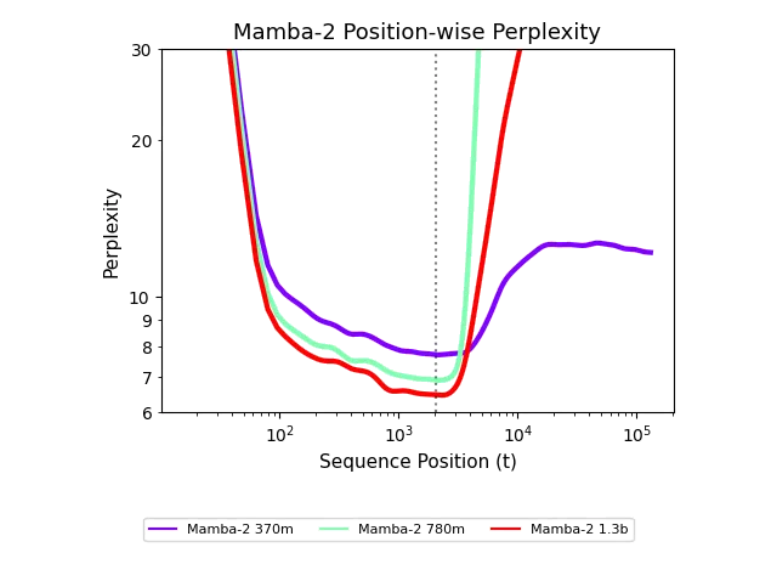

Researchers from Carnegie Mellon University and Cartesia AI have now proposed a way to improve the length generalization of recurrent models. They found that just 500 post-training steps with simple training interventions are enough for recurrent models to handle sequences as long as 256k tokens. The study suggests that poor length generalization is not a fundamental flaw of recurrent models; rather, their potential has not yet been fully exploited.

The research team introduced an explanatory framework called the "Unexplored States Hypothesis." It holds that during training, recurrent models are exposed to only a limited subset of the states they can reach, so on longer sequences they enter states they have never encountered and perform poorly. To achieve length generalization, the researchers proposed a set of training interventions, including Random Noise, Fitted Noise, and State Passing, which expose the model to a broader range of states while it is still trained on short sequences. With these interventions, the model generalizes to sequences far longer than those seen in training, and its performance improves significantly.
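The article does not spell out how the interventions work. As we understand the underlying paper, they change the recurrent state each training sequence starts from, so the model sees states it would otherwise only reach deep into a long input. The sketch below is a toy under that assumption: `sample_initial_state`, `run_sequence`, and `collect_final_states` are hypothetical names, the recurrence is a fixed diagonal one rather than Mamba's, the noise scale and decay values are arbitrary, and no gradient updates are shown, only the state handling.

```python
import random
import statistics


def sample_initial_state(strategy, d, rng, final_states=None, prev_final=None, noise_std=1.0):
    """Hypothetical helper: choose the initial recurrent state for one training sequence.

    "zeros"         - usual practice: every sequence starts from h_0 = 0
    "random_noise"  - i.i.d. Gaussian noise with a fixed std (assumed hyperparameter)
    "fitted_noise"  - Gaussian noise whose per-channel mean/std are fitted to
                      final states collected from earlier sequences
    "state_passing" - reuse the final state of the previous sequence
    """
    if strategy == "zeros":
        return [0.0] * d
    if strategy == "random_noise":
        return [rng.gauss(0.0, noise_std) for _ in range(d)]
    if strategy == "fitted_noise":
        cols = [[s[i] for s in final_states] for i in range(d)]
        return [rng.gauss(statistics.fmean(c), statistics.pstdev(c)) for c in cols]
    if strategy == "state_passing":
        return list(prev_final)
    raise ValueError(f"unknown strategy: {strategy}")


def run_sequence(xs, a, b, h0):
    """Toy diagonal linear recurrence h_t = a * h_{t-1} + b * x_t; returns the final state."""
    h = list(h0)
    for x in xs:
        h = [ai * hi + bi * xi for ai, hi, bi, xi in zip(a, h, b, x)]
    return h


def collect_final_states(strategy, n_seqs=8, T=16, d=3, seed=0):
    """Simulate the state-handling part of a training loop over short sequences."""
    rng = random.Random(seed)
    a, b = [0.95] * d, [1.0] * d
    finals, prev = [], [0.0] * d
    for _ in range(n_seqs):
        xs = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(T)]
        # Before any final states exist, fit the noise to a single all-zero state
        h0 = sample_initial_state(strategy, d, rng,
                                  final_states=finals or [[0.0] * d],
                                  prev_final=prev)
        prev = run_sequence(xs, a, b, h0)
        finals.append(prev)
    return finals


if __name__ == "__main__":
    for s in ("zeros", "random_noise", "fitted_noise", "state_passing"):
        print(s, collect_final_states(s)[-1])
```

With `"state_passing"`, each short sequence starts where the previous one ended, so the states the model sees resemble those much later in a long input; the noise-based options instead approximate such states without chaining sequences together.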

Notably, these interventions not only improve performance but also keep the model's state stable, allowing recurrent models to perform well on long-context tasks. The researchers validated the methods in a series of experiments, opening up new directions for the development of recurrent models.