Alibaba Group has officially launched HumanOmniV2, its latest multimodal large language model, drawing wide attention in the AI field. With its global context understanding and multimodal reasoning capabilities, the model marks a notable advance in Alibaba's artificial intelligence technology.

Core Capabilities: Global Context Understanding and Multimodal Reasoning

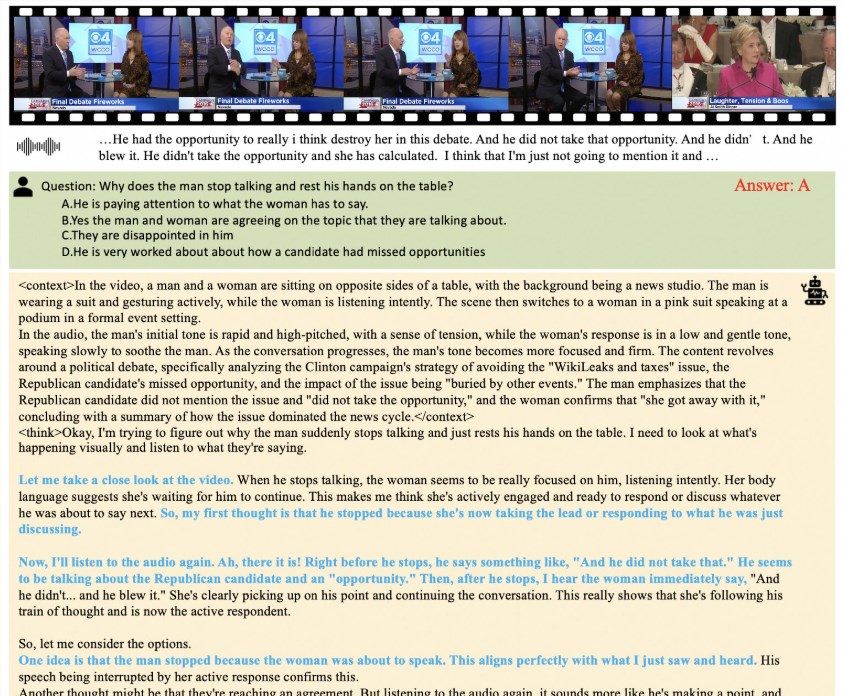

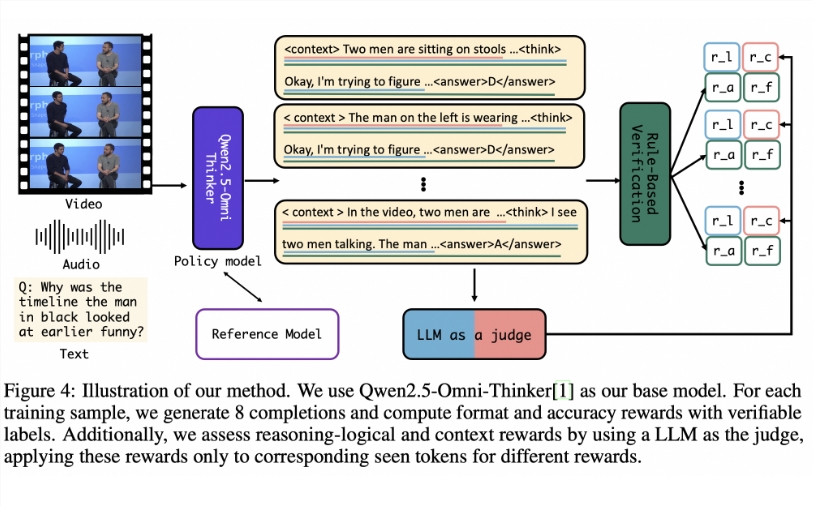

The main highlight of HumanOmniV2 is its mandatory context summarization mechanism: the model must summarize the full multimodal context before it reasons, which improves its ability to understand complex scenarios. Compared with traditional large language models, HumanOmniV2 integrates text, images, and other modalities more deeply. This addresses the "shortcut problem" that models often show on complex tasks, where an answer is produced from partial cues rather than from the whole input, and leads to more accurate intent understanding and reasoning output.
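To make the idea of a mandatory context summary concrete, here is a minimal sketch of how a caller might check that a response summarizes the context before it reasons and answers. The tag names `<context>`, `<think>`, and `<answer>`, and the helper `parse_response`, are assumptions made for illustration only; the article does not describe the model's actual output format.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical section tags, assumed for this sketch only.
# The pattern requires the order: context summary, then reasoning, then answer.
SECTION_PATTERN = re.compile(
    r"\s*<context>(?P<context>.+?)</context>\s*"
    r"<think>(?P<think>.+?)</think>\s*"
    r"<answer>(?P<answer>.+?)</answer>\s*",
    re.DOTALL,
)


@dataclass
class StructuredResponse:
    context: str    # summary of the full multimodal input
    reasoning: str  # reasoning that follows the summary
    answer: str     # final answer


def parse_response(text: str) -> Optional[StructuredResponse]:
    """Return the parsed sections, or None if the context summary is missing."""
    match = SECTION_PATTERN.fullmatch(text)
    if match is None:
        return None
    context = match.group("context").strip()
    if not context:
        # An empty summary does not satisfy the "mandatory" requirement.
        return None
    return StructuredResponse(
        context=context,
        reasoning=match.group("think").strip(),
        answer=match.group("answer").strip(),
    )
```

A response that skips the summary and goes straight to reasoning is rejected, which mirrors the intent of forcing the model to account for the whole input first.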

HumanOmniV2 has performed well on several benchmarks. According to public information, the model achieved an accuracy of 58.47% on the Daily-Omni dataset, 47.1% on the WorldSense dataset, and 69.33% on IntentBench, a benchmark developed by Alibaba. These results suggest that HumanOmniV2 has clear strengths in handling daily conversations, complex scenario perception, and user intent understanding.

Technological Innovation: Breaking Through Traditional Model Limitations

The development of HumanOmniV2 was led by Alibaba's Tongyi Lab, with a focus on improving performance in multimodal tasks. Traditional models often produce skewed output when processing cross-modal information because they lack global context. HumanOmniV2 introduces a new context summarization mechanism so that the model analyzes all of the information in the input before responding, producing results that better match user intent. This gives it strong potential in consumer scenarios (such as smart customer service and content creation) as well as enterprise applications (such as intelligent decision-making systems).

HumanOmniV2 also supports multiple languages, including Chinese and English, which broadens its international applicability and makes it more competitive in the global AI market.

Industry Impact: Redefining the Boundaries of AI Applications

With the rise of Chinese AI companies like DeepSeek, Alibaba is further solidifying its position in the global AI field through HumanOmniV2. Social media discussions show that the release has been warmly received, and industry experts believe its multimodal reasoning capabilities will deepen AI adoption in fields such as education, healthcare, and finance. For example, HumanOmniV2 could be used to generate high-quality AI video content, or to assist doctors in analyzing complex cases in smart medical scenarios.

At the same time, Alibaba's frequent activity in the AI field has attracted attention. From the Qwen series to Wan2.1VACE, and now HumanOmniV2, Alibaba is accelerating the build-out of its AI ecosystem and aiming to seize market opportunities through a strategy that combines open source with commercialization. Competition is intense, however, with AI models from companies like Huawei and Baidu also developing rapidly. The subsequent performance of HumanOmniV2 is worth watching closely.

The release of HumanOmniV2 reflects Alibaba's technical strength and highlights the rise of China's AI industry in global competition. AIbase analysis suggests that as multimodal AI technology continues to mature, HumanOmniV2 is expected to become an important force in advancing industry standards. In the future, Alibaba may open source more related technologies, attracting more developers to its AI ecosystem to explore the possibilities of multimodal AI together.

GitHub: https://github.com/HumanMLLM/HumanOmniV2

Hugging Face: https://huggingface.co/PhilipC/HumanOmniV2