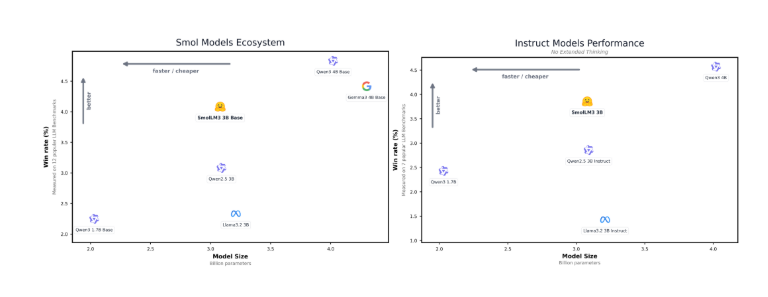

Hugging Face, the widely used open platform for AI models, has released SmolLM3, a new open-source language model with about 3 billion parameters. Despite its relatively small size, Hugging Face's evaluations show it outperforming comparable open models such as Llama-3.2-3B and Qwen2.5-3B.

SmolLM3 supports a context window of up to 128K tokens and handles multiple languages, including English, French, Spanish, and German. It marks another notable step forward for small language models.

Two Reasoning Modes for Different Needs

SmolLM3 offers two reasoning modes, a deep-thinking mode and a non-thinking mode, and users can switch between them depending on the task. In thinking mode the model works through intermediate reasoning before answering, which helps with complex problems that call for in-depth analysis. Non-thinking mode answers directly when that extra reasoning is unnecessary.
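
Hugging Face's SmolLM3 materials describe toggling the mode with a `/think` or `/no_think` flag in the system prompt. Treat these flag names as an assumption to verify against the current model card. The helper below is a hypothetical sketch. It only assembles a chat message list in the common role/content format and does not call the model. Appending the flag after any existing system text is also an assumption.

```python
from typing import Dict, List

# Flag names as described for SmolLM3; verify against the current model card.
THINK_FLAG = "/think"
NO_THINK_FLAG = "/no_think"


def build_messages(user_prompt: str, deep_thinking: bool,
                   system_prompt: str = "") -> List[Dict[str, str]]:
    """Build chat messages that select the reasoning mode.

    build_messages is a hypothetical helper, not part of any library.
    The mode flag is appended to the (possibly empty) system prompt.
    """
    flag = THINK_FLAG if deep_thinking else NO_THINK_FLAG
    system = f"{system_prompt}\n{flag}".strip()
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Same question, once with deep thinking and once without.
    for mode in (True, False):
        print(build_messages("What is 17 * 23?", deep_thinking=mode))
```

The resulting list is the kind of input a Transformers tokenizer's `apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` expects.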

Open Architecture and Training Details for Research and Optimization

Notably, Hugging Face has published SmolLM3's architectural details, data mixtures, and training process. This openness makes it easier for developers and researchers to study and optimize the model, and it should help advance open-source AI models more broadly.

Model Architecture and Training Configuration

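SmolLM3 uses a transformer decoder architecture. It builds on the design of SmolLM2 and makes key modifications to the Llama architecture to improve efficiency and long-context performance. Specifically, the model uses grouped-query attention (GQA), in which several query heads share each key/value head to shrink the KV cache. It also uses document-level (intra-document) masking, which stops tokens from attending across document boundaries when several documents are packed into one training sequence. Both choices help keep long-context training effective.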
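
The two techniques named above can be illustrated with a small, dependency-free sketch. It is not SmolLM3's implementation. Head counts and dimensions are illustrative, real models use batched tensor operations, and `intra_document_causal_mask` and `gqa_attention` are hypothetical helper names. The mask allows attention only to earlier or current tokens of the same document. The attention function maps each query head to a shared key/value head.

```python
import math
from typing import List

Matrix = List[List[float]]


def intra_document_causal_mask(doc_ids: List[int]) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j: j must not be
    in the future and must belong to the same packed document as i."""
    n = len(doc_ids)
    return [[j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
            for i in range(n)]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # finite: a token can always attend to itself
    exps = [math.exp(x - m) for x in xs]  # exp(-inf) == 0.0 for masked slots
    total = sum(exps)
    return [e / total for e in exps]


def gqa_attention(q: List[Matrix], k: List[Matrix], v: List[Matrix],
                  mask: List[List[bool]]) -> List[Matrix]:
    """Grouped-query attention over one sequence.

    q has shape [n_q_heads][seq][d]; k and v have shape [n_kv_heads][seq][d].
    Each group of n_q_heads // n_kv_heads query heads shares one K/V head,
    so fewer K/V tensors need to be cached than in multi-head attention.
    """
    n_q, n_kv = len(q), len(k)
    assert n_q % n_kv == 0, "query heads must split evenly into KV groups"
    group_size = n_q // n_kv
    d = len(q[0][0])
    out = []
    for h in range(n_q):
        kv = h // group_size  # shared K/V head used by this query head
        head_out = []
        for i, qi in enumerate(q[h]):
            # Scaled dot-product scores; disallowed positions get -inf.
            scores = [sum(a * b for a, b in zip(qi, k[kv][j])) / math.sqrt(d)
                      if mask[i][j] else float("-inf")
                      for j in range(len(k[kv]))]
            weights = softmax(scores)
            head_out.append([sum(w * v[kv][j][c] for j, w in enumerate(weights))
                             for c in range(len(v[kv][0]))])
        out.append(head_out)
    return out
```

With `doc_ids = [0, 0, 1]`, the third token starts a new document, so its mask row is `[False, False, True]`. It therefore attends only to itself, regardless of what the first document contains.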

In terms of training configuration, SmolLM3 has 3.08B parameters and 36 layers and was trained with the AdamW optimizer. Distributed training took 24 days.
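
The article only names the optimizer. As a reminder of what AdamW does, here is a minimal scalar sketch of one update step following the published algorithm (Loshchilov and Hutter, "Decoupled Weight Decay Regularization"). The default hyperparameters are common library defaults, not SmolLM3's training values, and `adamw_step` is a hypothetical helper name.

```python
import math
from typing import List, Tuple


def adamw_step(params: List[float], grads: List[float], m: List[float],
               v: List[float], t: int, lr: float = 1e-3,
               betas: Tuple[float, float] = (0.9, 0.999), eps: float = 1e-8,
               weight_decay: float = 0.01):
    """One AdamW update on a flat list of parameters.

    Weight decay is applied directly to the weights ("decoupled") instead
    of being added to the gradient as in Adam with L2 regularization.
    t is the 1-based step count used for bias correction.
    Returns new (params, m, v) lists.
    """
    b1, b2 = betas
    new_p, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = b1 * mi + (1 - b1) * g        # first moment (momentum)
        vi = b2 * vi + (1 - b2) * g * g    # second moment
        m_hat = mi / (1 - b1 ** t)         # bias-corrected estimates
        v_hat = vi / (1 - b2 ** t)
        p = p - lr * weight_decay * p      # decoupled weight decay
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)  # adaptive step
        new_p.append(p)
        new_m.append(mi)
        new_v.append(vi)
    return new_p, new_m, new_v
```

On the first step the bias-corrected ratio `m_hat / sqrt(v_hat)` is about `sign(g)`, so each parameter moves by roughly `lr`. With a zero gradient, the weights still shrink by the factor `1 - lr * weight_decay`, which is the decoupling at work.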

Three-Stage Mixed-Data Training for Stronger Capabilities

Training proceeds in three stages, each mixing several types of data. In the first stage, the model builds general capabilities from a mix of web, math, and code data. The second stage introduces higher-quality math and code data. In the third stage, math and code data are upsampled further, strengthening the model's reasoning and instruction-following abilities.
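
To make the idea of a stage-dependent data mixture concrete, the sketch below switches sampling weights based on how many training tokens have been seen. The stage boundaries and weights are hypothetical placeholders, not the values Hugging Face published. `STAGES`, `mixture_at`, and `sample_sources` are illustrative names.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical schedule: (stage end in billions of tokens, mixture weights).
# Illustrative numbers only; NOT SmolLM3's published data mixture.
STAGES: List[Tuple[float, Dict[str, float]]] = [
    (8_000, {"web": 0.80, "code": 0.15, "math": 0.05}),   # stage 1: general
    (10_000, {"web": 0.70, "code": 0.20, "math": 0.10}),  # stage 2: better math/code
    (11_000, {"web": 0.60, "code": 0.25, "math": 0.15}),  # stage 3: upsampled math/code
]


def mixture_at(tokens_seen: float) -> Dict[str, float]:
    """Return the mixture weights for the stage containing tokens_seen."""
    for stage_end, weights in STAGES:
        if tokens_seen < stage_end:
            return weights
    return STAGES[-1][1]  # keep the final mixture past the last boundary


def sample_sources(tokens_seen: float, n: int, seed: int = 0) -> List[str]:
    """Draw n data-source labels according to the current stage's weights."""
    weights = mixture_at(tokens_seen)
    rng = random.Random(seed)  # seeded for reproducible draws
    return rng.choices(list(weights), weights=list(weights.values()), k=n)
```

In a real pipeline each label would select the next batch of documents from the corresponding dataset. The design point is that the mixture is a function of training progress, so later stages can emphasize math and code without changing the rest of the training loop.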

With the release of SmolLM3, Hugging Face further strengthens its position in open-source AI. The model pairs solid reasoning ability with the efficiency of a small model, giving developers a practical option for a wide range of applications. As open research and community collaboration continue, SmolLM3 is likely to find use in a growing number of scenarios.

Base Model: https://huggingface.co/HuggingFaceTB/SmolLM3-3B-Base

Reasoning and Instruction-Following Model: https://huggingface.co/HuggingFaceTB/SmolLM3-3B