Recently, the Alibaba Speech AI team announced the open-source release of ThinkSound, which it describes as the world's first audio generation model to support chain-of-thought (CoT) reasoning. By applying chain-of-thought reasoning, the model overcomes the difficulty that traditional video-to-audio methods have in capturing dynamic visual content. It generates high-fidelity spatial audio that is tightly synchronized with the video. The team presents this as a leap in AI audio technology from simply "dubbing sound onto images" to "structurally understanding the visuals."

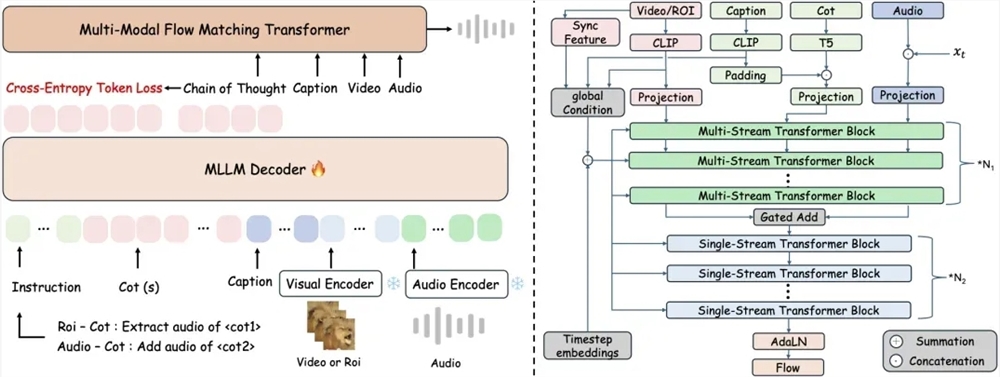

Traditional end-to-end video-to-audio methods often neglect the spatiotemporal correlation between visual details and sound, so the generated audio can fall out of alignment with on-screen events. According to the team, ThinkSound is the first model to combine a multimodal large language model with a unified audio generation architecture. It synthesizes accurate audio through a three-stage reasoning process. First, the system analyzes the overall motion and scene semantics of the video and produces a structured reasoning chain. Next, it focuses on the regions of specific sound-producing objects and refines their sound characteristics based on semantic descriptions. Finally, it supports real-time interactive editing through natural-language instructions such as "add rustling leaves after the bird calls" or "remove background noise."
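The announcement describes the three stages only at a high level. The following is a hypothetical sketch of how a structured reasoning chain could pass through scene analysis, object focus, and instruction-based editing. It is not ThinkSound's code or API. All class and function names here are invented for illustration, and hand-written detections stand in for the multimodal model. The sketch also assumes that natural-language instructions such as "add rustling leaves after the bird calls" have already been parsed into structured edit operations.

```python
from dataclasses import dataclass, field, replace


# Hypothetical data model: one sound event inferred from the video.
@dataclass(frozen=True)
class SoundEvent:
    source: str     # sounding object, e.g. "bird"
    sound: str      # sound description, e.g. "calls"
    start_s: float  # onset time in seconds within the clip


# Hypothetical structured reasoning chain: scene semantics plus timed events.
@dataclass(frozen=True)
class ReasoningChain:
    scene: str
    events: tuple[SoundEvent, ...] = field(default_factory=tuple)

    def to_prompt(self) -> str:
        # Flatten the chain into text that could condition an audio generator.
        lines = [f"Scene: {self.scene}"]
        lines += [f"{e.start_s:.1f}s: {e.source} {e.sound}" for e in self.events]
        return "\n".join(lines)


def stage1_scene(scene: str, detections: list[SoundEvent]) -> ReasoningChain:
    """Stage 1 (sketch): order detected events in time to form the chain."""
    return ReasoningChain(scene, tuple(sorted(detections, key=lambda e: e.start_s)))


def stage2_focus(chain: ReasoningChain, source: str) -> ReasoningChain:
    """Stage 2 (sketch): keep only the events produced by one object of interest."""
    return replace(chain, events=tuple(e for e in chain.events if e.source == source))


def stage3_add_after(chain: ReasoningChain, new_source: str, new_sound: str,
                     after_source: str, gap_s: float = 0.5) -> ReasoningChain:
    """Stage 3 (sketch): add an event shortly after the last event of `after_source`."""
    anchors = [e.start_s for e in chain.events if e.source == after_source]
    if not anchors:
        raise ValueError(f"no event from {after_source!r} to anchor on")
    new_event = SoundEvent(new_source, new_sound, max(anchors) + gap_s)
    # Re-run stage 1 so the chain stays in chronological order.
    return stage1_scene(chain.scene, list(chain.events) + [new_event])


def stage3_remove(chain: ReasoningChain, source: str) -> ReasoningChain:
    """Stage 3 (sketch): drop every event produced by `source`."""
    return replace(chain, events=tuple(e for e in chain.events if e.source != source))


# Usage: "add rustling leaves after the bird calls", then "remove background noise".
detections = [
    SoundEvent("wind", "background noise", 0.0),
    SoundEvent("bird", "calls", 1.2),
    SoundEvent("car", "engine hum", 0.4),
]
chain = stage1_scene("park at dawn", detections)
edited = stage3_remove(stage3_add_after(chain, "leaves", "rustling", "bird"), "wind")
print(edited.to_prompt())
```

Each stage returns a new immutable chain instead of mutating the previous one. This keeps every intermediate step inspectable, which loosely mirrors the idea of an explicit, step-by-step reasoning trace.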

To support this structured reasoning, the research team built AudioCoT, a multimodal dataset containing 2,531.8 hours of high-quality samples. It draws on real-world audio from sources such as VGGSound and AudioSet and covers sounds ranging from animal calls to operating machinery. Quality is ensured through multi-stage automated filtering plus manual spot checks. The team also designed object-level and instruction-level samples so that the model can handle complex instructions such as "extract the owl calls while avoiding wind interference."

Experimental results show that ThinkSound improves core metrics by more than 15% over mainstream methods on the VGGSound test set. It also significantly outperforms Meta's comparable model on the MovieGen Audio Bench test set. The model's code and pre-trained weights are now open source on GitHub, Hugging Face, and the ModelScope community, where developers can access them for free.

The Alibaba Speech AI team said that future work will focus on strengthening the model's understanding of complex acoustic environments and extending it to immersive scenarios such as game development and virtual reality. The technology gives film and television sound-effects production and audio post-processing a new tool, and it may also redefine the boundaries of sound experiences in human-computer interaction. Industry experts note that the open-source release of ThinkSound should make audio generation technology more widely accessible and push the creator economy in a more intelligent direction.

Open-source links:

- GitHub: https://github.com/FunAudioLLM/ThinkSound
- Hugging Face: https://huggingface.co/spaces/FunAudioLLM/ThinkSound
- ModelScope: https://www.modelscope.cn/studios/iic/ThinkSound