A research team from NVIDIA, the University of Hong Kong, and MIT recently released Fast-dLLM, a technique aimed at improving the inference efficiency of diffusion language models. Unlike traditional autoregressive models, which emit one token at a time, diffusion language models generate text by iteratively removing noise from a sequence, so several tokens can be produced in a single iteration and generation can, in principle, be more efficient. In practice, however, the inference speed of many open-source diffusion language models still lags behind that of autoregressive models, mainly because they lack key-value (KV) cache support and because generation quality declines during parallel decoding.

KV caching is an acceleration technique commonly used in autoregressive models: it stores and reuses previously computed attention keys and values, which reduces redundant computation and speeds up generation. Because diffusion language models use bidirectional attention, however, KV caching cannot be applied to them directly. Fast-dLLM addresses this by dividing the text generation process into multiple blocks, each containing a certain number of tokens. Before generating a block, the model precomputes and stores the KV cache of the other blocks, then reuses it while decoding the current block, thus avoiding redundant calculations.
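The block-wise caching idea can be illustrated with a toy sketch. It is not the Fast-dLLM implementation: `CountingKVProjector`, `decode_blockwise`, and the placeholder token strings are hypothetical names invented for this illustration, and the "projection" only counts calls rather than computing real tensors. The sketch compares how many key/value computations are needed when the entries for positions outside the active block are computed once per block versus recomputed at every denoising step.

```python
MASK = "<mask>"  # hypothetical placeholder for a not-yet-decoded position


def split_into_blocks(seq_len, block_size):
    """Return (start, end) index pairs covering the sequence block by block."""
    return [(s, min(s + block_size, seq_len)) for s in range(0, seq_len, block_size)]


class CountingKVProjector:
    """Stand-in for a model's key/value projection; it only counts its calls."""

    def __init__(self):
        self.calls = 0

    def project(self, token):
        self.calls += 1
        return (("k", token), ("v", token))  # placeholder for real K/V tensors


def decode_blockwise(seq_len, block_size, steps_per_block, projector, use_cache=True):
    """Toy block-wise decoding loop (assumed structure, not the paper's code)."""
    tokens = [MASK] * seq_len
    for start, end in split_into_blocks(seq_len, block_size):
        outside_kv = None
        for _ in range(steps_per_block):
            # K/V for positions outside the active block: computed once per
            # block when caching is on, recomputed every step when it is off.
            if outside_kv is None or not use_cache:
                outside_kv = {
                    i: projector.project(tokens[i])
                    for i in range(seq_len)
                    if not start <= i < end
                }
            # K/V for the active block changes as tokens are unmasked,
            # so it is recomputed at every step.
            inside_kv = {i: projector.project(tokens[i]) for i in range(start, end)}
            # A real model would now attend over outside_kv and inside_kv
            # and unmask some tokens in the active block.
        for i in range(start, end):
            tokens[i] = f"tok{i}"  # pretend the block is fully decoded
    return tokens


cached, uncached = CountingKVProjector(), CountingKVProjector()
decode_blockwise(8, 4, 3, cached, use_cache=True)
decode_blockwise(8, 4, 3, uncached, use_cache=False)
print(cached.calls, uncached.calls)
```

In this toy setting the saving grows with the number of denoising steps per block and with the share of the sequence that lies outside the active block. Note that with bidirectional attention, reusing the outside entries across steps is an approximation, since in a real model those states can depend on the tokens being decoded.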

Although the KV cache mechanism effectively improves inference speed, generation quality often declines during parallel decoding. This is because parallel decoding treats the tokens it generates together as conditionally independent, whereas there may be complex dependencies between them. To address this issue, Fast-dLLM proposes a confidence-based parallel decoding strategy. At each decoding step, the model calculates a confidence score for each token and decodes only those tokens whose confidence is above a threshold. Restricting parallel decoding to high-confidence tokens helps maintain the coherence and accuracy of the generated text.
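A minimal sketch of one confidence-thresholded decoding step follows. It rests on several assumptions that are not stated in the article: confidence is taken to be the largest softmax probability at a position, `MASK` is represented as `None`, the function name `confidence_decode_step` and the threshold value of 0.9 are made up for illustration, and when no position clears the threshold the single most confident one is decoded so that the loop always makes progress.

```python
import math

MASK = None  # hypothetical marker for a still-masked position


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_decode_step(tokens, logits_per_position, threshold=0.9):
    """Unmask every masked position whose top probability reaches the threshold.

    Assumption: if no masked position qualifies, the most confident one is
    unmasked anyway, so each step decodes at least one token.
    """
    candidates = []
    for pos, tok in enumerate(tokens):
        if tok is not MASK:
            continue  # already decoded
        probs = softmax(logits_per_position[pos])
        best_id = max(range(len(probs)), key=probs.__getitem__)
        candidates.append((probs[best_id], pos, best_id))

    if not candidates:
        return list(tokens)  # nothing left to decode

    accepted = [c for c in candidates if c[0] >= threshold]
    if not accepted:
        accepted = [max(candidates)]  # fallback: single most confident token

    out = list(tokens)
    for _, pos, best_id in accepted:
        out[pos] = best_id
    return out


# Toy logits for a 4-position sequence over a 3-token vocabulary.
logits = [[0, 0, 0], [10, 0, 0], [1, 1, 1], [0, 5, 0]]
step1 = confidence_decode_step([5, MASK, MASK, MASK], logits)
step2 = confidence_decode_step(step1, logits)
print(step1, step2)
```

In the example, positions 1 and 3 have sharply peaked distributions and are decoded together in the first step, while the uncertain position 2 is deferred. In a real model the logits would be recomputed before the second step, conditioned on the newly decoded tokens; the sketch reuses them only to stay self-contained.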

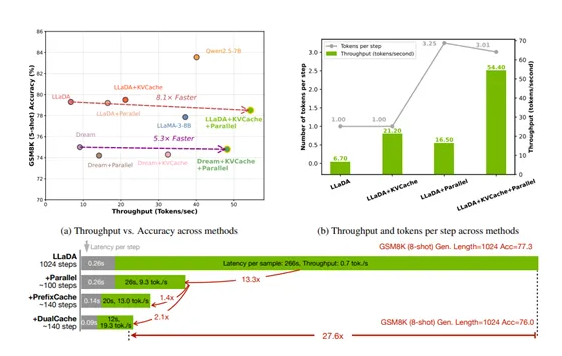

To verify the performance of Fast-dLLM, the researchers evaluated two diffusion language models, LLaDA and Dream, on an NVIDIA A100 80GB GPU, covering tasks such as mathematical reasoning and code generation. In the KV cache test, with a block size of 32, the model's throughput reached 54.4 tokens/s at an accuracy of 78.5%. In the parallel decoding test, the dynamic threshold strategy outperformed a baseline that decodes a fixed number of tokens. Overall, the LLaDA model achieved a 3.2x speedup with the KV cache alone and a 2.5x speedup with parallel decoding alone, and an 8.1x speedup when the two were combined. When the generation length reached 1024, the end-to-end speedup was as high as 27.6x. Across the reported tests, Fast-dLLM maintained stable generation quality while accelerating inference.

Key Points:

🌟 Fast-dLLM was developed by NVIDIA, the University of Hong Kong, and MIT to improve the inference speed of diffusion language models.

⚡ The KV cache mechanism speeds up generation by storing and reusing attention states, significantly reducing redundant computation.

📈 The confidence-based parallel decoding strategy decodes only high-confidence tokens in parallel, helping preserve the coherence and accuracy of the generated text while improving speed.