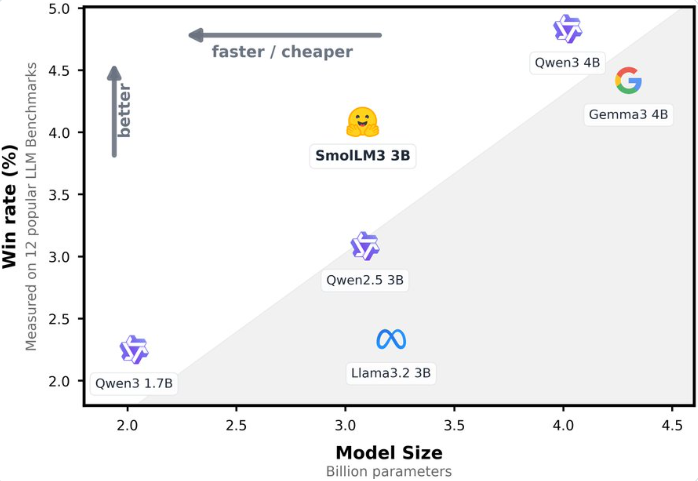

Hugging Face has released SmolLM3, a new open-source, lightweight large language model (LLM) with 3B parameters that has drawn wide industry attention for its strong performance and efficient design. On multiple benchmarks, SmolLM3 outperforms similarly sized models such as Llama-3.2-3B and Qwen2.5-3B, and it performs comparably to larger 4B-parameter models such as Gemma3.

Link: https://huggingface.co/blog/smollm3

3B Parameters, Performance Close to 4B Models

SmolLM3 is a decoder-only Transformer model with 3B parameters. It uses Grouped Query Attention (GQA) and NoPE (omitting rotary position embeddings in some layers) to keep inference efficient and improve long-context handling. The model was pre-trained on 11.2 trillion tokens drawn from a diverse mix of web pages, code, math, and reasoning data, which underpins its performance in knowledge, reasoning, math, and coding. According to Hugging Face's reported results, SmolLM3 ranks at or near the top on knowledge and reasoning benchmarks such as HellaSwag, ARC, and BoolQ, and remains competitive with 4B-parameter models such as Qwen3-4B and Gemma3-4B, showing how much capability a small model can deliver.
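
To make the GQA idea concrete, here is a minimal Python sketch of how several query heads are mapped onto one shared key/value head, together with a layer schedule in which some layers skip rotary position embeddings (NoPE). The head counts, the layer count, and the "every fourth layer" NoPE schedule are illustrative assumptions, not confirmed SmolLM3 settings.

```python
# Hypothetical sizes for illustration only; not SmolLM3's published configuration.
NUM_QUERY_HEADS = 16
NUM_KV_HEADS = 4
NUM_LAYERS = 36
NOPE_EVERY = 4  # assumption: every 4th layer omits rotary position embeddings


def kv_head_for_query_head(q_head: int, n_q: int = NUM_QUERY_HEADS, n_kv: int = NUM_KV_HEADS) -> int:
    """In GQA, each block of consecutive query heads shares one key/value head."""
    if n_q % n_kv != 0:
        raise ValueError("number of query heads must be a multiple of KV heads")
    group_size = n_q // n_kv
    return q_head // group_size


def layer_uses_rope(layer: int, every: int = NOPE_EVERY) -> bool:
    """Return False for layers treated as NoPE layers under the assumed schedule."""
    # Layers are 0-indexed, so layers 3, 7, 11, ... skip RoPE.
    return (layer + 1) % every != 0


if __name__ == "__main__":
    groups = {}
    for q in range(NUM_QUERY_HEADS):
        groups.setdefault(kv_head_for_query_head(q), []).append(q)
    print("query heads per KV head:", groups)
    nope_layers = [l for l in range(NUM_LAYERS) if not layer_uses_rope(l)]
    print("NoPE layers:", nope_layers)  # [3, 7, 11, 15, 19, 23, 27, 31, 35]
```

With 16 query heads and 4 KV heads, each KV head serves four query heads, which is what shrinks the key/value state the model must keep around during generation.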

Dual-mode Inference, Flexible for Diverse Tasks

SmolLM3 supports two inference modes: "thinking" (think) and "non-thinking" (no-think). With thinking enabled, the model scores markedly higher on complex tasks, for example AIME 2025 (36.7% vs 9.3%), LiveCodeBench (30.0% vs 15.2%), and GPQA Diamond (41.7% vs 35.7%). Because the mode can be chosen per request, users can trade speed for deeper reasoning as the task requires, covering everything from quick question answering to complex problem solving.
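
As a rough illustration of how an application might pick a mode per request, the sketch below builds a chat message list with a "/think" or "/no_think" flag in the system prompt. Treat that flag convention as an assumption to verify against the SmolLM3 model card; `build_messages` and the `choose_mode` heuristic are hypothetical helpers. The list uses the role/content message format that chat templates (for example via `tokenizer.apply_chat_template` in transformers) consume.

```python
from typing import Dict, List


def build_messages(user_prompt: str, thinking: bool, system_prompt: str = "") -> List[Dict[str, str]]:
    # Assumption: the mode is selected with a "/think" or "/no_think" flag in the
    # system prompt; verify this convention against the SmolLM3 model card.
    flag = "/think" if thinking else "/no_think"
    system = f"{system_prompt} {flag}".strip()
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_prompt},
    ]


def choose_mode(task: str) -> bool:
    # Hypothetical heuristic: turn on thinking only for multi-step reasoning tasks.
    reasoning_tasks = {"math", "competitive_coding", "science_qa"}
    return task in reasoning_tasks


if __name__ == "__main__":
    quick = build_messages("What is the capital of France?", thinking=choose_mode("trivia"))
    hard = build_messages("How many primes are below 100?", thinking=choose_mode("math"))
    print(quick[0])  # {'role': 'system', 'content': '/no_think'}
    print(hard[0])   # {'role': 'system', 'content': '/think'}
```

Routing only reasoning-heavy requests to thinking mode keeps short answers fast while reserving the longer reasoning traces for tasks like the AIME-style problems where they pay off.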

Supports 128K Context, Seamless Switching Between Six Languages

SmolLM3 handles long contexts well: it was trained with a 64K-token context window and can be extended to 128K tokens using YaRN, and it shows strong long-sequence performance on the RULER 64K benchmark. The model natively supports six languages (English, French, Spanish, German, Italian, and Portuguese) and was also trained on a small amount of Arabic, Chinese, and Russian data. On multilingual benchmarks such as Global MMLU and Flores-200, SmolLM3 ranks among the top models of its size, making it a practical option for applications that serve users in multiple languages.
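
YaRN extends a RoPE model's context by rescaling its rotary frequencies unevenly: low-frequency dimensions are interpolated by the extension factor, high-frequency dimensions are left unchanged, and a small temperature correction is applied to attention. The sketch below follows the formulation in the YaRN paper for a 64K-to-128K extension (factor 2). The RoPE base, head dimension, and ramp bounds are assumptions for illustration; SmolLM3's actual settings should be taken from its model card.

```python
import math
from typing import List

# Only the 64K -> 128K ratio comes from the text; the rest are assumed values.
TRAINED_CONTEXT = 65_536
TARGET_CONTEXT = 131_072
ROPE_BASE = 10_000.0     # hypothetical RoPE base
HEAD_DIM = 128           # hypothetical per-head dimension
ALPHA, BETA = 1.0, 32.0  # ramp bounds suggested in the YaRN paper for Llama models


def rope_frequencies(base: float = ROPE_BASE, dim: int = HEAD_DIM) -> List[float]:
    """Standard RoPE angular frequencies theta_d = base^(-2d/dim)."""
    return [base ** (-2 * d / dim) for d in range(dim // 2)]


def yarn_frequencies(scale: float, original_ctx: int = TRAINED_CONTEXT) -> List[float]:
    """YaRN 'NTK-by-parts' interpolation of the RoPE frequencies."""
    out = []
    for theta in rope_frequencies():
        wavelength = 2 * math.pi / theta
        r = original_ctx / wavelength  # full rotations within the trained context
        if r < ALPHA:
            gamma = 0.0  # very low frequency: fully interpolate (theta / scale)
        elif r > BETA:
            gamma = 1.0  # high frequency: keep theta unchanged
        else:
            gamma = (r - ALPHA) / (BETA - ALPHA)  # linear ramp in between
        out.append((1 - gamma) * theta / scale + gamma * theta)
    return out


def yarn_attention_scale(scale: float) -> float:
    """Factor sqrt(1/t) = 0.1 * ln(s) + 1 applied to queries and keys in YaRN."""
    return 0.1 * math.log(scale) + 1.0


if __name__ == "__main__":
    s = TARGET_CONTEXT / TRAINED_CONTEXT
    base_f = rope_frequencies()
    new_f = yarn_frequencies(s)
    print(f"scale factor: {s}")
    print(f"highest-frequency dim: {base_f[0]:.4g} -> {new_f[0]:.4g}")
    print(f"lowest-frequency dim:  {base_f[-1]:.4g} -> {new_f[-1]:.4g}")
    print(f"attention scale: {yarn_attention_scale(s):.4f}")
```

Leaving the high-frequency dimensions untouched preserves the fine-grained positional information the model learned at 64K, while stretching only the slow-rotating dimensions that would otherwise see unfamiliar angles beyond the trained length.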

Completely Open Source, Empowering the Developer Ecosystem

Hugging Face has a long track record of open-source releases. For SmolLM3 it published not only the model weights but also the full training data mixture, training configurations, and code, with details available in Hugging Face's smollm repository. This transparent "training blueprint" lowers the barrier for both academic research and commercial use, since developers can reproduce or improve the model using the public datasets and frameworks. AIbase believes this move will further strengthen the open-source AI ecosystem and open up more possibilities for edge deployment and customized applications.

Efficient Design, New Choice for Edge Devices

SmolLM3 is built for efficient inference: Grouped Query Attention substantially reduces KV cache memory during inference, and together with WebGPU support this makes the model suitable for running in browsers and on edge devices. Compared with larger models, SmolLM3 strikes a "Pareto optimal" balance between performance and computational cost, offering a cost-effective option for scenarios such as education, coding, and customer support.
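
The memory saving from GQA is easy to estimate: the KV cache stores one key vector and one value vector per KV head, per layer, per token, so reducing the number of KV heads shrinks the cache proportionally. The configuration below (36 layers, 128-dimensional heads, 16 vs 4 KV heads, 2-byte values, a 128K-token sequence) is hypothetical and chosen only to show the arithmetic.

```python
from typing import NamedTuple


class AttentionConfig(NamedTuple):
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # bf16 / fp16


def kv_cache_bytes(cfg: AttentionConfig, seq_len: int, batch: int = 1) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # The leading factor 2 accounts for storing both K and V at every layer.
    return (2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim
            * cfg.bytes_per_value * seq_len * batch)


if __name__ == "__main__":
    GIB = 2 ** 30
    seq_len = 131_072
    # Hypothetical configs: full multi-head attention vs 4 KV heads shared by 16 query heads.
    mha = AttentionConfig(num_layers=36, num_kv_heads=16, head_dim=128)
    gqa = AttentionConfig(num_layers=36, num_kv_heads=4, head_dim=128)
    print(f"MHA: {kv_cache_bytes(mha, seq_len) / GIB:.1f} GiB")  # MHA: 36.0 GiB
    print(f"GQA: {kv_cache_bytes(gqa, seq_len) / GIB:.1f} GiB")  # GQA: 9.0 GiB
```

Under these assumptions the cache drops by a factor of four, the ratio of query heads to KV heads, which is the kind of saving that makes long contexts feasible on memory-constrained devices.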

Industry Impact and Future Outlook

The release of SmolLM3 is a significant step forward in performance and efficiency for small language models. Its open-source release, long-context support, and multilingual capabilities make it a strong choice for academic research, startups, and small and medium-sized enterprises. AIbase expects SmolLM3 to drive new applications in areas such as education, customer service, and local deployment, while its fully open training process should encourage more developers to take part in AI model optimization and innovation.

SmolLM3 achieves performance comparable to 4B models with just 3B parameters, showing how much small models can offer for efficient AI. By opening up its training details and data, Hugging Face has set an example of transparency and collaboration for the industry. We look forward to seeing how SmolLM3 performs in more real-world scenarios and will continue to follow its updates.

Conclusion