On July 11, PixVerse, the AI video creation platform with more than 60 million users worldwide, announced a major feature upgrade: a "Multiple Key Frame Generation" function in its start-end frame module. The company describes this as the official shift of AI video creation from generating single "clips" to a new stage of "narrative expression".

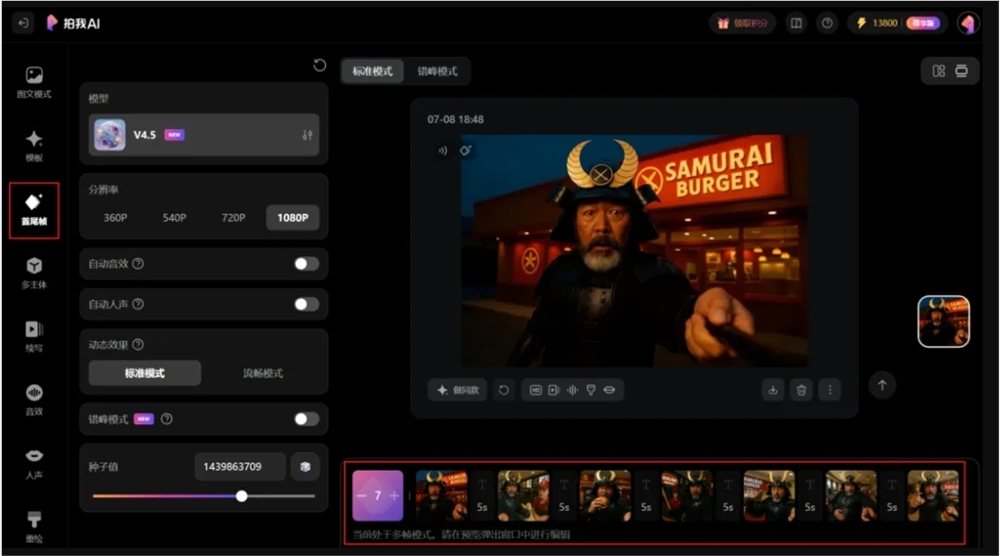

Users can now upload up to seven images as key frames through the start-end frame function in the web version. The AI automatically analyzes the semantic relationships between frames and builds smooth transitions in motion and scene from one frame to the next. This lets static images be presented as moving video, which makes the feature especially suitable for short film storyboards, product demonstrations, and other scenarios that require strong narrative continuity. For example, users can turn a set of product photos into a 360-degree rotation animation, or quickly generate a complete short film from storyboard sketches.

According to Dr. Wang Changhu, founder and CEO of Aishitech, the feature produces natural transitions in character movement and scene changes by letting users precisely define the starting, turning, and ending frames. It can also imitate a director's camera language, switching intelligently between close-ups, wide shots, and other shot types and perspectives. He said the feature significantly improves creation efficiency in fields with high narrative demands, such as movie trailers, novel and anime adaptations, and short advertising films.

The domestic version of PixVerse, launched on June 6, 2025, supports nine languages, including Chinese, English, Japanese, Korean, and French. Users have already created a range of content, such as life memoirs and stories of celebrities growing up, by combining personal photos from different stages of life or family portraits. The company expects this upgrade to further popularize AI video generation and help more ordinary users become "life directors".