Liquid AI has announced the open-source release of its next-generation Liquid Foundation Models (LFM2), a move that has drawn wide attention in the AI community. LFM2 is an efficient hybrid model optimized for edge devices, and Liquid AI positions it as a new reference point for speed, energy efficiency, and quality among small models. AIbase has compiled recent public information to analyze LFM2's technical highlights and its likely impact on the AI ecosystem.

LFM2: Redefining the Performance Boundaries of Edge AI

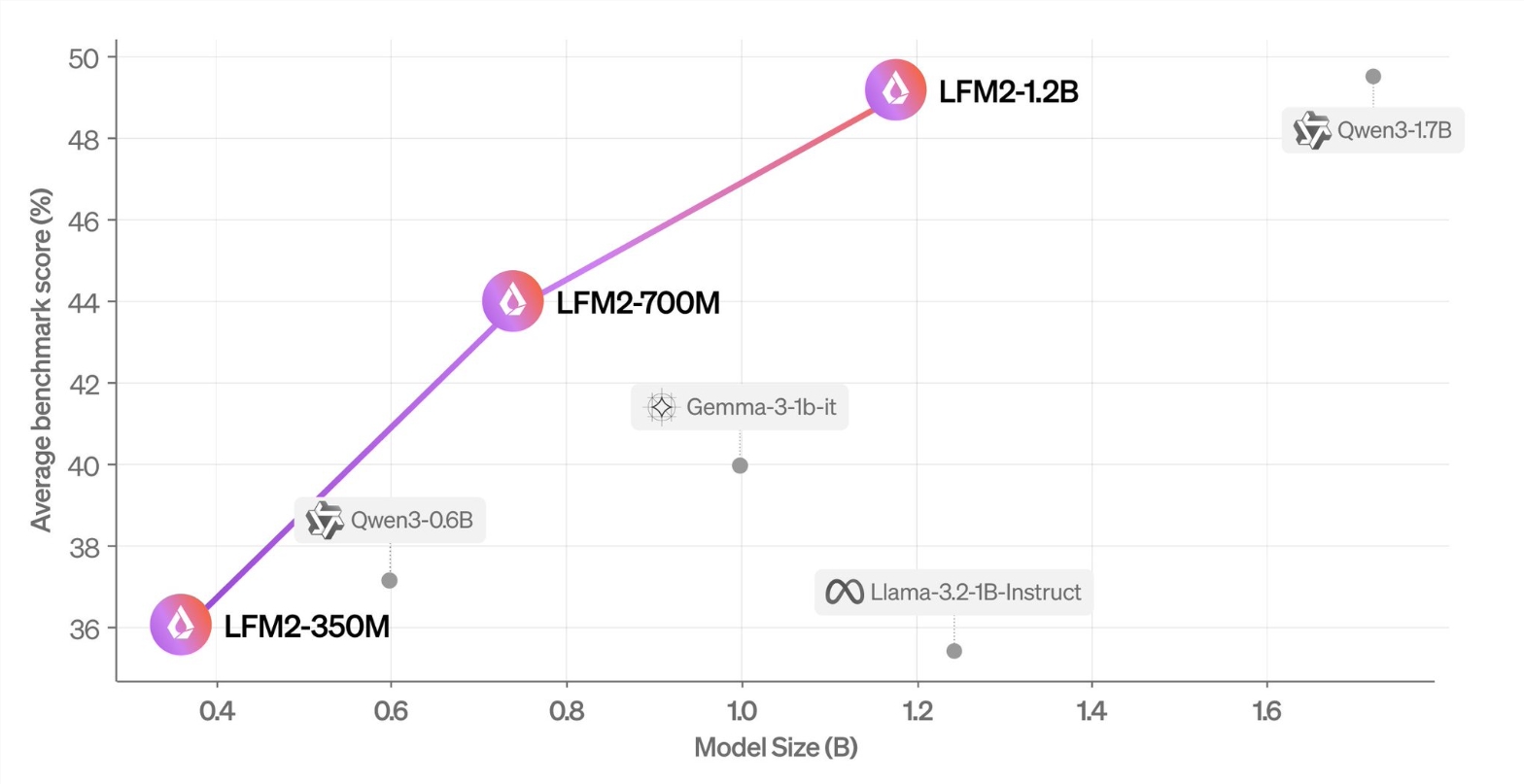

The LFM2 series includes models with 350M, 700M, and 1.2B parameters, designed for edge devices such as smartphones, laptops, cars, and embedded systems. Unlike purely Transformer-based models, LFM2 uses a hybrid architecture that, according to Liquid AI, pairs gated short-convolution blocks with a smaller number of attention blocks. The company says this design improves both training efficiency and inference speed, particularly in long-context and resource-constrained scenarios. Liquid AI reports up to 2x faster inference (decode and prefill) than Qwen3 on CPU and 3x faster training than its previous model generation, figures that point to strong potential in edge computing.
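To make the edge-device claim concrete, the weight footprint of each model size can be estimated with simple arithmetic. The sketch below assumes every parameter is stored in bfloat16 (2 bytes), the precision the article cites for LFM2, treats the nominal sizes in the model names as exact parameter counts, and ignores activations, caches, and runtime overhead. The function and constant names are illustrative only.

```python
# Rough weight-memory estimate for the three LFM2 sizes.
# Assumptions: all parameters stored in bfloat16 (2 bytes each); nominal
# parameter counts taken from the model names; runtime overhead ignored.

BYTES_PER_BF16_PARAM = 2

LFM2_SIZES = {
    "350M": 350_000_000,
    "700M": 700_000_000,
    "1.2B": 1_200_000_000,
}


def weight_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_BF16_PARAM) -> float:
    """Return the approximate weight footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def summarize() -> dict:
    """Map each model size label to its estimated weight footprint in GB."""
    return {name: weight_memory_gb(params) for name, params in LFM2_SIZES.items()}


if __name__ == "__main__":
    for name, gigabytes in summarize().items():
        print(f"LFM2-{name}: ~{gigabytes:.1f} GB of weights in bfloat16")
```

Under these assumptions the largest model needs roughly 2.4 GB for weights alone, which is within reach of recent phones and laptops. Quantized formats would lower the figure further.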

LFM2 also performs well on key tasks such as instruction following and function calling, with average benchmark results above those of similarly sized models, which makes it a strong candidate for on-device and edge AI applications. Running the model locally lowers deployment costs and keeps data on the device, an advantage in privacy-sensitive scenarios.

Open-Source Strategy: Driving Global AI Innovation

With this release, Liquid AI has published the LFM2 model weights, which developers can download from Hugging Face and try out in Liquid Playground. The move reflects Liquid AI's commitment to technological transparency and gives developers worldwide a chance to explore a new AI architecture. The release has been described as the first time a U.S. company has publicly claimed to surpass leading Chinese open-source models, such as those from Alibaba and ByteDance, in the field of efficient small language models, a sign of the company's confidence in the global AI competition.
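For readers who want to try the weights, the following is a minimal sketch of loading a checkpoint with the Hugging Face `transformers` library. It rests on assumptions not stated in the article: that repository IDs follow the pattern `LiquidAI/LFM2-<size>`, that the installed `transformers` version supports the LFM2 architecture, and that `torch` is available. The helper names `repo_id_for`, `load_lfm2`, and `demo` are hypothetical.

```python
# Sketch: load an LFM2 checkpoint from the Hugging Face Hub.
# Assumptions: repository IDs follow "LiquidAI/LFM2-<size>", and the installed
# transformers version includes LFM2 support. Helper names are illustrative.

SIZES = ("350M", "700M", "1.2B")


def repo_id_for(size: str) -> str:
    """Build the assumed Hub repository ID for a given LFM2 size."""
    if size not in SIZES:
        raise ValueError(f"unknown LFM2 size {size!r}; expected one of {SIZES}")
    return f"LiquidAI/LFM2-{size}"


def load_lfm2(size: str = "350M"):
    """Download tokenizer and weights (needs network access, torch, transformers)."""
    # Imported lazily so the helper above works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    repo_id = repo_id_for(size)
    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(repo_id, torch_dtype=torch.bfloat16)
    return tokenizer, model


def demo() -> str:
    """Generate a short continuation with the smallest model."""
    tokenizer, model = load_lfm2("350M")
    inputs = tokenizer("Edge AI is", return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=20)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `demo()` downloads the smallest checkpoint and returns a short generated string. The exact repository names should be checked against the Hugging Face collection before use.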

Liquid AI also plans to integrate LFM2 into its edge AI platform and an upcoming iOS-native application, extending the model's reach into the consumer market. The plan suggests that Liquid AI is pursuing not only technical innovation but also broader AI adoption through open source and ecosystem building.

Technical Highlights: Efficiency, Privacy, and Long Context

The core advantage of LFM2 lies in its technical design. The model supports a 32K-token context length, uses bfloat16 precision and a 65K-token vocabulary, and follows a ChatML-style chat template for multi-turn interaction. Together these features help it handle complex tasks efficiently and accurately. LFM2's low latency and high energy efficiency also make it well suited to resource-constrained edge devices and to privacy-sensitive, on-device AI applications.
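The ChatML-style template and the 32K context limit can be illustrated with a short sketch. It assumes the generic ChatML markers `<|im_start|>` and `<|im_end|>` and reads "32K" as 32,768 tokens. LFM2's actual template may add other special tokens, so in practice the template shipped with the model's tokenizer should be used. The function names are illustrative.

```python
# Sketch of a ChatML-style prompt builder with a context-budget check.
# Assumptions: generic ChatML markers; "32K" interpreted as 32,768 tokens.
# The model's real chat template should be taken from its tokenizer.

from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"
CONTEXT_LENGTH = 32_768  # assumed exact value behind "32K"


def format_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages as a single ChatML-style prompt string."""
    parts = [f"{IM_START}{m['role']}\n{m['content']}{IM_END}\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


def fits_context(prompt_tokens: int, max_new_tokens: int,
                 context_length: int = CONTEXT_LENGTH) -> bool:
    """Check that the prompt plus the requested generation fits in the window."""
    return prompt_tokens + max_new_tokens <= context_length


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize this document."},
    ]
    print(format_chatml(chat))
    print(fits_context(prompt_tokens=30_000, max_new_tokens=2_000))
```

Token counts passed to `fits_context` would come from the model's tokenizer. The check only shows how a fixed window constrains prompt length plus generation length.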

The open weights also let developers study the architecture in depth. Compared with traditional Transformer models, LFM2's hybrid design trades some architectural uniformity for a better balance between quality and efficiency, pointing to a new direction for edge AI.

Industry Impact: A Trendsetter for the Future of Edge AI

The release of LFM2 is a milestone for Liquid AI and raises the bar for the edge AI field. In an increasingly competitive global AI landscape, LFM2's efficiency and open-source strategy give small and medium-sized enterprises and independent developers a cost-effective, high-performance option. Its emphasis on privacy and on-device computing also fits the global trend toward data sovereignty and privacy protection.

AIbase believes that open-sourcing LFM2 promotes the democratization of the technology and may inspire new applications ranging from smart homes to autonomous driving and medical devices. LFM2's potential is only beginning to be explored. As more developers join the LFM2 ecosystem, the development of edge AI is likely to accelerate.

Hugging Face: https://huggingface.co/collections/LiquidAI/lfm2-686d721927015b2ad73eaa38