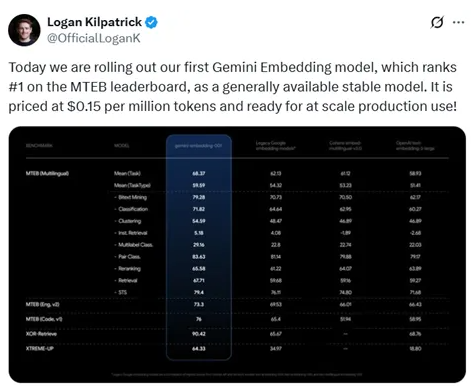

On July 15, 2025, at 1:00 AM, Google officially released its first Gemini embedding model. The model ranked first on the Massive Text Embedding Benchmark (MTEB) leaderboard with a score of 68.37, well ahead of OpenAI's 58.93. Beyond underscoring Google's lead in embedding technology, the release gives independent creators and freelancers a more cost-effective option: the Gemini embedding model costs only $0.15 per 1 million tokens.
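To put the quoted price in perspective, here is a small cost estimate. It is a sketch that assumes billing is a flat $0.15 per 1 million tokens, as stated above, and that you already know your token count. Actual pricing and token counting are set by Google and may differ.

```python
# Price quoted in the article (assumed flat rate): $0.15 per 1 million tokens.
PRICE_PER_MILLION_TOKENS_USD = 0.15


def embedding_cost_usd(num_tokens: int,
                       price_per_million: float = PRICE_PER_MILLION_TOKENS_USD) -> float:
    """Return the estimated cost in USD of embedding `num_tokens` tokens."""
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return num_tokens / 1_000_000 * price_per_million


# Example: 20,000 documents averaging 500 tokens each is 10 million tokens.
tokens = 20_000 * 500
print(f"{tokens:,} tokens -> ${embedding_cost_usd(tokens):.2f}")  # 10,000,000 tokens -> $1.50
```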

The powerful features of the Gemini embedding model

According to the benchmark results, the Gemini embedding model performed strongly across a wide range of tasks, including bitext mining, classification, clustering, instruction retrieval, multi-label classification, pair classification, reranking, retrieval, and semantic textual similarity, making it the top-ranked embedding model on the leaderboard at the time of release. Its multilingual capabilities make it especially promising for global applications that serve the many users whose native language is not English.
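Retrieval and semantic textual similarity typically compare embeddings with cosine similarity. The sketch below ranks documents against a query that way. Cosine similarity is a common choice rather than something the article specifies, and the tiny 3-dimensional vectors are made up for illustration; real embeddings would come from the model and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float],
                       docs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for model-generated embeddings (hypothetical values).
query = [0.9, 0.1, 0.0]
docs = {
    "doc_a": [0.8, 0.2, 0.1],
    "doc_b": [0.0, 0.1, 0.9],
    "doc_c": [0.5, 0.5, 0.0],
}

for doc_id, score in rank_by_similarity(query, docs):
    print(doc_id, round(score, 3))
```

With these toy vectors, `doc_a` points in nearly the same direction as the query and ranks first, while `doc_b` is almost orthogonal to it and ranks last.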

Model architecture and technological innovation

The Gemini embedding model is built on a bidirectional Transformer encoder. It builds on the Gemini model and uses bidirectional attention, allowing it to draw fully on Gemini's pre-trained language understanding. On top of the underlying 32-layer Transformer, a pooling layer aggregates the token embeddings of the input sequence into a single embedding vector. The model uses mean pooling, which simply averages the token embeddings; this strategy is simple and effective and helps the model adapt across tasks.
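Mean pooling is easy to state precisely. The minimal sketch below averages per-token vectors into one embedding. It assumes a 0/1 attention mask marks real tokens versus padding, a common convention that the article does not spell out, and it works on plain Python lists rather than the model's actual tensors.

```python
def mean_pool(token_embeddings: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the embeddings of real tokens (mask == 1) into a single vector."""
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("each token needs exactly one mask value")
    # Drop padding positions so they do not dilute the average.
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m]
    if not kept:
        raise ValueError("sequence has no unmasked tokens")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Two real tokens and one padding token (hypothetical 2-dimensional embeddings).
tokens = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))  # [2.0, 3.0]
```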

Training methods and data quality control

The Gemini embedding model was trained in multiple stages: a pre-finetuning stage followed by a fine-tuning stage. Pre-finetuning used a large-scale web corpus, mainly to adapt the model's parameters from autoregressive generation to encoding. Fine-tuning then trained the model on task-specific data so that it performs well on tasks such as retrieval, classification, and clustering.
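The article does not say which loss was used in either stage. Purely as an assumption for illustration, the sketch below uses an in-batch contrastive (InfoNCE-style) loss, a common objective for training embedding models: each query should score its own paired passage higher than every other passage in the batch. Under this assumption, the two stages would differ mainly in the data fed in (web-corpus pairs in the first stage, task-specific pairs in the second). The temperature of 0.05 is a hypothetical value.

```python
import math


def in_batch_contrastive_loss(queries: list[list[float]],
                              positives: list[list[float]],
                              temperature: float = 0.05) -> float:
    """InfoNCE-style loss: query i should match positive i.

    Every other positive in the batch acts as a negative for query i.
    Vectors are assumed to be L2-normalized, so a dot product equals cosine similarity.
    """
    n = len(queries)
    if n == 0 or n != len(positives):
        raise ValueError("need equal, non-zero numbers of queries and positives")
    total = 0.0
    for i, q in enumerate(queries):
        # Similarity of query i to every passage in the batch, scaled by temperature.
        logits = [sum(a * b for a, b in zip(q, p)) / temperature for p in positives]
        # Numerically stable log-sum-exp over the batch.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the matching passage as the correct class.
        total += log_denominator - logits[i]
    return total / n


q = [[1.0, 0.0], [0.0, 1.0]]
aligned = in_batch_contrastive_loss(q, q)                          # pairs match
swapped = in_batch_contrastive_loss(q, [[0.0, 1.0], [1.0, 0.0]])   # pairs mismatched
print(f"{aligned:.4f}")  # 0.0000
print(f"{swapped:.4f}")  # 20.0000
```

The loss is near zero when each query lines up with its own passage and large when it lines up with another passage in the batch, which is what pushes matching pairs together during training.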

To improve data quality, the research team designed a synthetic data generation strategy and used Gemini to filter the training data, removing low-quality samples so that training relied on useful examples.
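The article says Gemini itself was used to filter out low-quality samples but does not describe the criteria. The sketch below shows only the general shape of score-and-threshold filtering. `toy_quality_score` is a hypothetical word-overlap heuristic standing in for an LLM judge, and the 0.5 threshold is arbitrary.

```python
import re
from typing import Callable


def filter_training_data(samples: list[dict],
                         score_fn: Callable[[dict], float],
                         threshold: float = 0.5) -> list[dict]:
    """Keep only samples whose quality score meets the threshold."""
    return [s for s in samples if score_fn(s) >= threshold]


def toy_quality_score(sample: dict) -> float:
    """Hypothetical stand-in for an LLM judge: fraction of query words found in the passage."""
    q_words = set(re.findall(r"\w+", sample["query"].lower()))
    p_words = set(re.findall(r"\w+", sample["passage"].lower()))
    if not q_words:
        return 0.0
    return len(q_words & p_words) / len(q_words)


samples = [
    {"query": "mean pooling embeddings", "passage": "Mean pooling averages token embeddings."},
    {"query": "capital of France", "passage": "Bananas are rich in potassium."},
]

kept = filter_training_data(samples, toy_quality_score)
print([s["query"] for s in kept])  # ['mean pooling embeddings']
```

In a real pipeline, `score_fn` would wrap a model call; keeping the scorer as a parameter lets the filtering logic stay the same whichever judge is used.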

The release of the Gemini embedding model marks an important advance in Google's embedding technology and strengthens its competitiveness in artificial intelligence. As the model is adopted more widely, it is expected to drive the development of applications such as search and personalized recommendation.

Try it here: https://aistudio.google.com/prompts/new_chat