In the field of artificial intelligence, large language models (LLMs) have received widespread attention for their strong performance, but deploying them incurs significant computational and memory overhead. To address this, researchers from Google DeepMind and collaborators recently introduced a new architecture, Mixture-of-Recursions (MoR), which some have touted as a potential "killer" of the traditional Transformer.

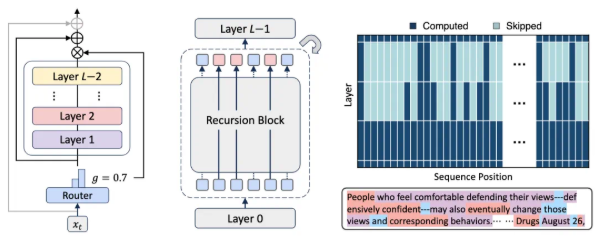

The MoR architecture builds on recursive Transformers, which reuse one shared stack of layers across several recursion steps, and aims to combine parameter sharing with adaptive computation. By integrating dynamic token-level routing into an efficient recursive Transformer, MoR aims to deliver performance comparable to larger models without their added cost. A lightweight router assigns each token its own recursion depth, deciding how many "thinking" passes through the shared block that token receives, so that compute is spent where it is most needed.
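
The routing idea above can be sketched in a few lines. This is a toy illustration, not the paper's implementation: `shared_block`, the linear `router_logits`, and all weights are hypothetical stand-ins. It shows token-choice routing, where each token is assigned a depth up front and then passes through the same shared block that many times.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def shared_block(h: Vector, scale: float = 0.5) -> Vector:
    # Hypothetical stand-in for the shared Transformer block: the SAME function
    # (same parameters) is reused at every recursion step.
    return [x + math.tanh(scale * x) for x in h]


def router_logits(h: Vector, router_weights: List[Vector]) -> List[float]:
    # Hypothetical lightweight linear router: one score per candidate depth.
    return [sum(w * x for w, x in zip(row, h)) for row in router_weights]


def assign_depth(h: Vector, router_weights: List[Vector]) -> int:
    # Token-choice routing: pick the highest-scoring depth (1-indexed) up front.
    logits = router_logits(h, router_weights)
    return 1 + max(range(len(logits)), key=lambda i: logits[i])


def mor_forward(
    tokens: List[Vector],
    router_weights: List[Vector],
    block: Callable[[Vector], Vector] = shared_block,
) -> Tuple[List[Vector], List[int]]:
    outputs, depths = [], []
    for h in tokens:
        depth = assign_depth(h, router_weights)
        for _ in range(depth):  # reuse the shared block `depth` times
            h = block(h)
        outputs.append(h)
        depths.append(depth)
    return outputs, depths


# Usage: two 2-d tokens and a router with three candidate depths (1, 2, 3).
tokens = [[1.0, 0.0], [0.0, 1.0]]
router = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
outs, depths = mor_forward(tokens, router)
print(depths)  # [1, 2]
print(sum(depths), "block calls vs", len(tokens) * len(router), "at full depth")
```

Because both tokens share one block, the parameter count does not grow with depth; the router only controls how much compute each token consumes (3 block calls here instead of 6).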

In terms of implementation, MoR uses a recursion-wise key-value (KV) caching mechanism that selectively caches and retrieves KV pairs only for the tokens still active at a given recursion depth. This significantly reduces memory bandwidth pressure and improves inference throughput. Together, MoR's design choices cut cost on several fronts: parameter sharing reduces the parameter count, token-level routing lowers computation, and recursion-wise caching reduces KV memory traffic.
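
A minimal sketch of recursion-wise KV caching, assuming per-token depths have already been chosen by a router. The function names `build_recursion_wise_cache` and `visible_keys` are hypothetical, and the cache stores token positions rather than real key/value tensors to keep the bookkeeping visible.

```python
from typing import Dict, List


def build_recursion_wise_cache(depths: List[int], num_recursions: int) -> Dict[int, List[int]]:
    # For each recursion step r, cache KV only for tokens still active at r,
    # i.e. tokens whose assigned depth is at least r.
    cache: Dict[int, List[int]] = {r: [] for r in range(1, num_recursions + 1)}
    for pos, depth in enumerate(depths):
        for r in range(1, depth + 1):
            cache[r].append(pos)
    return cache


def visible_keys(cache: Dict[int, List[int]], step: int, query_pos: int) -> List[int]:
    # Causal attention at a given recursion step reads only that step's cache.
    return [p for p in cache[step] if p <= query_pos]


depths = [3, 1, 2, 1, 3]  # hypothetical per-token depths from the router
cache = build_recursion_wise_cache(depths, num_recursions=3)
print(cache)  # {1: [0, 1, 2, 3, 4], 2: [0, 2, 4], 3: [0, 4]}
cached = sum(len(v) for v in cache.values())
print(cached, "KV entries vs", len(depths) * 3, "if every token were cached at every step")
print(visible_keys(cache, step=2, query_pos=2))  # [0, 2]
```

Deeper recursion steps hold fewer entries, so both the stored KV pairs (10 instead of 15 here) and the keys read per attention call shrink as tokens drop out.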

In experiments under the same training compute budget, MoR outperformed both the vanilla Transformer and recursive Transformer baselines while using fewer parameters. Compared with the vanilla baseline, MoR also achieved higher average few-shot accuracy despite using nearly 50% fewer parameters. The researchers attribute this to MoR's more efficient use of compute: because each token consumes fewer FLOPs on average, MoR can process more training tokens within the same budget.
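
The "more training tokens" argument is simple arithmetic. The sketch below uses the common rule of thumb of roughly 6 FLOPs per active parameter per token and ignores attention and router overhead; the helper `tokens_for_budget` and every number in it are hypothetical, chosen only to illustrate the effect. Note that parameter sharing alone does not save compute, since a shared block still runs once per recursion; the savings come from tokens that skip recursions.

```python
def tokens_for_budget(
    flops_budget: float,
    unshared_params: float,
    block_params: float,
    avg_recursions: float,
) -> float:
    # Rough training-token count under a fixed FLOP budget (~6 FLOPs per active
    # parameter per token). The shared block runs avg_recursions times per token,
    # so its compute, not its parameter count, scales with recursion depth.
    active_params = unshared_params + avg_recursions * block_params
    return flops_budget / (6.0 * active_params)


budget = 6e18  # hypothetical FLOP budget
full = tokens_for_budget(budget, 20e6, 50e6, avg_recursions=3.0)    # every token at max depth
routed = tokens_for_budget(budget, 20e6, 50e6, avg_recursions=2.0)  # router lowers the average
print(f"{full:.3g} vs {routed:.3g} tokens ({routed / full:.2f}x)")  # 5.88e+09 vs 8.33e+09 tokens (1.42x)
```

Lowering the average depth from 3 to 2 in this toy setup lets the same budget cover about 42% more tokens.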

The researchers also found that MoR consistently outperformed the recursive baselines across different compute budgets. Once the model size exceeds 360M parameters, MoR not only matches the vanilla Transformer but often surpasses it at low to medium budgets. MoR is therefore presented as a scalable and efficient alternative for large-scale pre-training and deployment.

As AI technology continues to develop, MoR offers a new approach to making large language models more efficient and marks a notable advance in AI research.

Paper link: alphaxiv.org/abs/2507.10524

Key points:

🌟 The MoR architecture improves the efficiency of large language models by allocating compute to each token dynamically and by caching KV pairs per recursion depth.

📉 Under the same compute budget, MoR outperforms the traditional Transformer while using fewer parameters.

🚀 MoR is seen as a new breakthrough in AI research, suited to large-scale pre-training and deployment.