Welcome to the "AI Daily" section, your daily guide to the world of artificial intelligence! Each day we bring you the latest AI news with a focus on developers, helping you keep up with technology trends and innovative AI product applications.

Hot AI products: click for more at https://top.aibase.com/

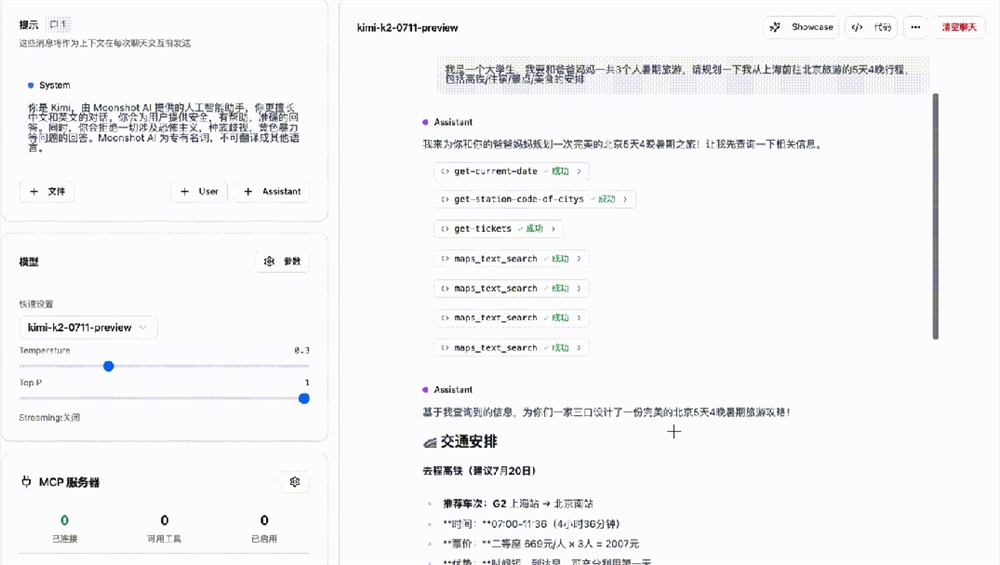

1. Moonshot AI's Kimi Open Platform Launches Kimi Playground

The launch of Kimi Playground marks a shift in AI from conversational assistant to intelligent agent: its tool-calling feature lets the model actively solve problems instead of only answering questions. The platform gives developers a one-stop tool-calling environment for integrating and debugging multiple tools, improving development efficiency.

AiBase Summary:

✨ Kimi Playground allows AI to actively solve problems through its tool calling function, transforming it from a passive information provider to an intelligent assistant.

🛠️ Provides an intuitive tool calling interface, supports built-in and third-party tools, and improves development efficiency.

📊 Demonstrates strong automation capabilities in scenarios such as data analysis and travel itinerary planning, simplifying complex tasks.

Details Link: https://platform.moonshot.cn/playground
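To make "tool calling" concrete, here is a minimal Python sketch of the application side of the loop: the model returns a structured call naming a tool and its JSON-encoded arguments, and the application executes it and sends the result back as a `tool` message. It assumes an OpenAI-compatible message format; the `get_weather` tool, the `TOOLS` registry, and `run_tool_call` are hypothetical names for illustration, not Kimi Playground or Moonshot API code, and no network request is made.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical local tool; not part of Kimi Playground or the Moonshot API.
def get_weather(city: str) -> Dict[str, Any]:
    # Stubbed data so the example runs offline.
    return {"city": city, "forecast": "sunny", "high_c": 28}

# Registry mapping tool names to Python callables (hypothetical).
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

# JSON-schema description of the tool, in the shape used by
# OpenAI-compatible chat APIs (an assumption about the target platform).
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

def run_tool_call(tool_call: Dict[str, Any]) -> Dict[str, Any]:
    """Execute one model-issued tool call and wrap the result as a tool message."""
    name = tool_call["function"]["name"]
    # Arguments arrive as a JSON string, so decode them before calling.
    args = json.loads(tool_call["function"]["arguments"])
    if name not in TOOLS:
        result: Any = {"error": f"unknown tool: {name}"}
    else:
        result = TOOLS[name](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }

# A tool call as a model might return it (hand-written here, not real output).
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": json.dumps({"city": "Beijing"})},
}
print(run_tool_call(example_call)["content"])
```

In a typical OpenAI-compatible integration, `TOOL_SPECS` is sent along with the chat request, and the returned `tool` message is appended to the conversation before the model is asked to continue.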

2. OpenAI Launches ChatGPT Agent: It Can Think Proactively, Browse, Shop, and Make Presentations!

OpenAI has officially launched ChatGPT Agent, a major step for AI from conversational assistant to autonomous task executor. It combines the capabilities of Operator and Deep Research and completes complex tasks using a virtual browser, a terminal, and APIs, making users more efficient.

AiBase Summary:

🚀 ChatGPT Agent has the ability to browse, click, fill forms, and execute code autonomously, capable of handling diverse tasks such as selecting wedding attire or planning travel itineraries.

📈 Performs exceptionally well in multiple benchmark tests, with accuracy far exceeding competitors, demonstrating strong practicality.

🔒 Emphasizes security, requiring user authorization for high-consequence operations, and implements strict protective measures to prevent malicious attacks.

Details Link: https://openai.com/zh-Hans-CN/index/introducing-chatgpt-agent/

3. Suno Releases v4.5+ with Voice Replacement Feature, Allows Replacing Original Vocals with Other Voices

Suno v4.5+ introduces several innovative features, including voice replacement, accompaniment generation, and inspiration generation, significantly enhancing the flexibility and personalized experience of music creation. At the same time, audio quality and creative experience have been comprehensively optimized, providing musicians with more powerful tools.

AiBase Summary:

🎧 The voice replacement feature lets users upload an accompaniment (or use built-in instruments), enter lyrics, and generate a complete song.

🎵 Add Instrumentals turns a user's singing or humming into a complete musical piece.

🎼 Inspire draws inspiration from playlists to quickly generate new songs that match users' preferences.

4. AI Video Costs Rise? Google Veo3 Now Available via Gemini API

Google's flagship video generation model Veo3 is now available to developers via the Gemini API, offering text-to-video functionality and supporting synchronized audio generation. This marks a new phase in AI video production, but also comes with higher costs. Veo3 is the first model capable of generating high-resolution videos from a single text prompt and synchronizing dialogue, music, and sound effects.

AiBase Summary:

🔥 Google launches the flagship video generation model Veo3, supporting text-to-video and synchronized audio generation.

💰 Veo3 is expensive: 720p video costs $0.75 per second, so costs add up quickly.

🚀 Veo3 is mainly used in professional settings, for example in projects by Cartwheel and by game studio Volley.
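Per-second pricing makes budgeting simple arithmetic. This sketch uses the $0.75-per-second 720p rate quoted above; the 8-second clip length and the batch of 10 clips are illustrative assumptions, and actual prices may change.

```python
PRICE_PER_SECOND_USD = 0.75  # 720p rate quoted in the article

def veo3_cost(seconds: float, clips: int = 1) -> float:
    """Estimated API cost in USD for `clips` videos of `seconds` each."""
    if seconds < 0 or clips < 0:
        raise ValueError("seconds and clips must be non-negative")
    return PRICE_PER_SECOND_USD * seconds * clips

print(veo3_cost(8))            # one 8-second clip (assumed length): 6.0
print(veo3_cost(8, clips=10))  # ten such clips: 60.0
```

At this rate, a full minute of 720p footage costs $45.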

5. First Live Stream Diffusion AI Model MirageLSD Makes a Stunning Debut, Real-Time Video Conversion Opens Infinite Possibilities!

MirageLSD, billed as the world's first live-stream diffusion AI model, promises revolutionary changes to live streaming, game development, and animation production through ultra-low latency and real-time video transformation. It overcomes the latency and length limits of conventional video generation models while offering simple interaction and high flexibility, which gives it great application potential.

AiBase Summary:

✨ MirageLSD runs at 24 frames per second with a response latency under 40 milliseconds, overcoming the bottlenecks of conventional video generation models.

🕹️ Supports gesture control and continuous prompt editing, allowing users to change the appearance, scene, or clothing in the video in real time, lowering the technical barrier.

🚀 Shows strong potential in game development: developers can build a game within 30 minutes, with the model handling all graphics effects automatically.

Details Link: https://mirage.decart.ai/
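The two headline numbers fit together: at 24 frames per second a new frame is due roughly every 41.7 ms, so a response latency under 40 ms means the model keeps up within a single frame interval. A quick check using only the figures quoted above (the helper name `frame_interval_ms` is illustrative):

```python
def frame_interval_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

FPS = 24           # frame rate quoted for MirageLSD
LATENCY_MS = 40.0  # quoted upper bound on response latency

budget = frame_interval_ms(FPS)
print(f"frame interval at {FPS} fps: {budget:.1f} ms")  # prints 41.7 ms
print(f"latency fits within one frame: {LATENCY_MS < budget}")
```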

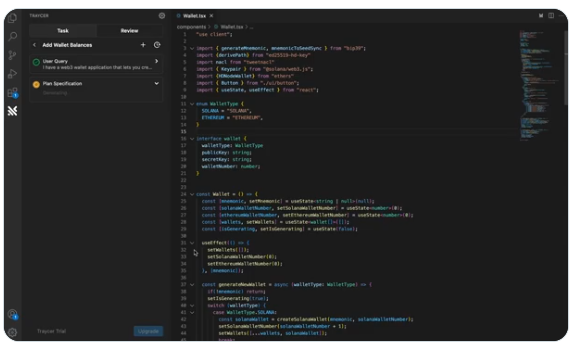

6. VSCode's AI Programming Tool Traycer Excels at Handling Large Codebases

Traycer is an AI programming assistant tool designed specifically for Visual Studio Code. It significantly improves developers' coding efficiency through smart task decomposition, code planning, and real-time analysis. Its multi-agent collaboration and high compatibility with the VSCode Agent mode make it particularly effective when dealing with complex projects.

AiBase Summary:

🧠 Task Decomposition and Planning: Generates detailed coding plans from high-level task descriptions.

🔄 Multi-Agent Collaboration: Supports multiple AI agents executing tasks asynchronously, improving the efficiency of complex project handling.

🔍 Real-Time Code Analysis: Continuously tracks the codebase, identifies potential errors, and offers optimization suggestions.

Details Link: https://traycer.ai

7. ART Framework Released! Train AI Agents with Python in One Click, from Email Search to Game Control!

The newly released ART framework brings reinforcement learning to AI agent development. It gives developers convenient tooling, supports a range of language models, and suits scenarios from email retrieval to game development. Its modular, easy-to-use design lets small and medium-sized teams and individual developers quickly build high-performance agents.

AiBase Summary:

🧠 The ART framework improves AI agent performance by integrating GRPO (Group Relative Policy Optimization), letting agents learn from experience and optimize how they execute tasks.

📦 The framework supports various language models, such as Qwen2.5, Qwen3, Llama, and Kimi, offering a wide range of choices.

🚀 Developers can integrate ART easily and add reinforcement learning with a few simple commands, lowering the barrier to entry.

Details Link: https://github.com/openpipe/art
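GRPO avoids training a separate value model: it samples several attempts at the same task, scores them, and rates each attempt against the group average. The sketch below shows only that advantage step in plain Python; it is not ART's implementation, the rewards are made up, and using the population standard deviation plus a small `eps` is an assumption (implementations differ).

```python
import statistics
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against its group's mean and standard deviation.

    Several trajectories are sampled for the same task, and each one is
    scored relative to its siblings instead of by a learned value function.
    """
    if len(rewards) < 2:
        raise ValueError("need at least two samples per group")
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (an assumption; variants exist)
    # eps keeps the division safe when every attempt earned the same reward
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four attempts at the same email-search query
print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

Successful attempts get a positive advantage and failed ones a negative advantage, so the policy update pushes toward whatever worked better than its siblings; when all attempts score the same, every advantage is zero and the group contributes no learning signal.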

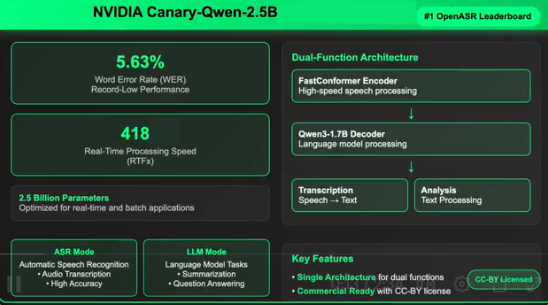

8. 5.63% Error Rate Sets New Record: NVIDIA AI Launches Commercial-Grade Ultra-High-Speed Speech Recognition Model Canary-Qwen-2.5B

NVIDIA's Canary-Qwen-2.5B model marks a significant breakthrough in automatic speech recognition and language processing, ranking first on the Hugging Face Open ASR Leaderboard with a word error rate (WER) of 5.63%. The model combines efficient transcription with language understanding and can perform tasks such as summarization and question answering directly on audio, giving it broad commercial potential.

AiBase Summary:

🧠 Technical Breakthrough: Unifies speech recognition and language understanding in a single model architecture.

⚡ Outstanding Performance: 5.63% WER and processing roughly 418 times faster than real time, with only 2.5 billion parameters.

💼 Wide Application: Suitable for enterprise transcription, knowledge extraction, meeting summaries, and compliance document processing scenarios.

Details Link: https://huggingface.co/nvidia/canary-qwen-2.5b
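Word error rate counts the minimum number of word substitutions, deletions, and insertions needed to turn a transcript into the reference, divided by the number of reference words, so 5.63% is roughly 5.6 errors per 100 words. A minimal sketch using a standard edit-distance table; leaderboards also normalize casing and punctuation before scoring, which this version skips:

```python
from typing import List

def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the previous reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[len(hyp)] / len(ref)

# One substitution (sat -> sit) and one deletion (the): 2 errors / 6 words
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```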

9. Mistral AI Adds New Features to Le Chat, Fully Catching Up with ChatGPT

Mistral AI's latest Le Chat update adds a deep research mode, voice interaction, and advanced image editing, aiming to improve the user experience and challenge OpenAI's ChatGPT. Voice recognition is built on the Voxtral model and feels natural with low latency, while image editing performs well in practical use.

AiBase Summary:

🧠 **Deep Research Mode**: Quickly generates structured research reports to help users track market trends and write business strategy documents.

🗣️ **Voice Interaction Function**: Achieves natural and low-latency voice recognition based on the Voxtral model, making it easy for users to access information anytime, anywhere.

🎨 **Advanced Image Editing**: Create and edit images with simple prompts, outperforming OpenAI's products.

10. Baidu Xiaodu Launches First MCP Server Supporting Interaction with the Physical World

Baidu Xiaodu launched the first MCP (Model Context Protocol) Server supporting interaction with the physical world, bringing a new transformation to AI application development and leading the industry towards a new era of "Intelligent Interconnection of All Things."

AiBase Summary:

💡 Xiaodu launched the first MCP Server supporting interaction with the physical world, bringing MCP support to its terminal devices and core IoT capabilities.

🌐 Xiaodu's open platform launched two core services, lowering the barrier to development and making smart-device control more efficient.

🚀 Xiaodu's MCP Server promotes smart homes from "point control" to "proactive service," ushering in a new era of "Mass Intelligent Development."

Details Link: https://dueros.baidu.com/dbp/mcp/console
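MCP messages are JSON-RPC 2.0: a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool name and its arguments. The sketch below only builds such a request; the `set_device_power` tool and its arguments are hypothetical, since the actual tools exposed by Xiaodu's MCP Server are not described here, and the transport (stdio or HTTP) is omitted.

```python
import json
from typing import Any, Dict

def make_tools_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message, ensure_ascii=False)

# Hypothetical tool name and arguments, for illustration only
request = make_tools_call(1, "set_device_power", {"device_id": "living-room-lamp", "on": True})
print(request)
```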

11. Lightricks Releases LTXV Model Update: Image-to-Video Generation Breaks 60 Seconds

Lightricks' LTXV model can now generate high-quality videos of up to 60 seconds from images. It uses an autoregressive streaming architecture and multi-scale rendering, supports real-time control for greater creative flexibility, and runs efficiently on consumer-grade GPUs.

AiBase Summary:

🎥 LTXV generates high-quality AI videos of up to 60 seconds, well beyond the length limits typical in the industry.

⚙️ Introduces dynamic scene control functionality, allowing users to adjust video content details in real time.

⚡ Efficiently runs on consumer-grade GPUs, significantly reducing computational costs, suitable for a wide range of creators.

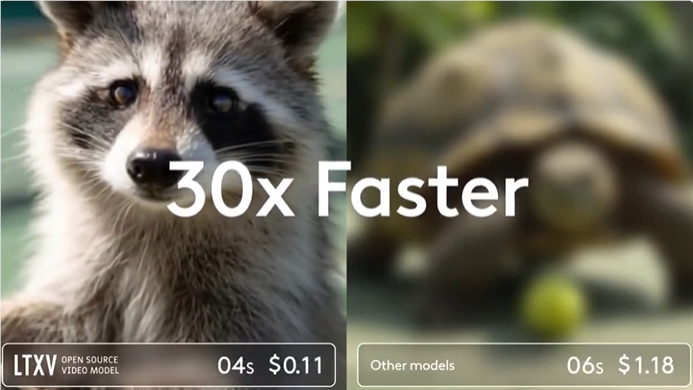

12. LTX-Video 13B Released! Generates HD Videos 30 Times Faster, Open Source AI Enables Unlimited Creativity!

LTX-Video 13B combines multi-scale rendering, fast generation, and an open-source license to give creators a powerful video generation tool that markedly improves the continuity and detail of generated videos.

AiBase Summary:

🚀 Multi-scale rendering technology improves generation speed and image quality, supporting operation on consumer-grade GPUs.

🎨 Supports multiple video generation modes, providing precise control and creative flexibility.

🌐 Open-source model empowers developers, lowers the usage threshold, and promotes AI democratization.

Details Link: https://ltx.studio