According to foreign media reports, a new study has cast doubt on the high math scores of Alibaba's Qwen2.5 model, arguing that its seemingly outstanding mathematical reasoning may stem mainly from memorized training data rather than genuine reasoning. Through a series of rigorous tests, the researchers found that data contamination may be the key factor behind Qwen2.5's strong performance on certain benchmarks.

Data Contamination Comes to Light: Performance Drops Sharply on Clean Benchmarks

The core finding of the study is that when the Qwen2.5 model was tested on "clean" benchmarks it had never seen during training, its performance dropped sharply. This suggests that the improvements observed in "contaminated" benchmark tests were largely due to the model having encountered these data during training.

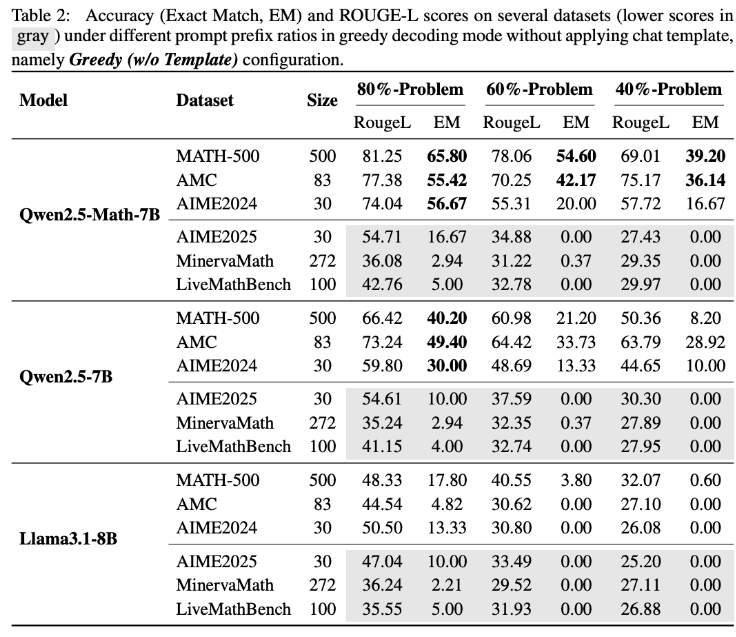

To test this hypothesis, the research team ran a partial-prompt experiment: they gave Qwen2.5-Math-7B only the first 60% of each MATH-500 benchmark question and asked it to complete the remaining 40%. The results were striking: Qwen2.5-Math-7B reconstructed the missing portion with 54.6% accuracy (53.6% correct answers). Llama-3.1-8B, by contrast, managed only 3.8% (2.4% correct answers). This large gap strongly suggests that Qwen2.5 had already "seen" these questions during training.
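The partial-prompt test can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the researchers' code: it splits each question by whitespace-separated words (the study may split by tokens or characters), counts a completion as a hit only if it reproduces the hidden 40% verbatim, and uses a hypothetical `generate` callable plus two toy "models" in place of real ones.

```python
from typing import Callable, List, Tuple


def split_prompt(question: str, prefix_ratio: float = 0.6) -> Tuple[str, str]:
    """Split a question into a visible prefix and a hidden suffix by word count."""
    words = question.split()
    cut = int(len(words) * prefix_ratio)
    return " ".join(words[:cut]), " ".join(words[cut:])


def completion_rate(
    questions: List[str],
    generate: Callable[[str], str],
    prefix_ratio: float = 0.6,
) -> float:
    """Fraction of questions whose hidden suffix the model reproduces verbatim.

    `generate` is a hypothetical stand-in for a model call: it receives the
    visible prefix and returns the model's continuation as plain text.
    """
    if not questions:
        return 0.0
    hits = 0
    for question in questions:
        prefix, suffix = split_prompt(question, prefix_ratio)
        target = suffix.split()
        continuation = generate(prefix).split()
        # Compare only as many words as the hidden part contains; extra text is ignored.
        if continuation[: len(target)] == target:
            hits += 1
    return hits / len(questions)


# Toy usage: a "model" that has memorized the benchmark versus one that has not.
BENCHMARK = [
    "If 3x + 7 = 22, what is the value of x squared minus one?",
    "How many positive divisors does the number 360 have in total?",
]


def lookup_model(prefix: str) -> str:
    """Hypothetical memorizing model: returns the rest of any question it has stored."""
    for question in BENCHMARK:
        if question.startswith(prefix):
            return question[len(prefix):].strip()
    return ""


def fresh_model(prefix: str) -> str:
    """Hypothetical model that never saw the benchmark and cannot recall the rest."""
    return "Let us think step by step."
```

Here `completion_rate(BENCHMARK, lookup_model)` returns 1.0 and `completion_rate(BENCHMARK, fresh_model)` returns 0.0, mirroring in exaggerated form the gap between a model that has memorized the questions and one that has not.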

LiveMathBench Test: Qwen2.5 Completion Rate Drops to Zero

Researchers then tested Qwen2.5 using LiveMathBench (version 202505). LiveMathBench is a "clean" benchmark that emerged after the release of Qwen2.5, meaning that Qwen2.5 could not have encountered its data during training. On this new dataset, Qwen2.5's completion rate dropped to zero, matching the performance of the Llama model, and its answer accuracy also fell to just 2%.
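The reasoning behind a "clean" benchmark is temporal: problems published after a model was released cannot be in its training data. A minimal sketch of that filter, assuming each benchmark item carries a publication date (the `published` field and the sample items are hypothetical) and using the end of September 2024, the month the article gives for Qwen2.5's launch, as a conservative cutoff:

```python
from datetime import date
from typing import Dict, List

# Conservative cutoff: end of the month in which Qwen2.5 was released (September 2024).
# A model's training data predates its release, so anything published later is unseen.
QWEN25_CUTOFF = date(2024, 9, 30)


def clean_subset(items: List[Dict], cutoff: date) -> List[Dict]:
    """Keep only benchmark items published strictly after the cutoff date.

    Each item is assumed (hypothetically) to have a `published` field of type date.
    """
    return [item for item in items if item["published"] > cutoff]


# Hypothetical items; a real benchmark would supply its own metadata.
items = [
    {"id": "old-1", "published": date(2023, 11, 2)},
    {"id": "new-1", "published": date(2025, 5, 1)},
    {"id": "new-2", "published": date(2025, 5, 14)},
]

clean = clean_subset(items, QWEN25_CUTOFF)
print([item["id"] for item in clean])  # ['new-1', 'new-2']
```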

The study points out that Qwen2.5 may have been pre-trained on large online datasets, including GitHub code repositories containing benchmark questions and their solutions. This would explain why its MATH-500 performance could still improve even when it received random or incorrect reward signals during training: the gains come from prior exposure to the data, not from the reward signal.
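In reinforcement learning with verifiable rewards, the reward is normally computed by checking the model's final answer against a reference; the "random" and "incorrect" signals discussed here replace that check. A minimal sketch of the variants, assuming exact string matching after whitespace removal (real verifiers usually parse and compare mathematical expressions). The function names and `mode` values are illustrative, not the study's code.

```python
import random
from typing import Optional


def normalize(answer: str) -> str:
    """Crude normalization: drop all whitespace so '1 / 2' matches '1/2'."""
    return "".join(answer.split())


def reward(
    prediction: str,
    reference: str,
    mode: str = "correct",
    rng: Optional[random.Random] = None,
    p: float = 0.5,
) -> float:
    """Scalar reward for one model answer under one of three hypothetical schemes.

    correct: 1.0 if the answer matches the reference, else 0.0
    random:  1.0 with probability p, regardless of the answer
    reverse: 1.0 only when the answer is wrong (an inverted signal)
    """
    is_correct = normalize(prediction) == normalize(reference)
    if mode == "correct":
        return 1.0 if is_correct else 0.0
    if mode == "random":
        rng = rng if rng is not None else random.Random()
        return 1.0 if rng.random() < p else 0.0
    if mode == "reverse":
        return 0.0 if is_correct else 1.0
    raise ValueError(f"unknown reward mode: {mode!r}")
```

Under the random scheme the reward carries no information about whether an answer is right, so any gain it produces has to come from what the model already knows, which is the core of the study's contamination argument.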

Response Template Changes and Synthetic Data Validation

Further experiments showed that when the response template was changed, Qwen2.5's performance on MATH-500 dropped sharply, while Llama-3.1-8B was almost unaffected. This further supports the view that Qwen2.5 relies heavily on specific data patterns.

To rule out memorization entirely, the research team also built the RandomCalculation dataset, which consists of fully synthetic arithmetic problems generated after Qwen2.5 was released. On these new problems, Qwen2.5's accuracy declined as the problems grew more complex. Only correct reward signals improved the model's performance: random rewards made training unstable, and reverse rewards actually degraded its math skills. Controlled RLVR (reinforcement learning with verifiable rewards) experiments confirmed these results: only correct rewards led to stable performance gains, while random or reverse rewards either failed to help or actively hurt performance.
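The article does not describe how RandomCalculation was built. As a rough illustration only, a generator of fresh arithmetic problems whose difficulty is controlled by the number of operations might look like this; the operand range, the four operators, and the left-nested parenthesization are all assumptions. `Fraction` keeps each reference answer exact, so a verifier can check it without floating-point error, and grouping problems by level makes it possible to measure how accuracy changes with complexity.

```python
import random
from fractions import Fraction
from typing import Dict, List, Tuple

OPERATORS = ["+", "-", "*", "/"]


def make_problem(num_ops: int, rng: random.Random) -> Tuple[str, Fraction]:
    """Build a fully parenthesized expression with `num_ops` operations and its exact value."""
    value = Fraction(rng.randint(1, 99))
    expr = str(value)
    for _ in range(num_ops):
        op = rng.choice(OPERATORS)
        operand = rng.randint(1, 99)  # never zero, so division is always defined
        expr = f"({expr} {op} {operand})"
        # Apply the same operation to the exact running value.
        if op == "+":
            value += operand
        elif op == "-":
            value -= operand
        elif op == "*":
            value *= operand
        else:
            value /= operand
    return expr, value


def make_dataset(
    max_ops: int, per_level: int, seed: int = 0
) -> Dict[int, List[Tuple[str, Fraction]]]:
    """Group freshly generated problems by difficulty level (number of operations)."""
    rng = random.Random(seed)
    return {
        level: [make_problem(level, rng) for _ in range(per_level)]
        for level in range(1, max_ops + 1)
    }


# Usage: three problems at each of five difficulty levels, reproducible via the seed.
dataset = make_dataset(max_ops=5, per_level=3, seed=42)
expr, answer = dataset[2][0]
print(expr, "=", answer)
```

Because every problem is generated on the fly, none of them can appear in any model's pre-training data, which is the property the study relied on.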

Implications for Future AI Research

These findings raise serious doubts about whether Qwen2.5's mathematical abilities reflect genuine reasoning, suggesting instead that the model relies heavily on memorized data. Alibaba launched Qwen2.5 in September 2024 and followed it with the Qwen3 series; whether these findings also apply to Qwen3 remains to be seen.

The authors of the study warned that contaminated benchmark tests can lead to misleading conclusions about AI progress. They emphasized that future research should rely on clean, uncontaminated benchmarks and evaluate multiple model series to obtain more reliable results.

The "Rules" of Benchmark Testing

The findings once again highlight how difficult it is to distinguish genuine reasoning from memorization in large language models, and why rigorous, clear evaluation methods are essential for reliable AI research. Previous studies have shown that benchmarks can be manipulated or "gamed." For example, Meta submitted a specially tuned version of Llama 4 that scored well on the LMArena benchmark thanks to a custom response format. Other studies have shown that models such as Gemini 2.5 Pro and Claude 3.5 Sonnet can recognize when they are being tested with up to 95% accuracy and adjust their responses accordingly, raising broader concerns about the effectiveness of current evaluation methods.