The rapid recent development of large language models (LLMs) has pushed the boundaries of artificial intelligence, especially in the open-source field, where innovations in model architecture have become a focal point of industry attention. AIbase has compiled recent online information and analyzed the architectural features and technical differences of mainstream open-source models such as Llama3.2, Qwen3-4B, SmolLM3-3B, DeepSeek-V3, Qwen3-235B-A22B, and Kimi-K2, presenting the latest technical trends in the LLM field for 2025.

The Rise of MoE Architecture: The Competition Between DeepSeek-V3 and Qwen3

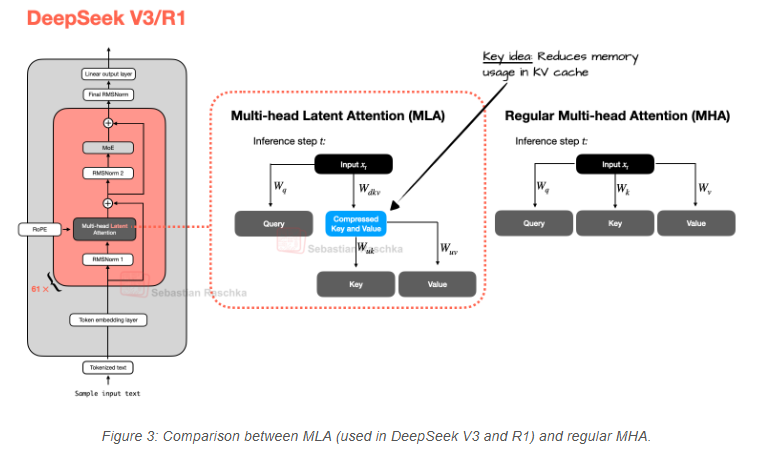

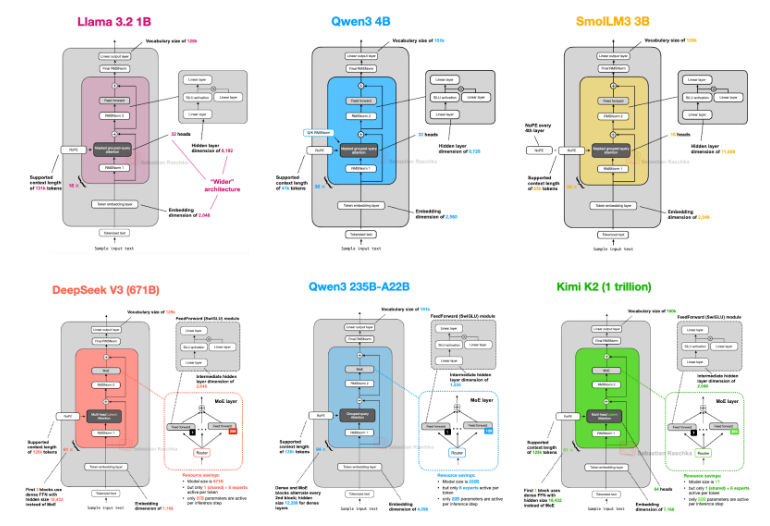

In the open-source large model field of 2025, the mixture-of-experts (MoE) architecture has become a hotspot for technological innovation. DeepSeek-V3, an MoE model with 671 billion total parameters and 37 billion activated parameters, has attracted significant attention. It uses MoE layers in every Transformer block except the first three, activates nine experts per token (each with a hidden layer size of 2048), and retains a shared expert to improve training stability. In comparison, Qwen3-235B-A22B also adopts the MoE architecture, with 235 billion total parameters and 22 billion activated parameters, but it drops the shared expert and instead activates eight experts per token (a significant increase from the two experts in Qwen2.5-MoE). AIbase noticed that the Qwen3 team has not publicly explained why it abandoned shared experts; one speculation is that training is already stable enough with the eight-expert configuration, so the extra computational cost of a shared expert is not justified.
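To make the routing idea concrete, here is a minimal sketch of a single MoE layer processing one token: a router scores every expert, only the top-k experts run, and an optional shared expert runs for every token. The function names, the toy "scaling" experts, and the softmax over the selected logits are assumptions made for illustration; neither DeepSeek-V3 nor Qwen3 is claimed to use exactly this gating function.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_layer(token, routed_experts, router_logits, k, shared_expert=None):
    """Sum the weighted outputs of the top-k experts, plus an optional shared expert."""
    output = [0.0] * len(token)
    for index, weight in route_top_k(router_logits, k):
        expert_out = routed_experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    if shared_expert is not None:
        # The shared expert runs for every token, regardless of the router.
        shared_out = shared_expert(token)
        output = [o + s for o, s in zip(output, shared_out)]
    return output

def make_scaling_expert(factor):
    """Hypothetical stand-in for an expert feed-forward network."""
    return lambda token: [factor * value for value in token]

experts = [make_scaling_expert(f) for f in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]
logits = [0.1, 2.0, 0.3, 2.0]  # toy router scores for this token

routed_only = moe_layer(token, experts, logits, k=2)
with_shared = moe_layer(token, experts, logits, k=2,
                        shared_expert=make_scaling_expert(10.0))
print(routed_only, with_shared)
```

In this toy setup, passing `shared_expert=None` corresponds to the Qwen3-style choice of routed experts only, while supplying one mirrors the DeepSeek-V3-style layout in which one expert is always active alongside the routed ones.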

DeepSeek-V3 and Qwen3-235B-A22B have highly similar architectures, but their subtle differences reflect how each development team balances performance against efficiency. For example, DeepSeek-V3 performs exceptionally well in inference speed (about 50 tokens/s), while Qwen3 excels at structured output, especially in coding and mathematical tasks. This suggests that the flexibility of the MoE architecture gives developers room to optimize models for specific task requirements.

Breakthroughs in Small and Medium-Sized Models: SmolLM3-3B and Qwen3-4B

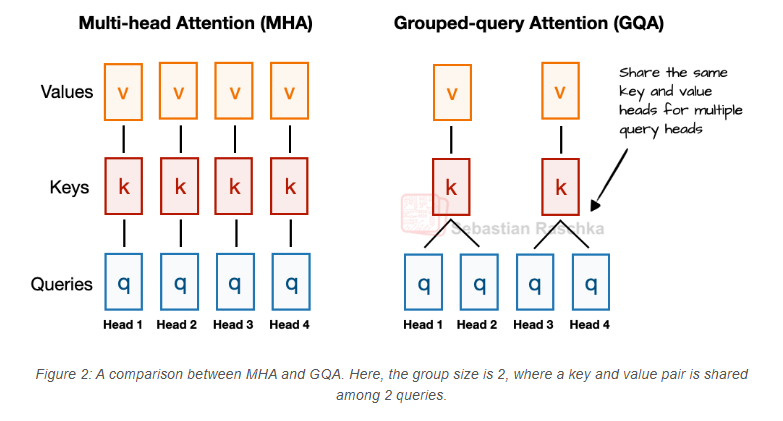

Among small and medium-sized models, SmolLM3-3B and Qwen3-4B have gained attention for their efficient performance. SmolLM3-3B uses a decoder-only Transformer architecture with grouped query attention (GQA) and a no-positional-embedding (NoPE) design. It was pre-trained on 11.2 trillion tokens covering web content, code, math, and reasoning data. Its NoPE design comes from a 2023 study that explored removing explicit position encoding (such as RoPE) to improve generalization to long sequences. SmolLM3-3B sits between Qwen3-1.7B and Qwen3-4B in parameter count, and its performance stands out among 3B-4B-scale models, especially in multilingual support (six languages) and long-context processing.
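As a rough illustration of why grouped query attention matters for small models, the sketch below maps query heads to shared key/value heads and compares key/value cache sizes. The head counts, head dimension, and layer count are illustrative assumptions, not the published configuration of SmolLM3-3B or any other model, and the helper names are hypothetical.

```python
def kv_head_for_query_head(query_head, num_query_heads, num_kv_heads):
    """Map a query head to the key/value head that its group shares."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("num_query_heads must be a multiple of num_kv_heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size

def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """KV cache size for one sequence; the factor 2 covers keys and values."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value

# Illustrative numbers only (assumed for this sketch).
NUM_LAYERS, NUM_QUERY_HEADS, NUM_KV_HEADS = 36, 16, 4
HEAD_DIM, SEQ_LEN = 128, 32768

mapping = [kv_head_for_query_head(h, NUM_QUERY_HEADS, NUM_KV_HEADS)
           for h in range(NUM_QUERY_HEADS)]
# Standard multi-head attention keeps one KV head per query head.
mha_bytes = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# GQA keeps one KV head per group of query heads.
gqa_bytes = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(mapping)
print(f"KV cache reduction: {mha_bytes / gqa_bytes:.1f}x")
```

With these assumed numbers, every four query heads share one key/value head, so the cache shrinks by the same factor of four, which is what makes long contexts cheaper to serve.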

Qwen3-4B shows strong potential for lightweight deployment, with a context length of 32,768 tokens and a 36-layer Transformer architecture. The Qwen3 models were pre-trained on about 36 trillion tokens (double that of Qwen2.5), and their reasoning and coding capabilities were refined through a four-stage training pipeline. AIbase observed that Qwen3-4B even outperformed larger Qwen2.5 models in STEM, coding, and reasoning tasks, demonstrating how much efficiency and performance small and medium-sized models can deliver.

Llama3.2 and Kimi-K2: A Clash of Classic and Innovative Designs

Llama3.2 (with 3 billion parameters) continues Meta AI's classic design as a dense decoder-only Transformer and does not use MoE layers. The hybrid architecture of alternating MoE and dense layers, with two active experts (each with a hidden layer size of 8192), belongs to Meta's later Llama 4 Maverick model. Compared to DeepSeek-V3's nine-expert design, that configuration uses fewer but larger experts, reflecting a more conservative strategy in computational resource allocation. AIbase noticed that Llama3.2 performs well in information retrieval and creative writing tasks but lags slightly behind Qwen3 and DeepSeek-V3 in complex reasoning tasks.

Kimi-K2, an MoE model with 1 trillion total parameters and 32 billion activated parameters, has become a "giant" in the open-source field. It performs excellently in autonomous coding, tool calling, and mathematical reasoning tasks, with some metrics even surpassing DeepSeek-V3. Kimi-K2's open release (under a modified MIT license) makes it a popular choice for developers and researchers, although deploying it demands substantial hardware. AIbase believes that the emergence of Kimi-K2 further promotes the use of the MoE architecture in large-scale models, marking a step toward higher performance and lower inference costs in open-source LLMs.

Technical Trends and Future Outlook

AIbase's analysis identifies the following trends in open-source LLMs in 2025: first, the MoE architecture is increasingly replacing traditional dense models at large scale, because only a fraction of the parameters is active per token, which improves parameter efficiency and inference speed; second, small and medium-sized models are approaching the performance of large models through better training data and architecture design; finally, techniques such as NoPE and improvements in long-context processing are paving the way for multimodal and multilingual applications of LLMs.
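The parameter-efficiency argument can be checked with simple arithmetic. The sketch below computes the share of parameters active per token for the three MoE models discussed here, using their publicly reported total and activated parameter counts in billions; the dictionary layout and function name are assumptions for this example.

```python
# (total, activated) parameter counts in billions, as publicly reported.
MODELS = {
    "DeepSeek-V3": (671, 37),
    "Qwen3-235B-A22B": (235, 22),
    "Kimi-K2": (1000, 32),
}

def active_fraction(total_billion, active_billion):
    """Share of the model's parameters used in one token's forward pass."""
    return active_billion / total_billion

fractions = {name: active_fraction(*counts) for name, counts in MODELS.items()}

for name, fraction in fractions.items():
    print(f"{name}: {fraction:.1%} of parameters active per token")
```

The active shares come out to roughly 5.5% for DeepSeek-V3, 9.4% for Qwen3-235B-A22B, and 3.2% for Kimi-K2, so per-token compute scales with the activated count rather than the total.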

Although there are minor differences in the architecture of these models, such as the number of experts, position encoding methods, and training data scale, the impact of these differences on final performance still needs further research. AIbase recommends that developers weigh performance, inference cost, and deployment difficulty when choosing models. For example, users pursuing inference speed can choose DeepSeek-V3, while those focusing on output quality and multi-task capabilities may prioritize Qwen3-235B-A22B.

The Golden Age of Open-Source LLMs

From the solid design of Llama3.2 to the extreme MoE architecture of Kimi-K2, open-source large models achieved dual breakthroughs in technology and application in 2025. AIbase believes that with the continuous contributions of the open-source community and advancements in hardware technology, LLM architecture innovation will further reduce the barriers to AI development, bringing more intelligent solutions to global users. In the future, AIbase will continue to track the latest developments in open-source LLMs and provide readers with cutting-edge insights.