Recently, the Kimi team released the technical report for Kimi K2, revealing how the new model was trained. Kimi K2 is a mixture-of-experts model with 1 trillion total parameters, of which 32 billion are activated for each token. Just one week after its launch, Kimi K2 took first place in a global ranking of open-source models, surpassing DeepSeek and performing comparably to top closed-source models such as Grok 4 and GPT-4.5.

Kimi K2's success is attributed to its training methods and technical architecture. First, the team introduced the MuonClip optimizer in place of the conventional Adam optimizer. MuonClip combines the token efficiency of the Muon optimizer with a stabilization mechanism (QK-Clip) that keeps attention logits from exploding, which allowed Kimi K2 to be pre-trained on 15.5 trillion tokens without a single loss spike. In addition, the team built a large-scale data synthesis pipeline for agentic tool use, spanning many domains and tools, to give the model rich training scenarios.
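To make the optimizer idea more concrete, here is a minimal NumPy sketch of two ingredients MuonClip is built from: a Muon-style update that approximately orthogonalizes the momentum matrix with a Newton-Schulz iteration, and a QK-Clip step that rescales a head's query and key projections whenever its largest attention logit exceeds a threshold τ. It is simplified to one standard attention head and omits details such as weight decay; the iteration coefficients, learning rate, momentum, τ = 100, and the even split α = 0.5 are illustrative assumptions rather than values confirmed by this article.

```python
import numpy as np

def newton_schulz_orthogonalize(G: np.ndarray, steps: int = 5, eps: float = 1e-7) -> np.ndarray:
    """Approximately map G to a semi-orthogonal matrix (Muon-style quintic iteration)."""
    a, b, c = 3.4445, -4.7750, 2.0315  # coefficients used by the open-source Muon optimizer
    X = G / (np.linalg.norm(G) + eps)  # Frobenius scaling keeps every singular value <= 1
    transposed = X.shape[0] > X.shape[1]
    if transposed:  # work in the wide orientation so X @ X.T is the smaller Gram matrix
        X = X.T
    for _ in range(steps):
        A = X @ X.T
        B = b * A + c * (A @ A)
        X = a * X + B @ X
    return X.T if transposed else X

def muon_step(W, grad, momentum, lr=0.02, beta=0.95):
    """One simplified Muon update: momentum, then an orthogonalized step."""
    momentum = beta * momentum + grad
    W = W - lr * newton_schulz_orthogonalize(momentum)
    return W, momentum

def max_attention_logit(X, W_q, W_k):
    """Largest scaled logit q_i . k_j / sqrt(d) for one head over a batch X."""
    Q, K = X @ W_q, X @ W_k
    return float((Q @ K.T).max() / np.sqrt(W_q.shape[1]))

def qk_clip(W_q, W_k, s_max, tau=100.0, alpha=0.5):
    """If the head's max logit exceeds tau, shrink W_q and W_k so it returns to tau."""
    if s_max <= tau:
        return W_q, W_k  # head is within bounds: leave weights untouched
    gamma = tau / s_max
    # gamma**alpha * gamma**(1 - alpha) == gamma, so every logit is scaled by gamma
    return W_q * gamma**alpha, W_k * gamma**(1 - alpha)

# Usage: deliberately large weights produce a logit far above tau.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 16))
W_q = rng.normal(size=(16, 8)) * 10.0
W_k = rng.normal(size=(16, 8)) * 10.0

W_q, mom_q = muon_step(W_q, rng.normal(size=W_q.shape), np.zeros_like(W_q))
s_max = max_attention_logit(X, W_q, W_k)
W_q, W_k = qk_clip(W_q, W_k, s_max)
print(s_max, max_attention_logit(X, W_q, W_k))
```

Because the clip only fires for heads above τ, well-behaved heads keep their Muon updates unchanged, which is the appeal of applying it as a post-update correction.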

Notably, Kimi K2 used a "rephrasing" method during training to improve data efficiency. Rather than simply repeating the same content, this method re-expresses the same knowledge in different wording and structure, so the model learns the underlying information instead of memorizing a single phrasing. For mathematical and knowledge-intensive texts, Kimi K2 rewrites complex material into forms that are easier to learn from, such as an accessible study-note style. According to the report, training for one epoch on rewritten data achieved higher accuracy than training for ten epochs on the original data.
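The epoch comparison above can be pictured as two data schedules with the same number of training samples: repeating the raw corpus for several epochs versus showing several distinct rewrites of each document once. The Python sketch below builds both. `toy_rephrase` and the `STYLES` list are hypothetical stand-ins for an LLM rewriting call, not Kimi's actual pipeline, and real rewrites would differ in token count.

```python
from typing import Callable, List

# Hypothetical rewrite styles; a real pipeline would prompt an LLM with instructions like these.
STYLES = ["study note", "Q&A", "step-by-step explanation", "summary", "worked example"]

def toy_rephrase(doc: str, style_id: int) -> str:
    """Stand-in for an LLM rewrite: tags the text with a style so each rewrite differs."""
    style = STYLES[style_id % len(STYLES)]
    return f"[{style} #{style_id}] {doc}"

def repeat_schedule(docs: List[str], epochs: int) -> List[str]:
    """Baseline: the same raw documents, seen once per epoch."""
    return [doc for _ in range(epochs) for doc in docs]

def rephrase_schedule(docs: List[str], rephrase: Callable[[str, int], str], n_rewrites: int) -> List[str]:
    """Rephrasing: n_rewrites distinct versions of each document, each seen once."""
    return [rephrase(doc, i) for doc in docs for i in range(n_rewrites)]

corpus = ["The derivative of x^2 is 2x.", "Water boils at 100 C at sea level."]
baseline = repeat_schedule(corpus, epochs=10)
rephrased = rephrase_schedule(corpus, toy_rephrase, n_rewrites=10)

print(len(baseline), len(set(baseline)))    # 20 samples, 2 unique
print(len(rephrased), len(set(rephrased)))  # 20 samples, 20 unique
```

Both schedules cost the same number of samples, but the rephrased one never shows the model an identical sequence twice, which is the property the rephrasing method relies on.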

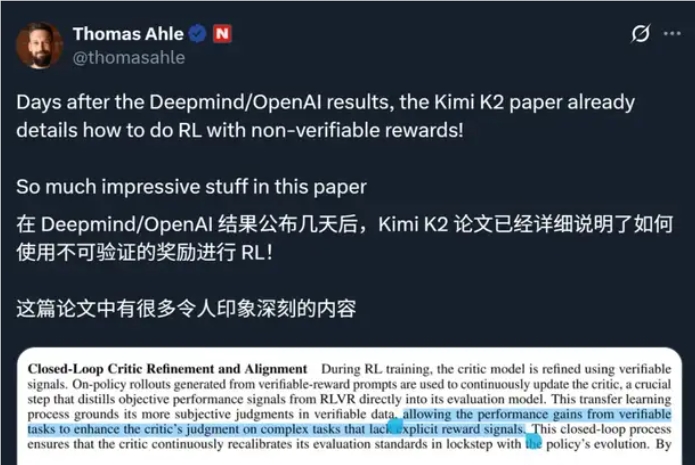

In the post-training phase, Kimi K2 went through supervised fine-tuning followed by reinforcement learning. Verifiable reward environments, together with a self-critique mechanism in which the model evaluates its own outputs, drive continued improvement across diverse tasks. The RL stage also introduced budget control, which caps the number of tokens per response, and temperature decay, which starts with a high sampling temperature to encourage exploration and lowers it as training progresses; both improve the quality and stability of the generated text.
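The two RL-stage controls can be sketched in a few lines of Python. This sketch assumes budget control means truncating any response that exceeds a per-response token budget and replacing its reward with a penalty, and temperature decay means lowering the sampling temperature over training. The linear decay shape, the start and end temperatures, the budget, and the penalty value are all illustrative assumptions; the article does not specify them.

```python
import math
from typing import List, Tuple

def temperature_at(step: int, total_steps: int, t_start: float = 1.0, t_end: float = 0.6) -> float:
    """Linearly decay the sampling temperature from t_start to t_end (illustrative schedule)."""
    frac = min(max(step / max(total_steps, 1), 0.0), 1.0)
    return t_start + (t_end - t_start) * frac

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Higher temperature flattens the distribution (more exploration); lower sharpens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def budgeted_reward(response_tokens: List[str], task_reward: float, budget: int,
                    penalty: float = -1.0) -> Tuple[List[str], float]:
    """Truncate responses over the token budget and replace their reward with a penalty."""
    if len(response_tokens) > budget:
        return response_tokens[:budget], penalty
    return response_tokens, task_reward

# Usage
logits = [2.0, 1.0, 0.5]
early = softmax_with_temperature(logits, temperature_at(0, 1000))
late = softmax_with_temperature(logits, temperature_at(1000, 1000))
print(max(early), max(late))  # the late-stage distribution is more peaked

tokens, reward = budgeted_reward(["tok"] * 12, task_reward=1.0, budget=8)
print(len(tokens), reward)  # 8 -1.0
```

Penalizing over-budget responses, rather than only truncating them, gives the policy a direct incentive to finish within the limit instead of relying on the cutoff.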

To meet these training demands, Kimi K2 was trained on a large-scale, high-bandwidth cluster of NVIDIA H800 GPUs, which keeps both computation and data transfer efficient.

The release of Kimi K2 brings new momentum to open-source model development and merits attention from both inside and outside the industry.