Renowned AI entrepreneur Li Mu and his team at Boson.ai recently released Higgs Audio v2, a new open-source text-to-speech (TTS) large model. Beyond converting text into speech, the model supports multilingual dialogue generation, automatic prosody adjustment, and voice cloning, marking a significant advance in speech synthesis.

The strength of Higgs Audio v2 lies in its multimodal capabilities. It not only processes text but also understands and generates audio, which lets it complete complex tasks. For example, it can write a song, sing it in a specific voice, and add background music at the same time, something earlier TTS systems could not do.

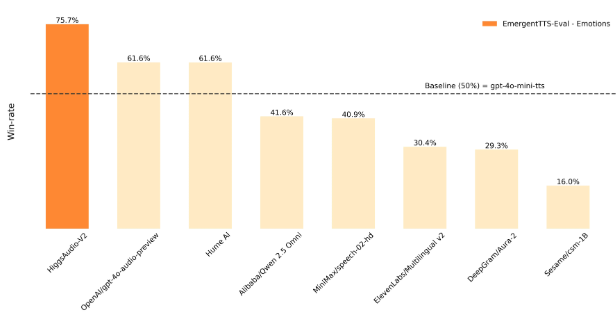

The model was trained on more than 10 million hours of audio data and performs strongly across benchmarks. On EmergentTTS-Eval, Higgs Audio v2 achieves win rates of 75.7% and 55.7% over GPT-4o-mini-tts in the "Emotions" and "Questions" categories, respectively. It also posts strong results on traditional TTS benchmarks.

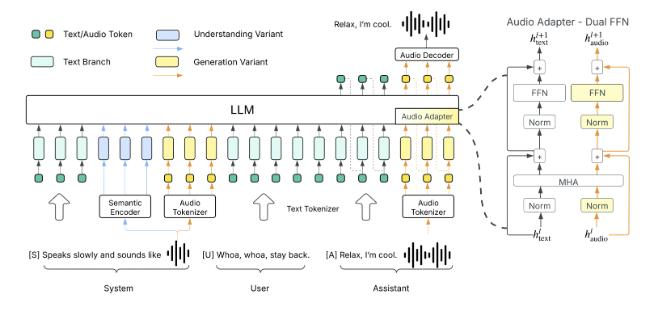

On the technical side, Higgs Audio v2 relies on a discrete audio tokenizer that converts the audio signal into a sequence of tokens at 25 frames per second while capturing both semantic and acoustic features. The model architecture builds on a pre-trained large language model, which gives it a strong grasp of language and context. The model also supports in-context learning: given a simple prompt, it can adapt to new tasks quickly, which enables zero-shot voice cloning.
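To give a sense of what a 25 frames-per-second tokenizer means in practice, the sketch below works out how many frames cover a clip of a given length and how many raw samples each frame stands for. It is only illustrative arithmetic: the function names are hypothetical, the 24 kHz sample rate is an assumption rather than a figure from the article, and the number of tokens per frame is deliberately left out because the article does not state it.

```python
import math

# Frame rate stated in the article; everything else here is illustrative.
FRAME_RATE_HZ = 25


def frames_for_duration(seconds: float, frame_rate: int = FRAME_RATE_HZ) -> int:
    """Return the number of tokenizer frames needed to cover `seconds` of audio."""
    if seconds < 0:
        raise ValueError("duration must be non-negative")
    # A partial trailing frame still needs a full frame, so round up.
    return math.ceil(seconds * frame_rate)


def samples_per_frame(sample_rate_hz: int, frame_rate: int = FRAME_RATE_HZ) -> float:
    """Return how many raw audio samples a single frame represents.

    `sample_rate_hz` is an assumed input; the article does not give one.
    """
    return sample_rate_hz / frame_rate


if __name__ == "__main__":
    print(frames_for_duration(10))      # frames for a 10-second clip
    print(frames_for_duration(60))      # frames for a one-minute clip
    print(samples_per_frame(24_000))    # samples per frame at an assumed 24 kHz
```

At this rate a one-minute clip becomes a sequence of 1,500 frames, which is short enough for a language-model backbone to handle as ordinary context.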

In terms of applications, Higgs Audio v2 can power real-time voice chat, offering natural, low-latency interaction with emotional expression, which makes it well suited to virtual hosts and real-time voice assistants. For audio content creation, it can generate natural dialogue and narration, supporting audiobooks, interactive training, and dynamic storytelling. Finally, its voice cloning feature can replicate a specific person's voice, opening up new possibilities in entertainment and creative work.

The model's code is fully open source and available on GitHub and Hugging Face. It can be installed locally, which requires a GPU build of PyTorch; alternatively, Docker can be used to simplify setup.
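Before attempting a local install, it can help to check which of the two routes mentioned above is available on a given machine. The following is a minimal sketch, not part of the project's own tooling: `check_environment` is a hypothetical helper that uses only the Python standard library plus, if present, PyTorch's `torch.cuda.is_available()`.

```python
import importlib.util
import shutil


def check_environment() -> dict:
    """Report whether a GPU-enabled PyTorch or a Docker CLI appears to be available.

    Hypothetical helper for illustration; it does not install anything.
    """
    report = {
        "torch_installed": False,
        "cuda_available": False,
        # True if a `docker` executable is found on PATH.
        "docker_on_path": shutil.which("docker") is not None,
    }
    # Only import torch if it is actually installed, so the check never crashes.
    if importlib.util.find_spec("torch") is not None:
        try:
            import torch

            report["torch_installed"] = True
            report["cuda_available"] = bool(torch.cuda.is_available())
        except Exception:
            # A broken install is treated the same as a missing one.
            pass
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'yes' if ok else 'no'}")
```

If `cuda_available` is reported as "no" but `docker_on_path` is "yes", the Docker route is likely the easier starting point.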