The ByteDance Seed team has officially announced its latest research result, Seed LiveInterpret 2.0, an end-to-end large model for simultaneous interpretation. The team presents the release as a major breakthrough in machine simultaneous interpretation: the model's translation accuracy approaches that of professional interpreters, and its latency is only about 3 seconds. It also performs real-time voice cloning, delivering the translated speech in the speaker's own voice, which makes cross-lingual communication considerably more natural and fluent.

Simultaneous interpretation has long been regarded as the most demanding skill in translation. The interpreter must listen and speak at the same time, converting between languages within a very short window, which also makes it one of the hardest problems for translation-technology researchers. Seed LiveInterpret 2.0 achieves state-of-the-art (SOTA) quality in Chinese-English simultaneous interpretation while keeping speech latency extremely low, setting a new technical benchmark for the field.

Seed LiveInterpret 2.0 is built on a full-duplex, end-to-end framework for speech understanding and generation. It supports bidirectional Chinese-English translation and can process speech input from multiple speakers in real time. Like a human interpreter, it can "listen and speak" at the same time with very low latency, receiving source-language speech while directly outputting the target-language translation. The model also supports zero-shot voice cloning: it synthesizes the translation in the speaker's "original voice" from the live conversation alone, without collecting voice samples in advance, which makes communication smoother and more natural.
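The article does not say how Seed LiveInterpret 2.0 decides when to start speaking. To make the idea of "listening and speaking at the same time" concrete, here is a minimal sketch of the wait-k policy, a well-known fixed read/write schedule from the simultaneous machine translation literature. It is not Seed's method: it assumes discrete text tokens rather than audio, and the function names `wait_k_schedule` and `source_read_before_writes` are hypothetical.

```python
from typing import List

# Illustrative sketch only: the classic wait-k policy, NOT the
# (undisclosed) policy used by Seed LiveInterpret 2.0. Operates on
# abstract token counts; real systems work on streaming audio.


def wait_k_schedule(src_len: int, tgt_len: int, k: int) -> List[str]:
    """Return the READ/WRITE action sequence of a wait-k policy.

    Target token t (0-based) is written only after
    min(k + t, src_len) source tokens have been read.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    actions: List[str] = []
    read = 0
    for t in range(tgt_len):
        # "Listen": read source tokens until the wait-k condition holds.
        needed = min(k + t, src_len)
        while read < needed:
            actions.append("READ")
            read += 1
        # "Speak": emit the next target token while the source is still arriving.
        actions.append("WRITE")
    # Consume any trailing source tokens left after the last write.
    while read < src_len:
        actions.append("READ")
        read += 1
    return actions


def source_read_before_writes(actions: List[str]) -> List[int]:
    """For each WRITE, report how many source tokens had been read so far."""
    counts: List[int] = []
    read = 0
    for action in actions:
        if action == "READ":
            read += 1
        else:
            counts.append(read)
    return counts


if __name__ == "__main__":
    schedule = wait_k_schedule(src_len=5, tgt_len=5, k=2)
    print(schedule)
    print(source_read_before_writes(schedule))
```

With `k=2` and five tokens on each side, the schedule starts speaking after two source tokens and then alternates, so each target token is written after 2, 3, 4, 5 and 5 source tokens respectively. A fixed k trades latency against available context in a single knob; the article instead describes a model that adjusts its output rhythm to the clarity, fluency and complexity of the input, which a fixed wait-k schedule does not do.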

In testing, Seed LiveInterpret 2.0 demonstrated strong capabilities. Given a 40-second passage of continuous Chinese speech, the model produced a fluent English translation in the same timbre with low latency. It also picks up voice characteristics quickly: whether the speaker sounds like Zhu Bajie from "Journey to the West" or Lin Daiyu from "Dream of the Red Chamber," the model can interpret on the spot in a matching voice through real-time interaction, even though it has never "heard" that character's voice before.

Compared with traditional machine simultaneous interpretation systems, Seed LiveInterpret 2.0 has clear advantages in several respects. First, precise speech understanding gives it translation accuracy close to that of human interpreters. In complex scenarios such as multi-person meetings, its bidirectional Chinese-English translation accuracy exceeds 70%, and for single-speaker speeches it exceeds 80%, approaching the level of professional human interpreters. Second, its full-duplex speech understanding and generation framework lets it "listen and speak" with very low latency: translation latency drops to as little as 2-3 seconds, a reduction of more than 60% compared with traditional machine simultaneous interpretation systems. Third, zero-shot voice cloning lets the model "speak" the foreign language in the speaker's own voice in real time, making communication more immersive and personal. Finally, the model balances translation quality, latency and speech rhythm intelligently, adjusting its output pace to the clarity, fluency and complexity of the input and adapting to the characteristics of each language. Even for very long passages, the interpreted speech keeps a natural, smooth rhythm.

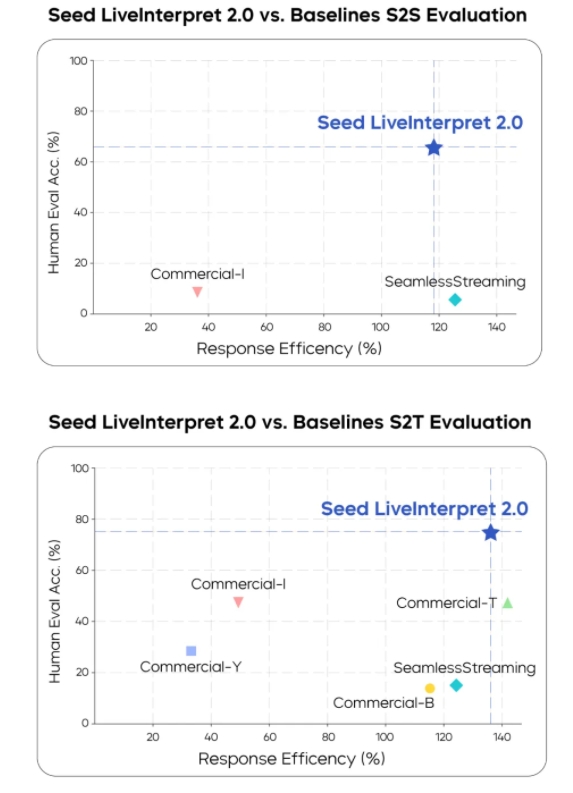

In professional human evaluations, Seed LiveInterpret 2.0 performed especially well. The evaluation used RealSI, an open test set covering 10 domains in each direction of Chinese-English translation. Human evaluators scored several leading industry simultaneous interpretation systems, including Seed LiveInterpret 2.0, in both directions, using the proportion of valid information conveyed (Valid Information Proportion) as the metric. In speech-to-text simultaneous interpretation, Seed LiveInterpret 2.0 achieved an average human score of 74.8 for Chinese-English translation quality (out of 100, measuring translation accuracy), 58% higher than the second-ranked baseline system (47.3 points). In speech-to-speech Chinese-English simultaneous interpretation, only three of the evaluated systems supported the task. Among them, Seed LiveInterpret 2.0 reached an average translation quality score of 66.3 (out of 100, assessing not only translation accuracy but also output latency, speaking rate, pronunciation and fluency), far ahead of the other baselines and close to the level of professional human interpreters. Moreover, most baseline systems do not support voice cloning at all.
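As a quick check of the 58% figure, the relative improvement over the second-ranked system follows directly from the two reported speech-to-text scores:

$$
\frac{74.8 - 47.3}{47.3} = \frac{27.5}{47.3} \approx 0.581 \approx 58\%
$$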

On latency, Seed LiveInterpret 2.0 had an average first-character output delay of only 2.21 seconds in the speech-to-text setting and an output delay of only 2.53 seconds in the speech-to-speech setting, striking a balance between translation quality and latency.

Technical Report:

https://arxiv.org/pdf/2507.17527

Project Homepage:

https://seed.bytedance.com/seed_liveinterpret