In multimodal embedding learning, researchers have long worked to bridge different data modalities so that diverse information can be understood and processed together. Recently, a research team from Salesforce Research, the University of California, Santa Barbara, the University of Waterloo, and Tsinghua University jointly proposed VLM2Vec-V2, a new multimodal embedding framework that aims to unify retrieval tasks across images, videos, and visual documents.

Existing multimodal embedding models are typically trained on datasets such as MMEB and M-BEIR, which focus mainly on natural images and photographs. These datasets draw mostly on MSCOCO, Flickr, and ImageNet and do not cover a broader range of visual information such as documents, PDFs, websites, videos, and slides. As a result, existing embedding models fall short on practical tasks such as article search, website search, and YouTube video search.

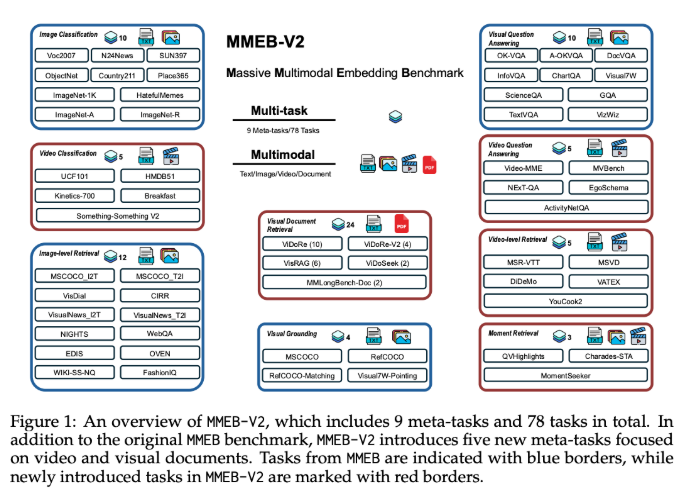

VLM2Vec-V2 first expands the MMEB benchmark into MMEB-V2, adding five new task types: visual document retrieval, video retrieval, temporal grounding, video classification, and video question answering. This expansion gives multimodal embedding models a more comprehensive evaluation standard. In addition, as a general-purpose embedding model, VLM2Vec-V2 supports a range of input formats, including images, videos, and visual documents, and performs well on both the new tasks and traditional image benchmarks, providing a more flexible and scalable foundation for research and practical applications.

VLM2Vec-V2 uses Qwen2-VL as its backbone, chosen for its strengths in multimodal processing. Qwen2-VL offers three key features: Naive Dynamic Resolution, Multimodal Rotary Position Embedding (M-RoPE), and a unified framework that combines 2D and 3D convolutions. To train effectively on multiple tasks across varied data sources, VLM2Vec-V2 also introduces a flexible data sampling pipeline that uses a preset sampling weight table and an interleaved sub-batching strategy to improve the stability of contrastive learning.
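To make the sampling idea more concrete, here is a minimal sketch of how a weight table and interleaved sub-batches could fit together. It is an illustration under assumptions, not the authors' implementation: the function name `interleaved_batches`, the source names, the weights, and the batch sizes are all hypothetical. Each full batch is assembled from several sub-batches, and every sub-batch is drawn from a single source chosen according to the weight table.

```python
import random


def interleaved_batches(datasets, weights, batch_size, sub_batch_size,
                        num_batches, seed=0):
    """Yield batches built from sub-batches, each drawn from one source.

    Hypothetical helper for illustration only; not taken from the
    VLM2Vec-V2 codebase.
    """
    if batch_size % sub_batch_size != 0:
        raise ValueError("batch_size must be a multiple of sub_batch_size")
    rng = random.Random(seed)
    names = list(datasets)
    probs = [weights[name] for name in names]
    n_sub = batch_size // sub_batch_size
    for _ in range(num_batches):
        batch = []
        # Choose one source per sub-batch according to the weight table.
        for source in rng.choices(names, weights=probs, k=n_sub):
            # All examples in a sub-batch share a source (sampled with
            # replacement here to keep the sketch short).
            examples = rng.choices(datasets[source], k=sub_batch_size)
            batch.extend((source, example) for example in examples)
        yield batch


# Toy data sources and an assumed weight table (values are made up).
datasets = {
    "image": [f"img_{i}" for i in range(100)],
    "video": [f"vid_{i}" for i in range(100)],
    "visdoc": [f"doc_{i}" for i in range(100)],
}
weights = {"image": 1.0, "video": 1.0, "visdoc": 2.0}

batches = list(interleaved_batches(datasets, weights, batch_size=8,
                                   sub_batch_size=4, num_batches=3))
for batch in batches:
    print([source for source, _ in batch])
```

One plausible motivation for this layout is that examples from the same source act as harder in-batch negatives for one another in contrastive training, while mixing several sub-batches keeps each full batch diverse. The weight table then controls how often each source contributes a sub-batch.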

In a comprehensive evaluation across 78 datasets, VLM2Vec-V2 achieved the highest average score, 58.0, outperforming several strong baselines and performing well on both image and video tasks. It trails the ColPali model slightly in visual document retrieval, but it still points to a direction for future research on unified multimodal embedding frameworks.

Project: https://github.com/TIGER-AI-Lab/VLM2Vec

Hugging Face: https://huggingface.co/VLM2Vec/VLM2Vec-V2.0

Key points:

📊 VLM2Vec-V2 is a newly launched multimodal embedding learning framework that can unify retrieval tasks for images, videos, and visual documents.

📝 MMEB-V2, the evaluation benchmark released with the model, adds five new task types covering visual documents and video, making multimodal embedding evaluation more comprehensive.

🚀 VLM2Vec-V2 achieves the highest average score across 78 datasets, making it a strong baseline for multimodal embedding research.