Alibaba officially open-sources the video generation model "Tongyi Wanxiang Wan 2.2" tonight. The release includes three core models: text-to-video (Wan 2.2-T2V-A14B), image-to-video (Wan 2.2-I2V-A14B), and unified video generation (Wan 2.2-IT2V-5B), marking a major breakthrough in video generation technology.

Industry-first MoE Architecture, 50% Improvement in Computational Efficiency

Tongyi Wanxiang 2.2 is the first to introduce the MoE (Mixture of Experts) architecture into video generation diffusion models, addressing the excessive compute consumption caused by the long token sequences that video generation involves. The text-to-video and image-to-video models each have 27B total parameters, of which 14B are active.

The architecture consists of a high-noise expert model and a low-noise expert model, which are responsible for the overall layout of the video and for refining its details, respectively. At the same parameter scale, it saves about 50% of the computational resources while delivering significant improvements in complex motion generation, character interaction, and aesthetic expression.
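
As a rough illustration of the two-expert split described above, the following Python sketch routes each denoising step to one expert based on the noise level. The function names, the 0-to-1 noise scale, the linear schedule, and the 0.5 boundary are all hypothetical and chosen for illustration; the article does not specify how Wan 2.2 decides when to switch experts.

```python
from typing import List


def pick_expert(noise_level: float, boundary: float = 0.5) -> str:
    """Choose which expert handles one denoising step.

    noise_level: 1.0 means pure noise, 0.0 means a clean video (assumed scale).
    boundary: hypothetical switch point between the two experts.
    """
    if not 0.0 <= noise_level <= 1.0:
        raise ValueError("noise_level must be in [0, 1]")
    # Early, noisy steps shape the overall layout; later steps refine details.
    return "high_noise" if noise_level >= boundary else "low_noise"


def expert_schedule(num_steps: int, boundary: float = 0.5) -> List[str]:
    """List the expert used at each step of a linear noise schedule (assumed)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    # Noise decreases linearly from 1.0 at the first step to 0.0 at the last.
    levels = [1.0 - i / (num_steps - 1) for i in range(num_steps)]
    return [pick_expert(level, boundary) for level in levels]


if __name__ == "__main__":
    for step, expert in enumerate(expert_schedule(5)):
        print(f"step {step}: {expert}")
```

Because only one expert runs at any given step in this sketch, the parameters active per step are roughly half of the total, which is consistent with the 27B total and 14B active figures quoted above.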

First-of-its-kind Film Aesthetics Control System

A major highlight of Wan 2.2 is its first-of-its-kind "film aesthetics control system," which reaches a professional cinematic level in lighting, color, composition, and micro-expressions. Given keywords such as "sunset," "soft light," "rim light," "warm tones," and "center composition," the model can automatically generate romantic scenes bathed in golden sunset light. Given combinations like "cool tones," "hard light," "balanced composition," and "low angle," it can generate visuals resembling science fiction films.

5B Unified Model Deployable on Consumer Graphics Cards

Tongyi Wanxiang also open-sources a compact 5B unified video generation model that supports both text-to-video and image-to-video. The model uses a high-compression 3D VAE architecture with a compression ratio of 4×16×16 (time × height × width) and an information compression rate of 64, both the highest among open-source models.

This model requires only 22GB of VRAM on a single consumer-grade graphics card to generate a 5-second high-definition video within minutes. It is currently the fastest base model for generating 720P video at 24 frames per second, significantly lowering the technical barrier to AI video generation.
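
To make the compression figures above concrete, here is a minimal Python sketch of the arithmetic for a 5-second, 24 fps, 720P clip. It assumes a 1280×720 frame, simple ceiling division along each axis, 3 RGB input channels, and 48 latent channels. None of these details are stated in the article; the latent channel count in particular is an assumption chosen so that the numbers line up with the quoted information compression rate of 64.

```python
import math
from typing import Tuple

# Compression ratios quoted in the article: time x height x width.
T_RATIO, H_RATIO, W_RATIO = 4, 16, 16


def latent_shape(frames: int, height: int, width: int) -> Tuple[int, int, int]:
    """Approximate latent grid size (simplified: plain ceiling division)."""
    return (
        math.ceil(frames / T_RATIO),
        math.ceil(height / H_RATIO),
        math.ceil(width / W_RATIO),
    )


def position_reduction() -> int:
    """How many pixel positions collapse into one latent position."""
    return T_RATIO * H_RATIO * W_RATIO


def information_compression_rate(in_channels: int, latent_channels: int) -> float:
    """Ratio of input values to latent values, accounting for channel counts."""
    return position_reduction() * in_channels / latent_channels


if __name__ == "__main__":
    frames = 5 * 24  # 5 seconds at 24 frames per second
    print(latent_shape(frames, 720, 1280))
    print(position_reduction())
    # 3 RGB channels in, 48 latent channels out (assumed, not from the article).
    print(information_compression_rate(3, 48))
```

Under these assumptions, the 120 input frames map to a much smaller latent grid, which is the main reason a model of this size can fit on a single consumer graphics card.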

Open Access Through Multiple Channels

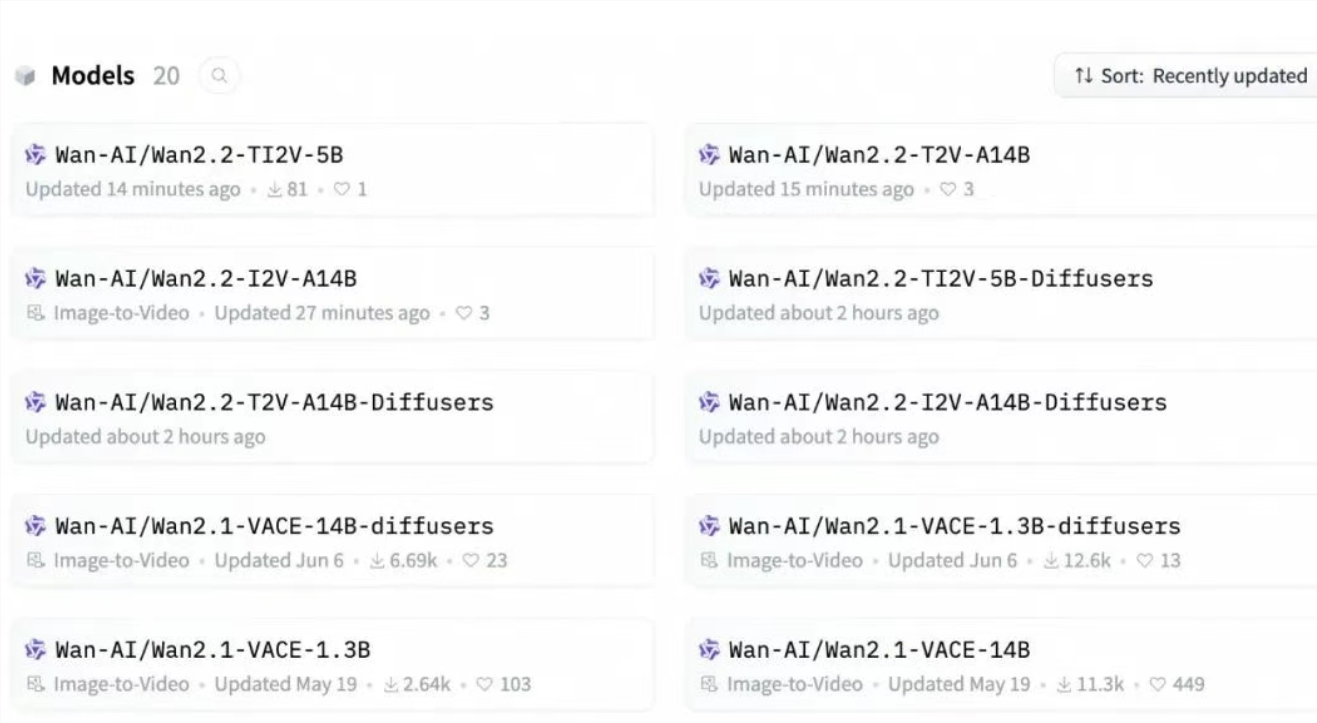

Developers can download the models and code from GitHub, HuggingFace, and the ModelScope community, while enterprises can call the model API through Alibaba Cloud Bailian. General users can also try it directly on the Tongyi Wanxiang website and in the Tongyi app.

Since February this year, Tongyi Wanxiang has open-sourced a series of models, including text-to-video, image-to-video, first-and-last-frame-to-video, and all-in-one editing models. Together they have been downloaded more than 5 million times in the open-source community, making an important contribution to the popularization and development of AI video generation technology.