Zhipu AI today officially launched GLM-4.5, its next-generation flagship model. GLM-4.5 is a foundation model designed specifically for agent applications. It is open-sourced on Hugging Face and ModelScope, with the model weights released under the MIT License.

Open-Source SOTA Performance, Leading Among Domestic Models

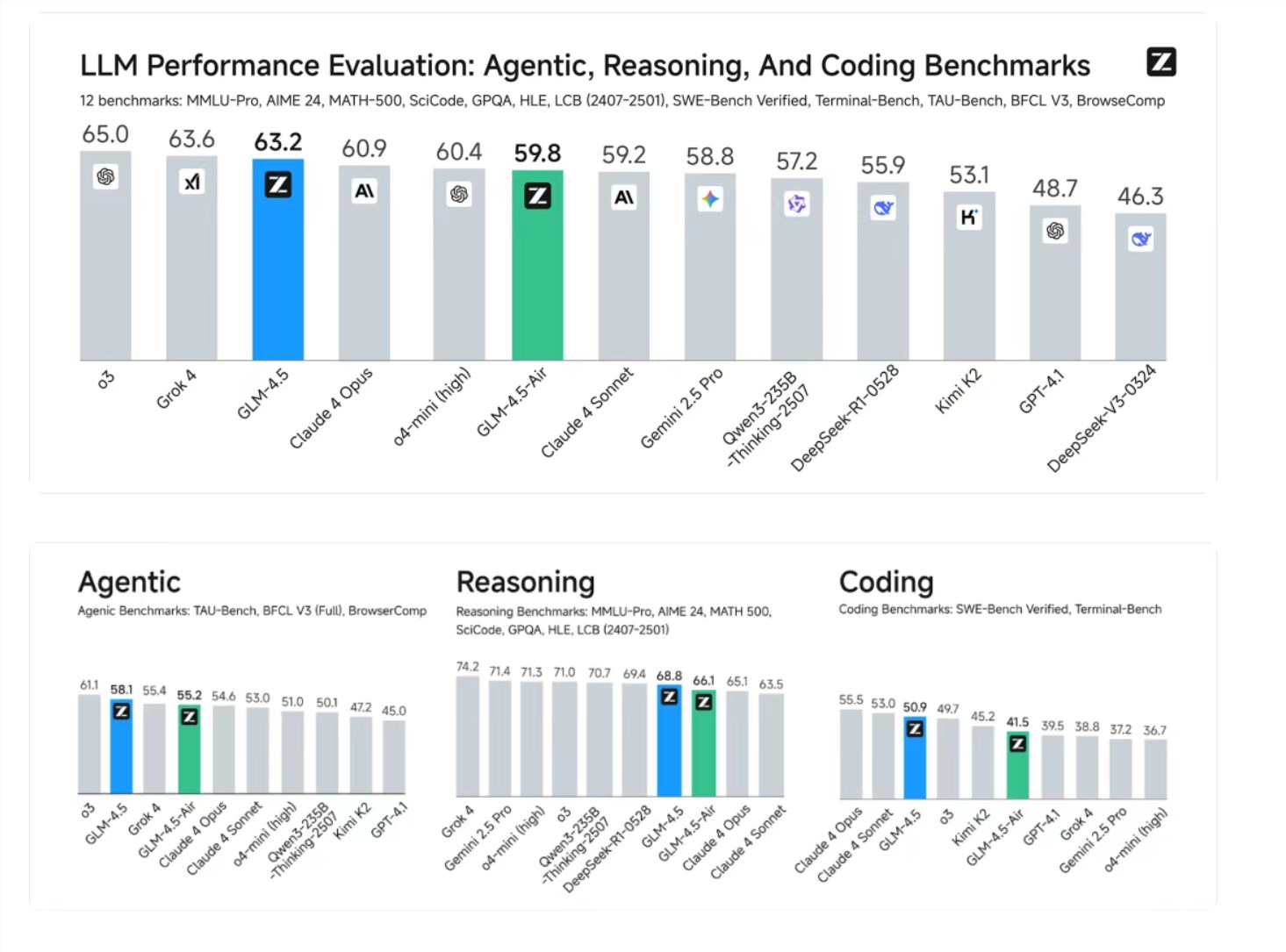

GLM-4.5 achieves state-of-the-art (SOTA) performance among open-source models in reasoning, coding, and agent capabilities. In real-world agent evaluations, it performed best among domestic (Chinese) models. In an overall assessment across 12 representative benchmarks, including MMLU Pro, AIME24, MATH500, and SciCode, GLM-4.5 ranked third globally, first among domestic models, and first among open-source models.

Natively Integrated Core Capabilities

GLM-4.5 is the first model to natively integrate reasoning, coding, and agent capabilities, meeting the complex needs of agent applications. Zhipu AI presents this as an application of what it calls the first principle of AGI: integrating more general intelligence capabilities without sacrificing existing ones.

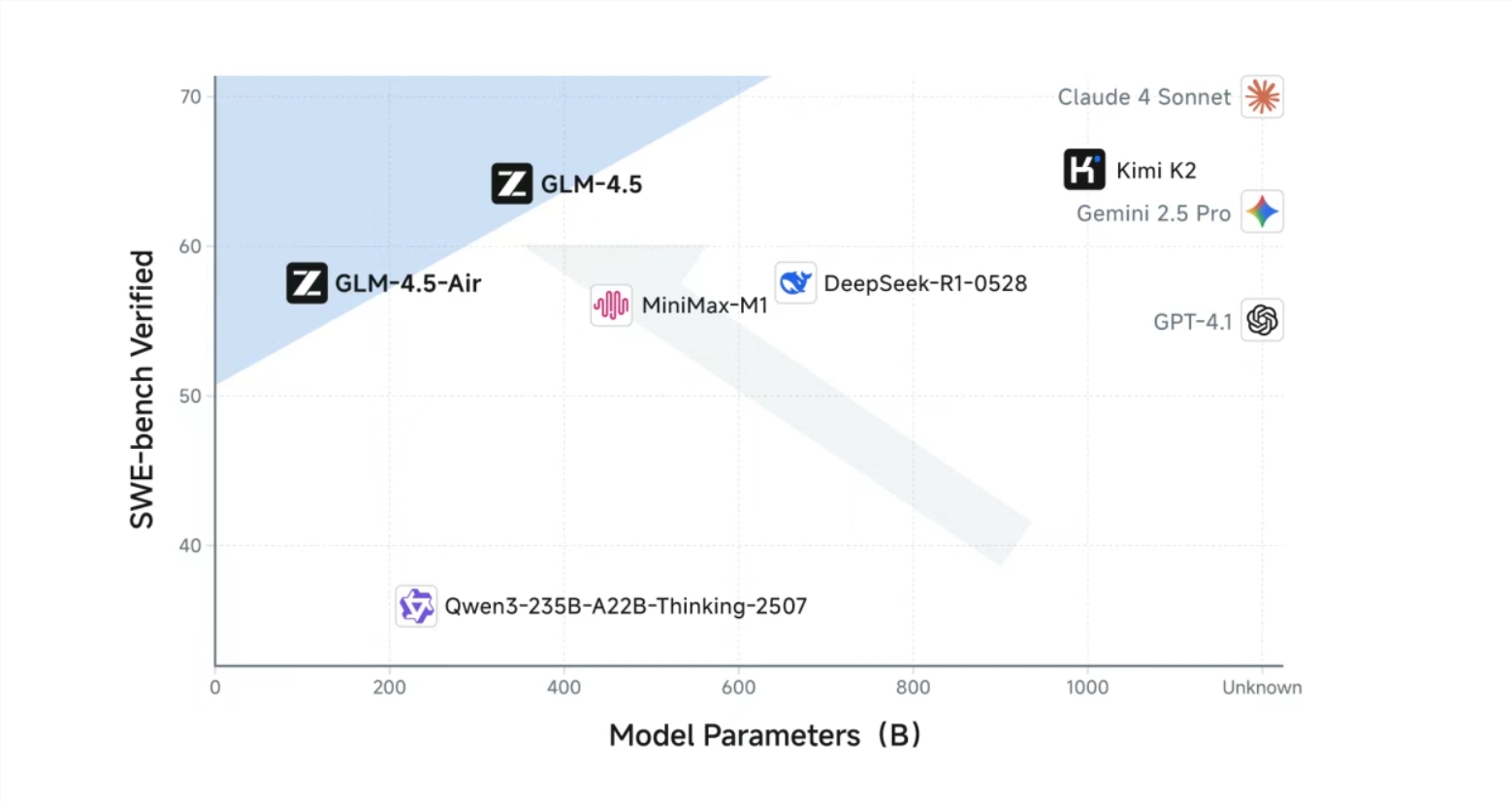

High-Parameter-Efficiency MoE Architecture

GLM-4.5 adopts a mixture-of-experts (MoE) architecture and comes in two versions. GLM-4.5 has 355 billion total parameters, of which 32 billion are activated; GLM-4.5-Air has 106 billion total parameters, of which 12 billion are activated. Notably, GLM-4.5 has roughly half the parameters of DeepSeek-R1 and roughly one-third of Kimi-K2, yet it performs better on multiple standard benchmarks. On the SWE-bench Verified leaderboard, it sits on the Pareto frontier of performance versus parameter count.
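To make the parameter-efficiency figures concrete, the short sketch below computes what share of each model's parameters is activated per token. It uses only the parameter counts quoted above; the helper name `active_fraction` is illustrative and not part of any GLM tooling.

```python
# Parameter counts in billions, taken from the figures quoted in this article.
MODELS = {
    "GLM-4.5": {"total_b": 355, "active_b": 32},
    "GLM-4.5-Air": {"total_b": 106, "active_b": 12},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the share of a model's parameters activated for a single token."""
    return active_b / total_b


for name, params in MODELS.items():
    frac = active_fraction(params["total_b"], params["active_b"])
    print(f"{name}: {frac:.1%} of parameters active per token")
# GLM-4.5: 9.0% of parameters active per token
# GLM-4.5-Air: 11.3% of parameters active per token
```

In other words, only about a tenth of the weights take part in computing any single token, which is where the parameter-efficiency claim for the MoE design comes from.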

Two-Mode Design and Training Process

The model supports two operating modes: a thinking mode for complex reasoning and tool use, and a non-thinking mode for instant responses. Training proceeds in three stages: pre-training on 15 trillion tokens of general data, targeted training on 8 trillion tokens of data in areas such as code, reasoning, and agents, and finally reinforcement learning to further enhance the model's capabilities.
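As a quick check on the scale of the two data stages, the sketch below adds up the token counts quoted above and shows how they split. The reinforcement learning stage is left out because the article gives no token count for it; the variable names are illustrative.

```python
# Token counts in trillions, as quoted in this article.
GENERAL_TOKENS_T = 15   # general pre-training data
TARGETED_TOKENS_T = 8   # code, reasoning, and agent data

total_tokens_t = GENERAL_TOKENS_T + TARGETED_TOKENS_T
targeted_share = TARGETED_TOKENS_T / total_tokens_t

print(f"Total before reinforcement learning: {total_tokens_t} trillion tokens")
print(f"Targeted data share: {targeted_share:.1%}")
# Total before reinforcement learning: 23 trillion tokens
# Targeted data share: 34.8%
```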

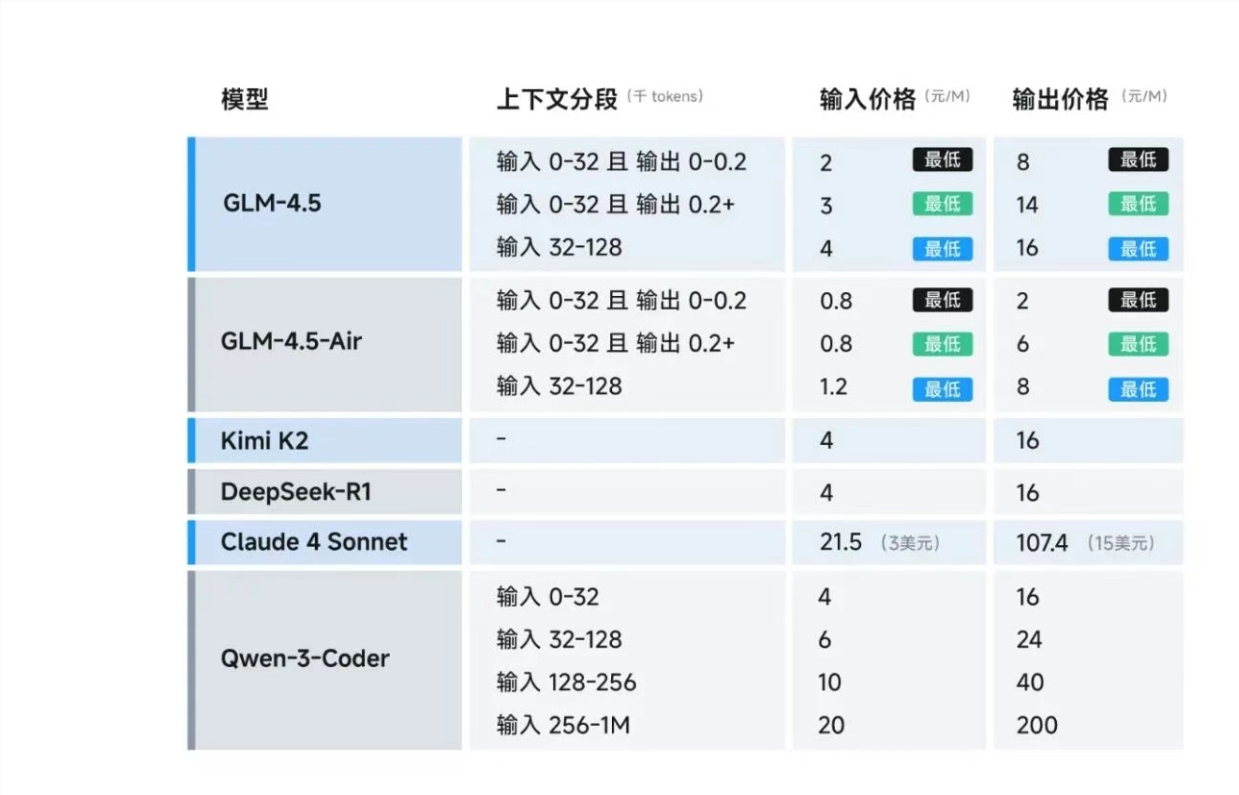

Cost-Performance That Undercuts Industry Pricing

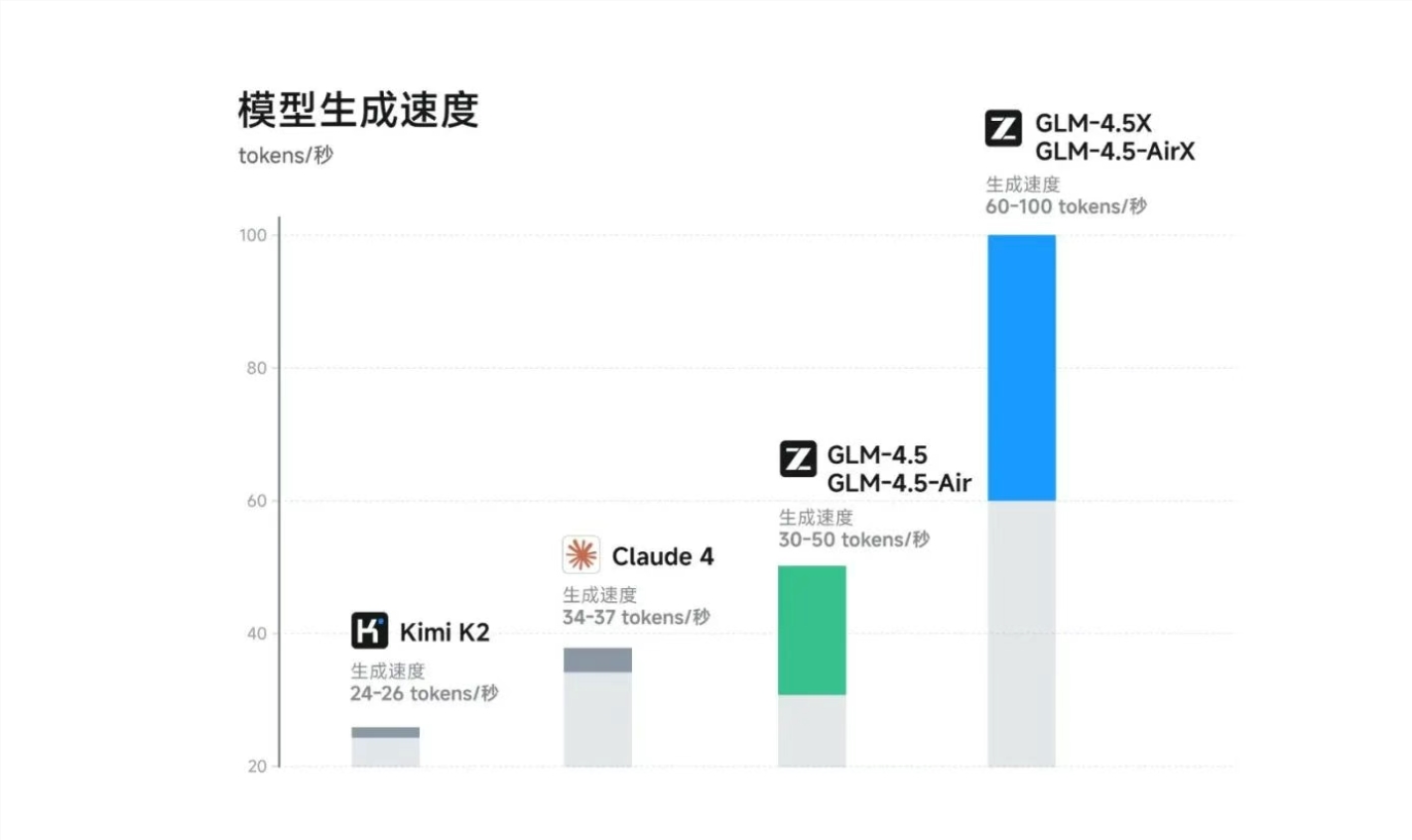

The GLM-4.5 series also makes significant gains in cost and efficiency, with API prices far below those of mainstream models: input costs as little as 0.8 yuan per million tokens, and output costs as little as 2 yuan per million tokens. The high-speed version reaches a measured generation speed of up to 100 tokens per second, supporting low-latency, high-concurrency production deployments.
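The sketch below turns the quoted prices and speed into a per-request estimate. It assumes flat per-token rates equal to the figures above and treats 100 tokens per second as a best-case speed; actual billing tiers and throughput may differ, and the function names are hypothetical rather than part of any Zhipu SDK.

```python
# Prices quoted in this article, in yuan per one million tokens.
# Assumption: flat rates with no tiers or discounts.
INPUT_YUAN_PER_M = 0.8
OUTPUT_YUAN_PER_M = 2.0
# Peak measured speed quoted for the high-speed version, in tokens per second.
PEAK_TOKENS_PER_SEC = 100


def estimate_cost_yuan(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in yuan under the flat-rate assumption."""
    total = input_tokens * INPUT_YUAN_PER_M + output_tokens * OUTPUT_YUAN_PER_M
    return total / 1_000_000


def min_generation_seconds(output_tokens: int) -> float:
    """Lower bound on generation time at the quoted peak speed."""
    return output_tokens / PEAK_TOKENS_PER_SEC


# Example: an agent step with a 50,000-token context and a 2,000-token reply.
cost = estimate_cost_yuan(50_000, 2_000)
seconds = min_generation_seconds(2_000)
print(f"Estimated cost: {cost:.3f} yuan, at least {seconds:.0f} s to generate")
# Estimated cost: 0.044 yuan, at least 20 s to generate
```

Under these assumptions, long-context agent calls are dominated by input cost, while latency is driven by the length of the generated output.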

Full-Stack Development Capabilities and Ecosystem Compatibility

The GLM-4.5 series can handle full-stack development tasks, including front-end website development, back-end database management, and tool calling through standard interfaces. The model is deeply optimized for full-stack programming and tool calling. It is compatible with major code agent frameworks such as Claude Code, Cline, and Roo Code, and offers one-click compatibility with Claude Code.

Multi-Platform Experience and Transparent Evaluation

Users can call the API through the BigModel.cn open platform, or try the full feature set for free on Zhipu Qingyan (chatglm.cn) and z.ai. To keep the evaluation transparent, Zhipu AI has published 52 questions and agent trajectories so that the industry can verify and reproduce the results.

The release of GLM-4.5 provides a new technical foundation for agent application development. Its natively integrated core capabilities, strong cost-performance, and broad ecosystem compatibility are expected to drive the large-scale adoption and commercial value of AGI technology across industries.