Zhipu recently launched its latest flagship model, GLM-4.5, which it describes as "a foundation model built specifically for agent applications." According to Zhipu, GLM-4.5 achieves state-of-the-art (SOTA) performance among open-source models in reasoning, code generation, and overall agent capabilities. The release highlights how quickly Chinese large-model developers are advancing in open source, and it gives developers a powerful new tool.

GLM-4.5 uses a mixture-of-experts (MoE) architecture and comes in two versions: GLM-4.5, with 355 billion total parameters, and GLM-4.5-Air, with 106 billion total parameters. Both versions have been open-sourced on Hugging Face and ModelScope under the MIT license, which permits unrestricted commercial use.
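The article does not describe GLM-4.5's router, so the following is only a generic sketch of top-k softmax gating, the textbook MoE pattern, and not Zhipu's implementation. It shows why an MoE model's total parameter count is larger than the parameters it actually uses per token: a small router scores every expert, but only the top-k experts run. The function names, the expert count (8), and `top_k=2` are hypothetical illustration values, not GLM-4.5's configuration.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector],
                experts: List[Expert], top_k: int = 2) -> Vector:
    """Toy top-k MoE layer (hypothetical, for illustration only)."""
    # Router: one score per expert, the dot product of x with that expert's gate vector.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the top-k experts; the others are never evaluated (sparse activation).
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores so the mixing weights sum to 1.
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * y_j for o, y_j in zip(out, y)]
    return out


if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(i: int, scale: float) -> Expert:
        def expert(v: Vector) -> Vector:
            calls.append(i)  # record which experts actually run
            return [scale * vi for vi in v]
        return expert

    experts = [make_expert(i, float(i + 1)) for i in range(8)]
    random.seed(0)
    gates = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
    moe_forward([0.5, -1.0, 0.3, 2.0], gates, experts, top_k=2)
    print(len(calls), "of", len(experts), "experts evaluated")  # 2 of 8
```

In this toy, only 2 of 8 experts run for a given input, so roughly a quarter of the expert parameters are active per token; real MoE models also include shared, always-active layers such as attention, so the actual active fraction differs.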

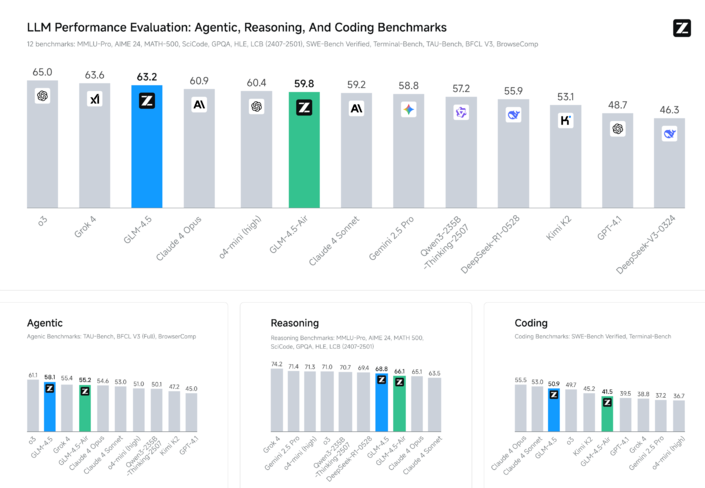

For evaluation, Zhipu tested the model on 12 representative benchmarks. In Zhipu's comparison, GLM-4.5 ranked second overall among all models tested, first among Chinese models, and first among open-source models. Although it still trails models such as Claude-4-Sonnet in some areas, it performed especially well on tool-call reliability and task completion.

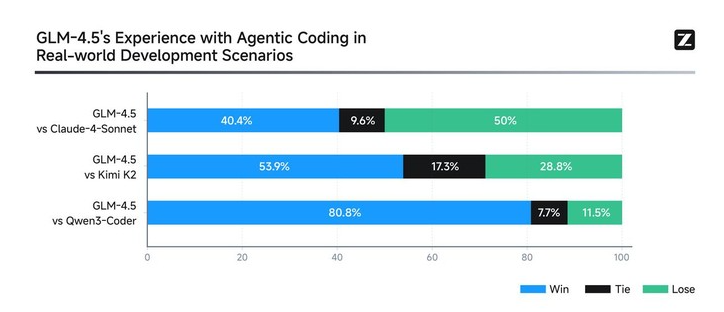

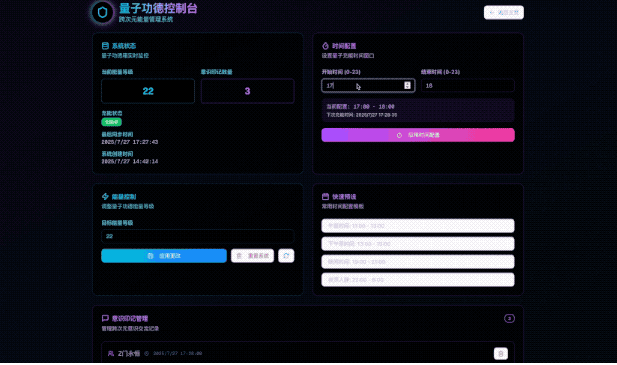

Zhipu also ran a series of software development tasks with GLM-4.5 plugged into coding agents such as Claude Code, demonstrating its capabilities in real-world scenarios. For example, a prompt as simple as "build a Google search website" was enough for GLM-4.5 to generate a working search website. The model can also build an interactive 3D Earth page where users click on locations to see detailed information.

On the technical side, GLM-4.5 has roughly half as many parameters as DeepSeek-R1 and about one-third as many as Kimi-K2, yet it delivers comparable performance, which Zhipu attributes to higher parameter efficiency. The model was pre-trained on 15 trillion tokens of general-domain data, followed by targeted training in areas such as code and reasoning.

The GLM-4.5 API is also inexpensive: input costs only 0.8 yuan per million tokens and output 2 yuan per million tokens, while the high-speed version generates up to 100 tokens per second. This pricing makes GLM-4.5 highly competitive in the market.
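To make these prices concrete, here is a minimal cost-estimation sketch. It assumes the flat per-token rates quoted above apply to the whole workload (actual billing may be tiered or change over time), and the workload size and helper names are hypothetical.

```python
# Rates quoted in the article (yuan per million tokens); assumed flat for this sketch.
INPUT_PRICE_PER_M = 0.8
OUTPUT_PRICE_PER_M = 2.0
# Peak generation speed of the high-speed version, in tokens per second.
HIGH_SPEED_TOKENS_PER_SEC = 100


def estimate_cost_yuan(input_tokens: int, output_tokens: int) -> float:
    """Estimated API cost in yuan for a given number of input and output tokens."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def min_generation_seconds(output_tokens: int,
                           tokens_per_sec: float = HIGH_SPEED_TOKENS_PER_SEC) -> float:
    """Lower bound on generation time at peak speed (ignores network and prompt latency)."""
    return output_tokens / tokens_per_sec


if __name__ == "__main__":
    # Hypothetical workload: 1,000 agent calls, each with 3,000 input and 1,000 output tokens.
    calls, inp, out = 1_000, 3_000, 1_000
    total = estimate_cost_yuan(calls * inp, calls * out)
    print(f"Estimated cost: {total:.2f} yuan")  # 2.40 (input) + 2.00 (output) = 4.40
    print(f"Per-call generation time at peak speed: {min_generation_seconds(out):.0f} s")  # 10 s
```

Under these assumptions, a thousand sizable agent calls cost only a few yuan, and each 1,000-token answer takes at least about 10 seconds to generate at the high-speed version's peak rate.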

Zhipu's steady growth and continued innovation in AI have made it a strong contender to be the first of China's "Six Little Dragons of AI" to go public.