Xiaohongshu Hi Lab has released and open-sourced dots.vlm1, its first in-house multimodal large model. The model pairs a 1.2-billion-parameter NaViT visual encoder, trained from scratch, with the DeepSeek V3 large language model. Its performance in multimodal visual understanding and reasoning is close to that of leading closed-source models such as Gemini 2.5 Pro and Seed-VL1.5, setting a new high point for open-source multimodal models.

In-House Innovation, Leading Performance

The core highlight of dots.vlm1 is its in-house NaViT visual encoder. Rather than fine-tuning an existing vision model, the team trained NaViT from scratch with native support for dynamic resolution, so it adapts better to the varied image sizes and aspect ratios found in real-world scenarios. The model also improves its generalization by combining purely visual supervision with text-visual supervision, and it performs especially well on structured images that differ from natural photos, such as tables, charts, formulas, and documents.
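To make "dynamic resolution" concrete, here is a minimal sketch of how a NaViT-style encoder can handle images at their native size: each image becomes a variable-length sequence of patches, and several such sequences can be packed together up to a token budget. The patch size of 14, the helper names `token_count` and `pack_images`, and the greedy first-fit packing are illustrative assumptions, not details confirmed for dots.vlm1.

```python
import math


def token_count(height, width, patch_size=14):
    """Number of patch tokens for an image kept at its native resolution.

    patch_size=14 is an assumed value for illustration only.
    """
    if height <= 0 or width <= 0 or patch_size <= 0:
        raise ValueError("height, width and patch_size must be positive")
    rows = math.ceil(height / patch_size)  # partial patches are rounded up
    cols = math.ceil(width / patch_size)
    return rows * cols


def pack_images(sizes, max_tokens, patch_size=14):
    """Greedy first-fit packing of variable-length patch sequences.

    sizes: list of (height, width) tuples.
    Returns a list of packed sequences, each a list of image indices
    whose combined token count does not exceed max_tokens.
    """
    sequences = []  # image indices per packed sequence
    used = []       # tokens already used in each packed sequence
    for idx, (h, w) in enumerate(sizes):
        n = token_count(h, w, patch_size)
        if n > max_tokens:
            raise ValueError(f"image {idx} needs {n} tokens, budget is {max_tokens}")
        for s in range(len(sequences)):
            if used[s] + n <= max_tokens:
                sequences[s].append(idx)
                used[s] += n
                break
        else:
            # No existing sequence has room, so start a new one.
            sequences.append([idx])
            used.append(n)
    return sequences
```

In this sketch a tall 448×224 image simply yields twice as many tokens as a 224×224 one instead of being resized or cropped to a fixed square, which is the property that helps with documents, charts, and other images with unusual aspect ratios.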

On the data side, the Hi Lab team built a large, carefully cleaned training dataset. To improve image-text alignment, they rewrote web data themselves and processed PDF documents with their in-house dots.ocr tool, laying a solid foundation for the model's cross-modal understanding.

Evaluation Performance, Comparable to Top Closed-Source Models

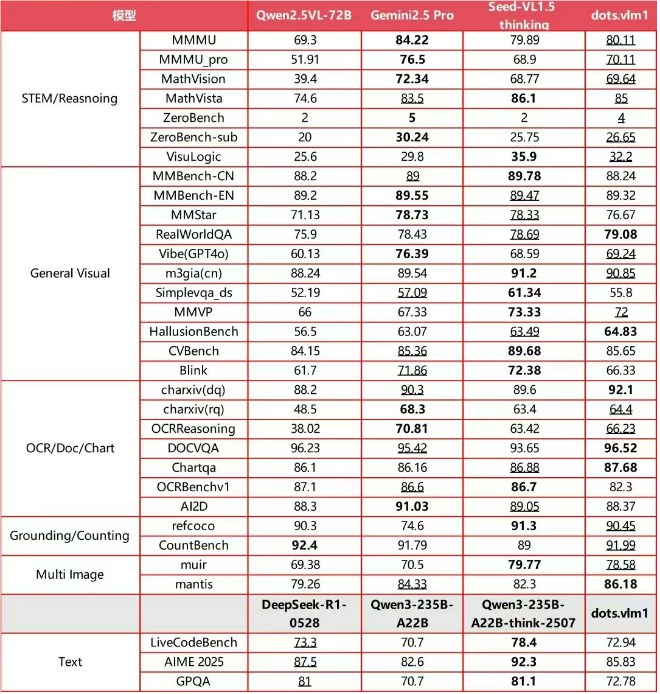

On mainstream international multimodal benchmarks, dots.vlm1 delivers strong overall results. It reaches levels comparable to Gemini 2.5 Pro and Seed-VL1.5 on several benchmarks, including MMMU, MathVision, and OCR Reasoning. In complex chart reasoning, STEM mathematical reasoning, and recognition of long-tail niche scenarios, dots.vlm1 shows strong logical reasoning and analytical capabilities and can handle high-difficulty tasks such as Olympiad-level math problems.

Although it still lags behind state-of-the-art closed-source models on extremely complex text reasoning tasks, its general mathematical reasoning and coding capabilities are already on par with mainstream large language models.

The Hi Lab team says it will continue to optimize the model. It plans to expand the scale of its cross-modal data and introduce techniques such as reinforcement learning to further improve how well the model's reasoning generalizes. By open-sourcing dots.vlm1, Xiaohongshu aims to bring new momentum to the multimodal large model ecosystem and support the industry's development.