In August 2025, Intel announced the latest software update for its "Battle Matrix" project and launched the LLM-Scaler 1.0 container, which optimizes AI inference on Intel Arc B-series GPUs.

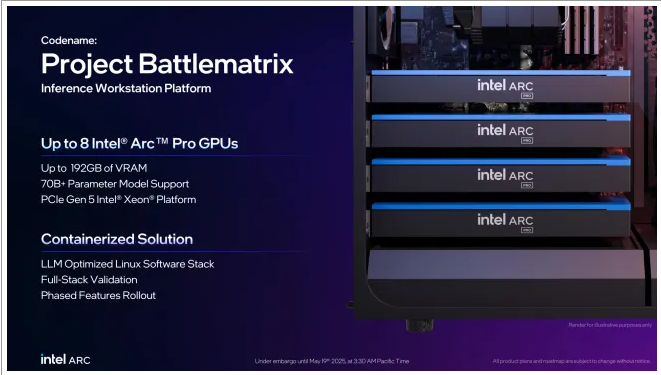

Intel first announced the "Battle Matrix" project in May 2025. The project aims to support up to eight Intel Arc Pro GPUs for AI inference, and its roadmap includes features such as SR-IOV support and improved vLLM performance. Intel's goal is to make the product available in the third quarter and to deliver full functionality by the end of the year.

Intel describes LLM-Scaler 1.0 as "a new containerized solution built for Linux environments, optimized to provide exceptional inference performance, supporting multi-GPU scaling and PCIe point-to-point data transfer, and designed with enterprise-level reliability and manageability features including ECC, SR-IOV, telemetry, and remote firmware updates." The release also adds vLLM performance optimizations, several new vLLM features, and better support for multimodal models.

The LLM-Scaler 1.0 container also includes oneCCL benchmark support and XPU Manager integration, which makes a range of GPU telemetry functions easier to access. Several other features have also been enhanced.

In its official announcement on Intel's website, the company said that a more stable version of LLM Scaler, along with additional features, is expected by the end of the third quarter. The full-feature release is still planned for the fourth quarter.

Key Points:

🌟 Intel released the LLM-Scaler 1.0 container, which optimizes AI inference performance on Arc B-series GPUs.

💻 The new version supports multi-GPU scaling and PCIe point-to-point data transfer, and adds enterprise-level reliability and manageability features.

📈 A more stable version with new features is expected by the end of the third quarter, and the full release is planned for the fourth quarter.