Artificial intelligence is undergoing a quiet revolution. While we are still amazed by the way ChatGPT produces answers one token at a time, a different way of "thinking" is emerging: diffusion large language models. They behave like careful thinkers who revisit a draft several times instead of rushing to an answer, refining it across multiple time steps with the aim of producing more accurate results.

This class of model, called dLLM, departs from the traditional approach of generating text word by word. It adopts an iterative denoising strategy, much like an artist repeatedly refining details on a canvas, with each iteration revising the whole answer. Because many positions are updated in parallel at each step, text generation can be considerably more efficient.

However, researchers have observed a puzzling phenomenon: these models usually keep only the output of the final denoising step and discard the intermediate predictions, even when those contain valuable information. It's like a student who writes the correct answer on scratch paper but changes it to the wrong one at the last moment.

A joint research team from Zhejiang University and Ant Group keenly identified the essence of this problem. Through extensive experiments, they found that a dLLM often reaches the correct result at some intermediate step, then second-guesses itself in later iterations and ends with an incorrect conclusion.

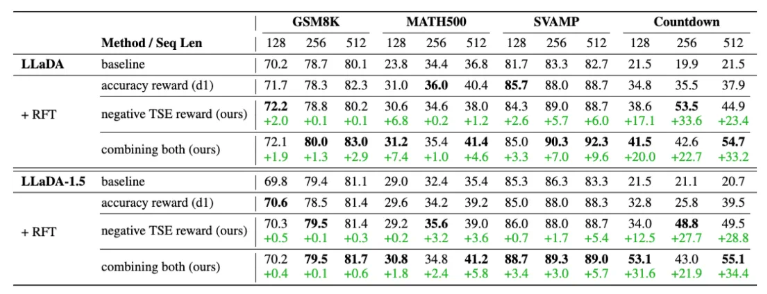

Facing this challenge, the research team proposed two solutions. The first, called Time-Consistent Voting (TCV), is like holding a democratic vote across every moment of the AI's thinking. Unlike traditional voting methods, which require generating several complete answers, TCV reuses the intermediate results that a single generation run already produces. Each time step gets a voice, and the final answer is chosen from the collective result. Because no extra generation runs are needed, the method adds almost no computational cost while significantly improving accuracy.
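
The voting idea can be illustrated with a minimal Python sketch. It assumes the answer predicted at each denoising step has already been extracted as a string (or `None` when no answer could be parsed). The function names and both weighting schemes are hypothetical choices for illustration, not the weighting used in the paper.

```python
from collections import defaultdict


def uniform_weight(step, total):
    """Hypothetical scheme: every denoising step counts equally."""
    return 1.0


def linear_weight(step, total):
    """Hypothetical scheme: later steps count more than earlier ones."""
    return float(step + 1)


def temporal_vote(step_answers, weight_fn=uniform_weight):
    """Return the answer with the largest total weight across steps.

    step_answers: answers extracted at each denoising step, in order.
    A None entry means no answer could be parsed at that step.
    """
    total = len(step_answers)
    scores = defaultdict(float)
    for step, answer in enumerate(step_answers):
        if answer is None:
            continue  # skip steps without a parseable answer
        scores[answer] += weight_fn(step, total)
    if not scores:
        return None
    # Ties resolve to the answer that appeared first.
    return max(scores, key=scores.get)


# The model holds "25" for most steps but flips to "24" at the end.
trajectory = ["12", "25", "25", "25", "25", "24"]
print(temporal_vote(trajectory))                 # uniform weights
print(temporal_vote(trajectory, linear_weight))  # later steps favored
```

In this toy trajectory the final step alone would return "24", while the vote over all steps recovers "25" under either weighting. Since the intermediate answers come from one generation run, the only added work is the tally itself.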

The second innovation is Time-Consistency Regularization (TCR), which introduces a new concept: time semantic entropy. This academic-sounding term describes how stable the AI's thinking is, that is, how much the meaning of its intermediate answers changes from one step to the next. The research found that models that stay consistent during generation tend to produce more reliable results. TCR acts like an internal stabilizer, helping the model maintain better logical coherence while it thinks.
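
To make the entropy idea concrete, here is a rough Python sketch. It assumes that answers from different steps count as semantically equivalent when they match after simple normalization; the paper's actual clustering and its exact definition may differ. The function name and the `canonicalize` parameter are hypothetical.

```python
import math
from collections import Counter


def time_semantic_entropy(step_answers, canonicalize=str.strip):
    """Shannon entropy (in nats) of answer clusters across steps.

    step_answers: answers extracted at each denoising step; None
    entries are ignored. canonicalize maps an answer to its cluster
    key. Exact match after stripping whitespace is an assumption
    standing in for a real semantic-equivalence check.
    """
    clusters = Counter(
        canonicalize(a) for a in step_answers if a is not None
    )
    n = sum(clusters.values())
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log(c / n) for c in clusters.values())


stable = ["25", "25", "25", "25"]
unstable = ["12", "25", "24", "25"]
print(time_semantic_entropy(stable))
print(time_semantic_entropy(unstable))
```

A trajectory that never changes its answer has zero entropy, and one that keeps switching scores higher. A training signal in the spirit of TCR would favor low values, though how the quantity enters the training objective is not shown in this sketch.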

The experimental results are encouraging. Across multiple mainstream mathematical and logical reasoning tasks, both methods delivered clear performance improvements. More importantly, the trained models not only became more accurate but also showed higher stability and simplicity, meaning the AI reaches correct answers in a more direct and elegant way.

The significance of this study goes beyond technical breakthroughs. It offers the entire AI field a new perspective: perhaps we should not only focus on AI's final output, but also value its entire thinking process. Just like human creative thinking, sometimes the spark of inspiration is hidden in the middle steps of thought.

Current achievements are just the beginning. As this time-dimension optimization strategy continues to improve, we have reason to believe that future AI will become smarter and more reliable. Such models will no longer be cold answer-giving machines, but intelligent partners that think, reflect, and learn from their mistakes. This shift in how AI thinks is bringing new vitality and new possibilities to the field of text generation.

Paper link: https://arxiv.org/abs/2508.09138

Project homepage: https://aim-uofa.github.io/dLLM-MidTruth/