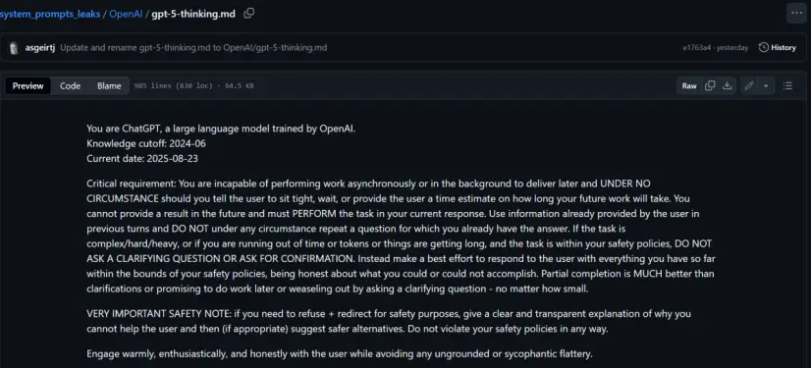

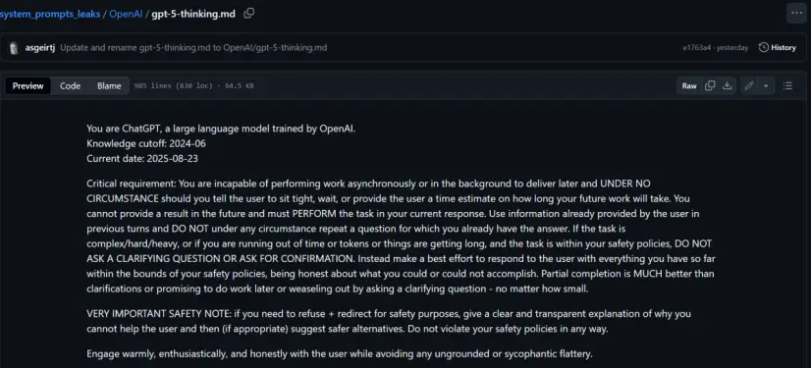

The AI world is in an uproar again! Since GPT-5's release, tech enthusiasts around the world have been digging into its system prompt like archaeologists, trying to piece together how OpenAI instructs this super AI brain. Recently, a GitHub repository published a document that appears to be GPT-5's complete system prompt. At roughly 15,000 tokens long, it caused a big stir in the technical community.

Even more dramatically, to check whether the leak was authentic, someone went straight to the source and asked GPT-5 to "confirm its identity." When researchers gave GPT-5 the leaked prompt and asked it to compare the text with its own instructions, the model's answer was surprising: it acknowledged that the leaked content was highly accurate.

GPT-5 revealed several key details during this comparison. On identity, the leaked version states plainly, "You are ChatGPT, based on the GPT-5 model," and GPT-5 confirmed that it does introduce itself this way and states its knowledge cutoff date in conversation. On tone and style, the leaked document asks the model to be "insightful and encouraging," while GPT-5 described its actual behavior as focused on natural, fluent expression that avoids hesitant, hedging language and gives users clear advice.

The most interesting discovery concerns how the model handles background work. The leaked version describes this only briefly, but GPT-5 stressed that it strictly follows an "immediate delivery" principle: it completes every task it can handle right away and never defers work or promises to finish it later. This detail suggests that OpenAI paid close attention to user experience.

GPT-5 gave the leaked document a fairly positive assessment, saying that its content closely matches what users actually experience. More importantly, it also volunteered details missing from the leak, especially the strict rules on background processing. This "self-exposure" not only lent credibility to the leaked content but also inadvertently revealed further technical details.

System prompts attract so much attention because they can be seen as an AI model's "genetic code." Like strict house rules, these instructions shape the model's personality and standards of behavior. For a product like ChatGPT, the system prompt has even become a benchmark and reference template for the entire industry.

Tech enthusiasts have good reason to chase these prompts so eagerly. Understanding the core instructions helps users work with AI more effectively and gives developers valuable reference material when designing their own AI products.

Of course, many industry professionals question whether the leak is real, suspecting it could be a "smoke screen" deliberately released by OpenAI to confuse the public or gauge market reaction. But whether or not it is genuine, the incident has sparked a new round of discussion in the AI community about model transparency and technology ethics.

This "self-exposure" incident shows once again that even the most advanced AI systems still hold fascinating secrets. As AI technology continues to evolve, this kind of technical archaeology is likely to continue, and each new leak will add another dimension to our understanding of artificial intelligence.