Recently, the Institute of Automation of the Chinese Academy of Sciences, in collaboration with Muxi MetaX, launched "Shunxi 1.0" (SpikingBrain-1.0), described as the world's first brain-inspired spiking large model, marking an important step forward for China in large model technology. The entire pipeline is built on domestic technology, and the model shows significant efficiency gains in long-sequence inference.

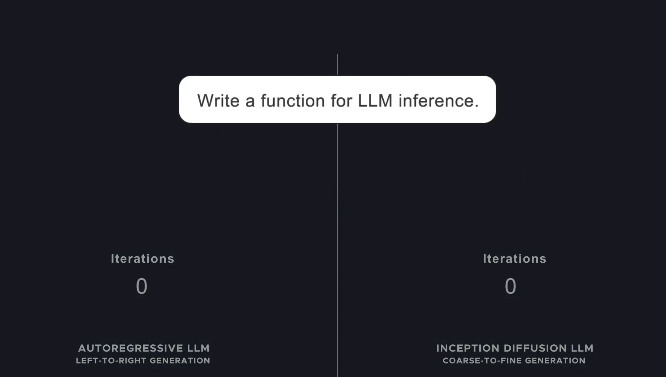

"Shunxi 1.0" is based on in-depth research into the complex workings of brain neurons, and proposes a large model architecture called "Intrinsic Complexity". The model establishes a connection between the intrinsic dynamics of spiking neurons and linear attention models, pointing to a new path for the development of large models. Professor Li Guoqi stated that the release of this achievement not only demonstrates China's innovative capabilities in combining brain-inspired computing with large models, but also provides new ideas for future neuromorphic computing and chip design.

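The link between linear attention and a neuron-like running state can be illustrated with a small sketch. This is not the SpikingBrain-1.0 implementation: the function names are hypothetical, and the unnormalized attention with an identity feature map is a simplifying assumption. The sketch only shows why linear attention suits long sequences: the same causal output can be computed either by revisiting every earlier token, or by updating a fixed-size state once per token, much as a neuron carries its internal state forward in time.

```python
def causal_linear_attention_quadratic(qs, ks, vs):
    """Reference form: for each step t, sum (q_t . k_s) * v_s over all s <= t."""
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for s in range(t + 1):
            weight = sum(qi * ki for qi, ki in zip(q, ks[s]))
            for j, vj in enumerate(vs[s]):
                out[j] += weight * vj
        outputs.append(out)
    return outputs


def causal_linear_attention_recurrent(qs, ks, vs):
    """Same result using a fixed-size state (d_k x d_v) updated once per token."""
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # State update: S <- S + k v^T (cost does not depend on sequence length)
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # Readout: o = q^T S
        outputs.append(
            [sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)]
        )
    return outputs


# Toy inputs (illustrative values only): three tokens, d_k = 2, d_v = 1
qs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ks = [[1.0, 2.0], [0.0, 1.0], [3.0, 1.0]]
vs = [[1.0], [2.0], [3.0]]

quadratic_out = causal_linear_attention_quadratic(qs, ks, vs)
recurrent_out = causal_linear_attention_recurrent(qs, ks, vs)
print(quadratic_out == recurrent_out)
```
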
The research team has open-sourced the "Shunxi 1.0-7B" model and opened a test website for "Shunxi 1.0-76B". The models have been widely validated in industry and show potential for efficient use in fields such as law and medicine. The team has also developed an efficient training and inference framework for the Muxi MetaX Xi Cloud C550 GPU cluster, making large model deployment smoother and more efficient.

With the launch of "Shunxi 1.0", this advance in China's large model technology is expected to substantially improve the efficiency of long-sequence modeling across industries and to promote further development of related technologies. The technology may see wide application in fields such as law, medicine, and scientific simulation, opening up more possibilities for innovation.