Anthropic is promoting the potential of its large language models (LLMs) in cybersecurity, citing real-world data that shows how quickly AI is improving at discovering software vulnerabilities. The company points to results on the CyberGym benchmark rankings as evidence that its latest model is a significant step forward for cyber defense.

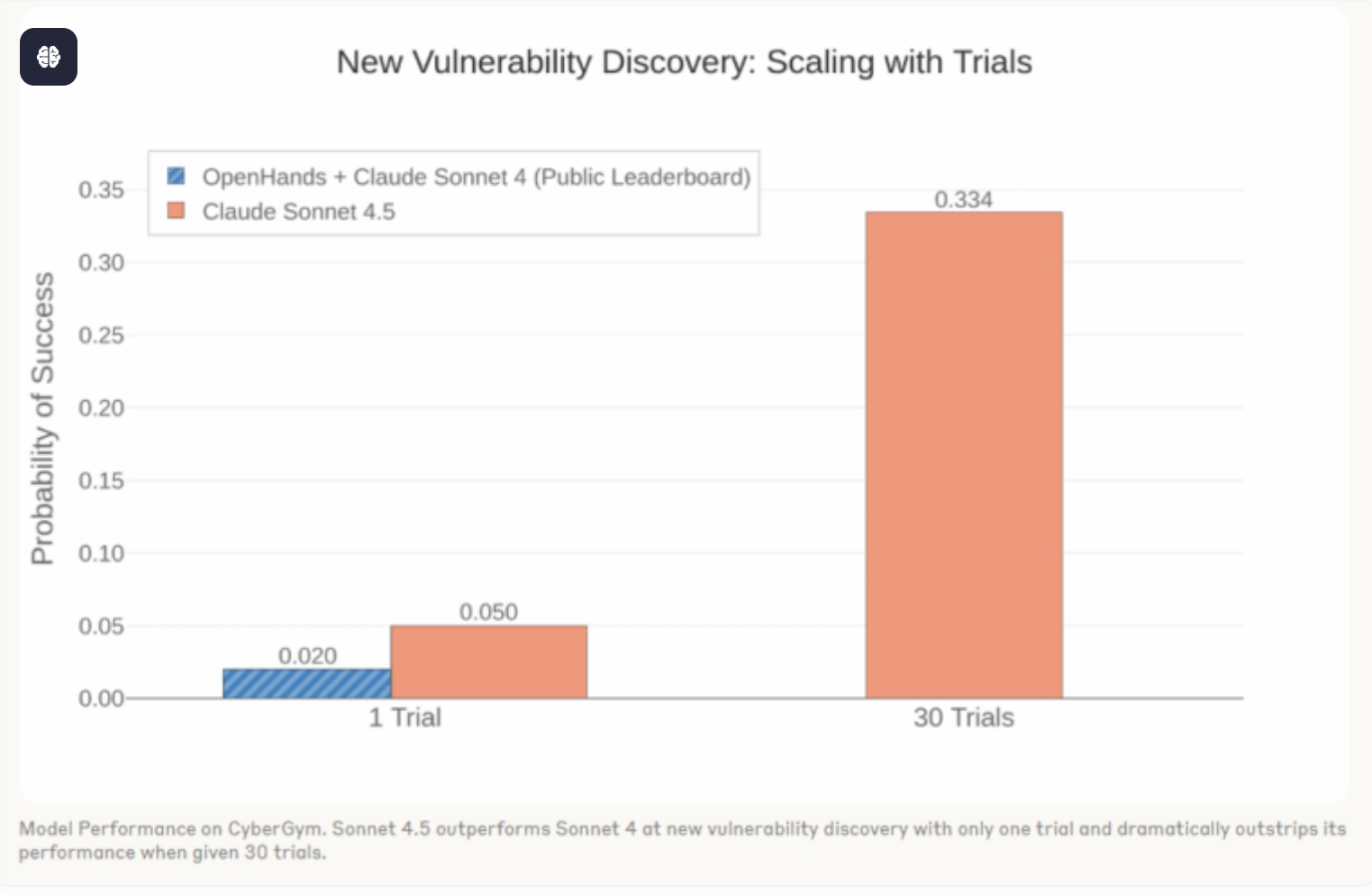

According to the core data, Claude Sonnet 4 has roughly a 2% chance of discovering a new software vulnerability, while its successor, Sonnet 4.5, raises that figure to 5%. More notably, across repeated tests, Sonnet 4.5 discovered new vulnerabilities in more than one-third of the projects, which shows its strength as a **"vulnerability discovery engine."**

Anthropic believes this leap in performance marks a turning point in **"AI's impact on cybersecurity."**

The company adds that in DARPA's recent AI Cyber Challenge, participating teams began using large language models such as Claude to build advanced **"cyber reasoning systems."** These systems can scan millions of lines of code to find and pinpoint critical vulnerabilities that need to be fixed.

Anthropic's data and observations suggest that LLMs are no longer just content-generation tools. They are quickly becoming essential instruments for analysis and defense in cybersecurity, with the potential to fundamentally change how software vulnerabilities are discovered and fixed.