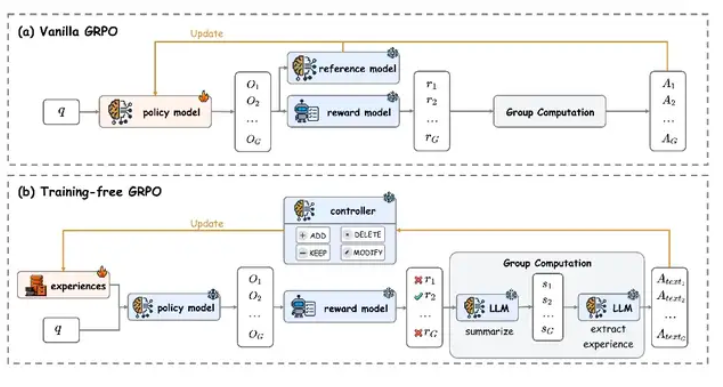

Tencent AI Lab recently released a new model optimization technique called "Training-Free GRPO" (Training-Free Group Relative Policy Optimization). Instead of fine-tuning model parameters, the method updates an external knowledge base. This sharply reduces training costs while delivering performance gains comparable to those of expensive fine-tuning.

The core idea is to distill experiential knowledge into a token-level prior: experiences expressed as text in the model's context, which improve a large model's behavior without changing any of its parameters. In experiments on the DeepSeek-V3.1-Terminus model, the Tencent research team reported notable gains on tasks such as mathematical reasoning and web search.
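To make the idea of a "token-level prior" concrete, here is a minimal Python sketch. It assumes experiences are stored as plain-text entries and simply prepended to the prompt of a frozen model. The `ExperienceLibrary` class, the `build_prompt` function, and the prompt wording are hypothetical illustrations, not code from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class ExperienceLibrary:
    """Hypothetical container for natural-language experiences (not the paper's API)."""
    entries: list = field(default_factory=list)

    def as_prior(self) -> str:
        # Render entries as a numbered text block placed before the question;
        # the frozen model conditions on these tokens instead of on updated weights.
        if not self.entries:
            return ""
        lines = [f"[{i}] {e}" for i, e in enumerate(self.entries, start=1)]
        return "Useful experiences:\n" + "\n".join(lines) + "\n\n"


def build_prompt(library: ExperienceLibrary, question: str) -> str:
    # The prior is ordinary prompt text: no model parameter is modified.
    return library.as_prior() + "Question: " + question


lib = ExperienceLibrary([
    "Verify the final answer by substituting it back into the original equation.",
    "When a geometry problem gives coordinates, compute distances explicitly.",
])
print(build_prompt(lib, "Find all real x with x^2 - 5x + 6 = 0."))
```

Because the library is just text, it can be inspected, edited, or swapped per domain without touching the model itself.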

From a technical standpoint, the motivation is that general-purpose large language models often struggle with complex tasks that require calling external tools. Training-Free GRPO keeps the main model's parameters frozen and instead dynamically maintains an external knowledge base of experiences. According to the authors, this design not only greatly reduces computational cost but also improves the model's cross-domain generalization.
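The article does not detail how the knowledge base is maintained. The sketch below shows one plausible reading, assuming a GRPO-style loop: sample a group of answers per question, score them with a verifiable reward, compute each answer's advantage relative to the group mean, and, when the group contains both successes and failures, turn the contrast into a text lesson added to the library. `toy_policy` and `toy_summarize` are hypothetical stubs standing in for LLM calls; this is an illustration, not the paper's implementation.

```python
import random

# --- Hypothetical stand-ins: in a real system these would be LLM calls. ---

def toy_policy(question, experiences, rng):
    # Toy "frozen model": answers "4" or "5" at random; its weights never change.
    return "4" if rng.random() < 0.5 else "5"

def toy_summarize(question, best, worst):
    # Stand-in for an introspection step that turns a contrast into a lesson.
    return f"For questions like '{question}', answer {best}, not {worst}."

# --- GRPO-style group comparison, applied to text instead of weights. ---

def reward(answer, reference):
    # Verifiable reward: 1.0 for an exact match, otherwise 0.0.
    return 1.0 if answer.strip() == reference.strip() else 0.0

def group_relative_advantages(rewards):
    # Each rollout's reward relative to its group mean, as in GRPO.
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]

def update_library(experiences, question, answers, advantages, summarize):
    # A group in which every rollout scored the same carries no learning signal.
    if all(a == 0 for a in advantages):
        return experiences
    best = answers[max(range(len(answers)), key=lambda i: advantages[i])]
    worst = answers[min(range(len(answers)), key=lambda i: advantages[i])]
    lesson = summarize(question, best, worst)
    # Only the text library changes; duplicate lessons are skipped.
    return experiences if lesson in experiences else experiences + [lesson]

def training_free_epoch(dataset, experiences, group_size=4, seed=0):
    rng = random.Random(seed)
    for question, reference in dataset:
        # Sample a group of answers for the same question, conditioned on the library.
        answers = [toy_policy(question, experiences, rng) for _ in range(group_size)]
        advantages = group_relative_advantages([reward(a, reference) for a in answers])
        experiences = update_library(experiences, question, answers, advantages, toy_summarize)
    return experiences

library = training_free_epoch([("2 + 2 = ?", "4"), ("3 + 1 = ?", "4")], [])
print(library)
```

The only artifact this "training" produces is a list of strings, which is then injected into the prompt at inference time; no gradients are computed anywhere.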

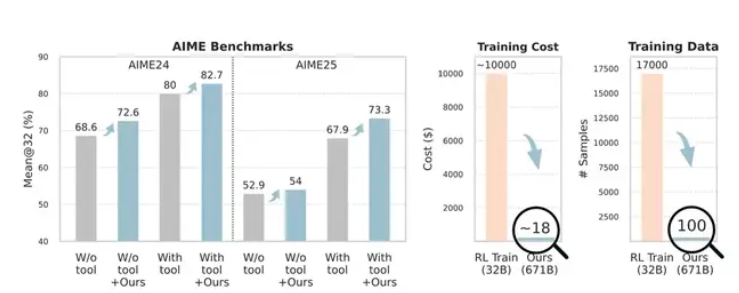

The experimental results support this approach. On the competition-level math benchmarks AIME24 and AIME25, Training-Free GRPO raised the accuracy of DeepSeek-V3.1-Terminus from 80.0% to 82.7% and from 67.9% to 73.3%, respectively. Notably, these gains required only 100 cross-domain training samples, whereas conventional reinforcement learning methods usually need thousands of samples to reach similar results, often at a cost of tens of thousands of dollars.

The method also performed well on web search, where the Pass@1 metric rose from 63.2% to 67.8%. Taken together, these results suggest that Training-Free GRPO delivers consistent improvements across different task types at low cost.
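To put the reported numbers side by side, this short Python snippet computes the absolute gain (in percentage points) and the relative gain for each benchmark. The scores are the ones quoted above; the `RESULTS` dictionary and `gains` helper are illustrative names.

```python
# Scores (%) quoted in the article: (before, after) Training-Free GRPO.
RESULTS = {
    "AIME24": (80.0, 82.7),
    "AIME25": (67.9, 73.3),
    "Web search Pass@1": (63.2, 67.8),
}

def gains(before, after):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    return absolute, absolute / before * 100

for name, (before, after) in RESULTS.items():
    absolute, relative = gains(before, after)
    print(f"{name}: +{absolute:.1f} points ({relative:.1f}% relative)")
```

The absolute gains are about 2.7, 5.4, and 4.6 points, or roughly 3% to 8% in relative terms.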

On cost, official figures put a Training-Free GRPO optimization run at about 120 RMB, compared with roughly 70,000 RMB in compute for conventional parameter fine-tuning. The gap arises mainly because the method skips compute-intensive steps such as gradient backpropagation and parameter updates.
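The scale of the cost difference follows from simple arithmetic on the two approximate figures quoted above (variable names are illustrative):

```python
training_free_cost_rmb = 120    # approximate cost quoted for Training-Free GRPO
fine_tuning_cost_rmb = 70_000   # approximate cost quoted for parameter fine-tuning

ratio = fine_tuning_cost_rmb / training_free_cost_rmb
saving = 1 - training_free_cost_rmb / fine_tuning_cost_rmb

print(f"Fine-tuning costs about {ratio:.0f}x more")           # prints "Fine-tuning costs about 583x more"
print(f"Training-Free GRPO saves about {saving:.1%} of cost")  # prints "Training-Free GRPO saves about 99.8% of cost"
```

In other words, if these figures hold, fine-tuning costs roughly 580 times as much, and the training-free approach avoids more than 99% of the expense.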

The technique opens a new direction for AI model optimization. For small and medium-sized enterprises and resource-constrained research institutions in particular, a low-cost, efficient optimization method lowers the barrier to applying large models. That said, the method's scope and its performance in other scenarios still need further validation: the published results so far focus on specific tasks such as mathematical reasoning and information retrieval.

Paper URL: https://arxiv.org/abs/2510.08191