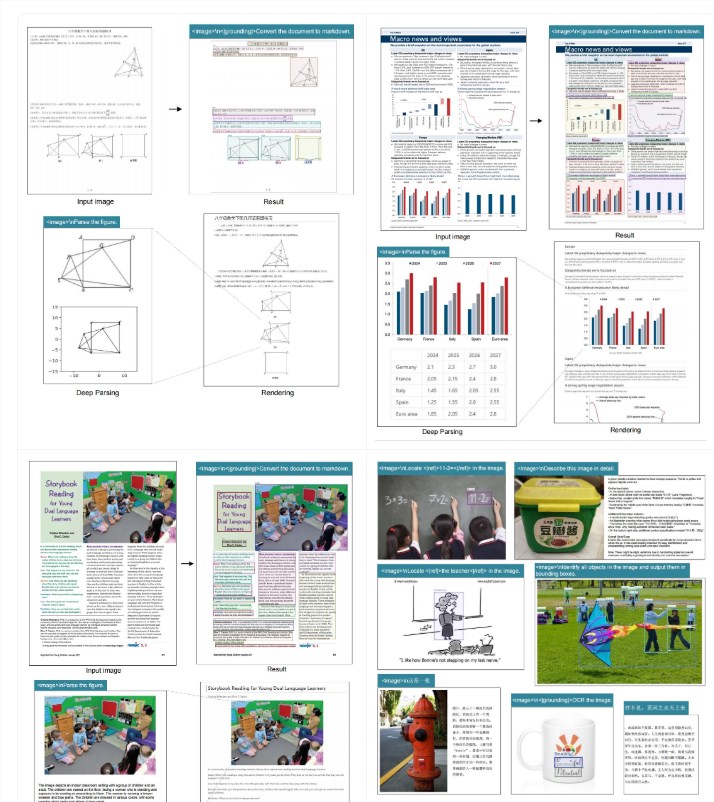

AI technology company DeepSeek recently launched a new optical character recognition (OCR) model called "DeepSeek-OCR." This model is an end-to-end vision-language model (VLM) designed to efficiently parse documents by compressing long text into a small set of visual tokens and then decoding them using a language model.

The research team reports that the model reached about 97% decoding accuracy on the Fox benchmark when the number of text tokens was within 10 times the number of visual tokens, and that it still produced useful output at 20 times compression. DeepSeek-OCR also performed well on the OmniDocBench benchmark while using far fewer visual tokens than the models it was compared against.
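To make the "10 times" and "20 times" figures concrete, here is a minimal sketch of how the compression ratio is computed. The page sizes are hypothetical and the function name is ours, not part of DeepSeek-OCR.

```python
def compression_ratio(text_tokens: int, visual_tokens: int) -> float:
    """Return how many text tokens each visual token stands in for."""
    if visual_tokens <= 0:
        raise ValueError("visual_tokens must be positive")
    return text_tokens / visual_tokens


# Hypothetical page: 1,000 text tokens encoded as 100 visual tokens.
print(f"{compression_ratio(1000, 100):.1f}x")

# The same visual budget applied to a page with twice as much text.
print(f"{compression_ratio(2000, 100):.1f}x")
```

The first page sits at the edge of the range where the team reports high accuracy. The second falls in the 20 times regime, where output is described only as still useful.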

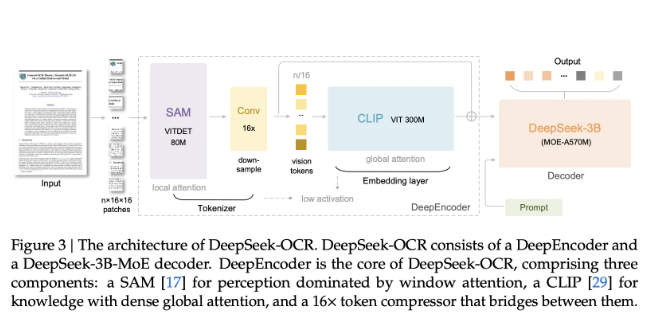

DeepSeek-OCR's architecture has two main components: DeepEncoder, a visual encoder for high-resolution input, and DeepSeek3B-MoE-A570M, a mixture-of-experts (MoE) decoder. The encoder combines SAM-based window attention with a convolutional compressor, which keeps activation memory under control at high resolutions and reduces the number of output tokens. The decoder has 3 billion parameters in total, of which about 570 million are active per token.
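The arithmetic behind the encoder and decoder descriptions can be sketched roughly as follows. The 16-pixel patch size and the 16x compression factor are assumptions based on our reading of the paper, not figures stated in this article, so check them against the paper before relying on them.

```python
def visual_tokens(side_px: int, patch_px: int = 16, compression: int = 16) -> int:
    """Estimate the encoder's output tokens for a square image.

    patch_px and compression are assumed values (see the note above).
    """
    if side_px % patch_px != 0:
        raise ValueError("side_px must be a multiple of patch_px")
    patches = (side_px // patch_px) ** 2  # patch tokens before compression
    return patches // compression         # tokens after the convolutional compressor


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of decoder parameters used for each token in an MoE model."""
    return active_params / total_params


print(visual_tokens(1024))                    # 4096 patches compressed to 256 tokens
print(f"{active_fraction(570e6, 3e9):.0%}")   # roughly 19% of parameters active
```

Under these assumptions, a 1024 x 1024 page shrinks from 4,096 patch tokens to 256 visual tokens, and each decoded token touches only about a fifth of the decoder's weights.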

DeepEncoder offers several resolution modes (Tiny, Small, Base, and Large), each with its own input resolution and number of visual tokens. Two dynamic modes, Gundam and Gundam-Master, adjust the token budget to the complexity of the page.
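The modes above can be laid out as a small lookup table. The resolutions and token counts below are the values we recall from the paper, not from this article, so treat them as assumptions to verify. The tiling formula for Gundam (several local tiles plus one global view) is likewise a simplified sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    name: str
    resolution: int  # side of the square input, in pixels (assumed)
    tokens: int      # visual tokens produced (assumed)


# Assumed values; confirm against the paper before use.
MODES = {
    "Tiny": Mode("Tiny", 512, 64),
    "Small": Mode("Small", 640, 100),
    "Base": Mode("Base", 1024, 256),
    "Large": Mode("Large", 1280, 400),
}


def gundam_tokens(num_tiles: int) -> int:
    """Token budget for a tiled page: n Small-sized tiles plus one Base-sized overview."""
    if num_tiles < 0:
        raise ValueError("num_tiles must be non-negative")
    return num_tiles * MODES["Small"].tokens + MODES["Base"].tokens


for n in (2, 4, 9):
    print(f"{n} tiles -> {gundam_tokens(n)} visual tokens")
```

The budget grows linearly with the number of tiles, which is how a dynamic mode can spend more tokens on complex pages and fewer on simple ones.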

The DeepSeek team trained the model in phases, first training DeepEncoder with a next-token prediction objective and then training the full system across multiple nodes. According to the team, the resulting system can generate more than 200,000 pages of document data per day. For practical use, the team recommends starting with Small mode and switching to Gundam mode when a page contains dense small fonts or a large number of tokens.
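The team's recommendation can be expressed as a simple rule of thumb. This is a hypothetical heuristic, not code from DeepSeek: the function name, the 100-token budget for Small mode, and the 10x threshold are all assumptions chosen for illustration.

```python
def choose_mode(
    estimated_text_tokens: int,
    has_dense_small_text: bool,
    small_mode_tokens: int = 100,  # assumed visual-token budget of Small mode
    max_ratio: float = 10.0,       # assumed limit on text tokens per visual token
) -> str:
    """Start with Small; fall back to Gundam for dense or token-heavy pages."""
    ratio = estimated_text_tokens / small_mode_tokens
    if has_dense_small_text or ratio > max_ratio:
        return "Gundam"
    return "Small"


print(choose_mode(600, has_dense_small_text=False))   # a light page
print(choose_mode(600, has_dense_small_text=True))    # dense small fonts
print(choose_mode(1500, has_dense_small_text=False))  # too many tokens for Small
```

In practice the estimate of text tokens would have to come from somewhere, for example a quick first pass in Small mode, and the thresholds would need tuning on real documents.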

The release of DeepSeek-OCR marks a significant advance in document AI. Its efficiency and flexibility make it suitable for processing many types of documents.

Paper: https://github.com/deepseek-ai/DeepSeek-OCR/blob/main/DeepSeek_OCR_paper.pdf

Hugging Face: https://huggingface.co/deepseek-ai/DeepSeek-OCR

Key points:

🌟 DeepSeek-OCR is a newly released 3B vision-language model with efficient OCR and document parsing capabilities.

📊 The model achieved 97% decoding accuracy on the Fox benchmark and maintains good performance even with significant compression.

🔧 DeepEncoder supports multiple modes and resolution choices to adapt to different document complexities and needs.