Today, Tencent officially released and open-sourced the Hunyuan World Model 1.1 (WorldMirror). The new version brings significant upgrades in multi-view and video input support, single-GPU deployment, and generation speed, which could make 3D reconstruction technology far more widely accessible.

The Hunyuan World Model 1.1 aims to turn professional 3D reconstruction into a tool that ordinary users can pick up easily. The model can generate professional-grade 3D scenes from videos or images in just a few seconds, greatly improving the efficiency and convenience of 3D reconstruction. Its predecessor, the Hunyuan World Model 1.0, was released in July this year and was the industry's first open-source, navigable world generation model compatible with traditional CG pipelines. The new version builds on that foundation, adding end-to-end 3D reconstruction with multimodal prior injection and a unified output for multiple tasks.

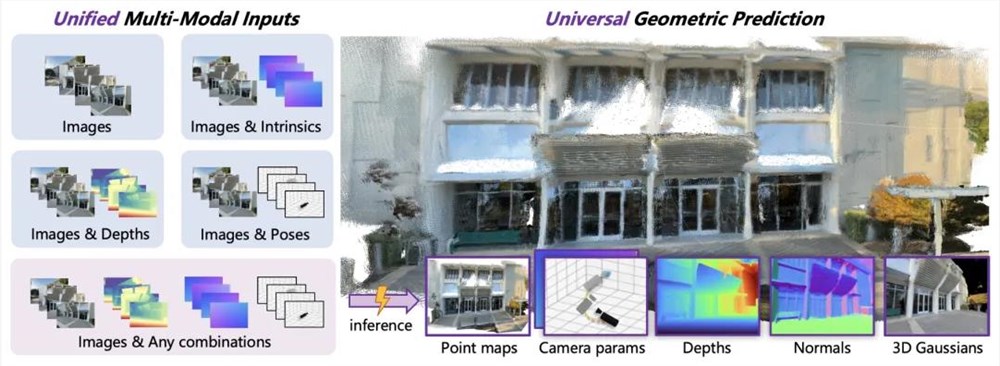

The model has three main features: flexible handling of different inputs, general-purpose 3D visual prediction, and single-GPU deployment with inference in seconds. The Hunyuan World Model 1.1 adopts a multimodal prior guidance mechanism that supports injecting information such as camera poses, camera intrinsics, and depth maps, so that the generated 3D scenes are more geometrically accurate. The model also covers a range of 3D geometry prediction tasks, including point clouds, depth maps, camera parameters, surface normals, and novel view synthesis, and shows significant performance advantages across them.
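To make the relationship between these quantities concrete, the following is a minimal sketch of how a depth map, camera intrinsics, and a camera pose combine into a point cloud under a standard pinhole camera model. It is a textbook back-projection, not the model's own code: the function name `backproject_depth`, its parameters, and the camera-to-world pose convention are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_depth(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
) -> List[Point]:
    """Lift a depth map to world-space 3D points (illustrative helper).

    depth[v][u] is the z-depth at pixel column u, row v.
    fx, fy, cx, cy are pinhole intrinsics (focal lengths, principal point).
    rotation (3x3) and translation (length 3) are assumed to form a
    camera-to-world pose. Pixels with non-positive depth are skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # treat non-positive depth as invalid
            # Pixel -> camera coordinates using the inverse intrinsics.
            cam = ((u - cx) / fx * z, (v - cy) / fy * z, z)
            # Camera -> world coordinates: p_world = R * p_cam + t.
            world = tuple(
                sum(rotation[i][k] * cam[k] for k in range(3)) + translation[i]
                for i in range(3)
            )
            points.append(world)
    return points


if __name__ == "__main__":
    # Toy 2x2 depth map; the zero entry marks an invalid pixel.
    depth_map = [[2.0, 2.0], [0.0, 4.0]]
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    cloud = backproject_depth(
        depth_map, 1.0, 1.0, 0.0, 0.0, identity, [0.0, 0.0, 1.0]
    )
    for point in cloud:
        print(point)
```

The sketch shows why pose, intrinsics, and depth are useful priors: given all three, the 3D position of every valid pixel is fully determined, so supplying any of them constrains the geometry the model has to predict.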

Compared with traditional 3D reconstruction methods, the Hunyuan World Model 1.1 uses a purely feedforward architecture that outputs all 3D attributes directly in a single forward pass, significantly reducing processing time. For a typical input of 8 to 32 views, the model can complete inference in just 1 second, meeting the needs of real-time applications.

In terms of technical architecture, the Hunyuan World Model 1.1 combines multimodal prior prompting with a general geometric prediction architecture and a curriculum learning strategy, allowing the model to parse complex real-world environments efficiently and accurately. Through a dynamic injection mechanism, the model can flexibly handle whichever priors are available, improving the consistency of the 3D structure and the quality of the reconstruction.

The Hunyuan World Model 1.1 is now open-sourced on GitHub, so developers can clone the repository and deploy it locally. Ordinary users can also try it online through a Hugging Face Space, uploading multi-view images or videos to preview the generated 3D scenes in real time. The release marks an important advance in 3D reconstruction and is expected to further the development of industries such as virtual reality and game development.

Project Homepage: https://3d-models.hunyuan.tencent.com/world/

GitHub Repository: https://github.com/Tencent-Hunyuan/HunyuanWorld-Mirror

Hugging Face Model: https://huggingface.co/tencent/HunyuanWorld-Mirror