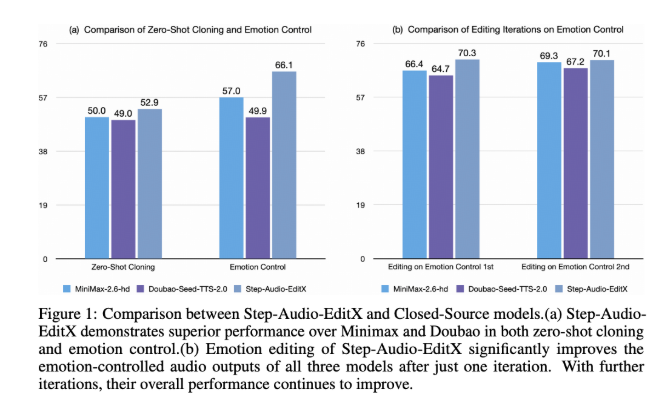

StepFun AI has released Step-Audio-EditX, an open-source, 3B-parameter audio editing model that aims to make editing speech as direct and controllable as editing text. It does so by recasting edits to the audio signal as token-level operations, which makes expressive voice editing much simpler.

Most current zero-shot text-to-speech (TTS) systems offer only limited control over emotion, style, accent, and tone. They can generate natural-sounding speech, but they often fail to match precisely what the user asked for. Earlier research tried to separate these factors with additional encoders and more complex architectures; Step-Audio-EditX instead achieves control by changing the training data and training objectives.

Step-Audio-EditX uses a dual-codebook tokenizer that maps speech into two token streams: a linguistic stream at 16.7 Hz and a semantic stream at 25 Hz. The model is trained on a mixed corpus of text and audio tokens, so a single model can read and generate both kinds of tokens.
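The two token rates quoted above stand in a ratio of roughly 2:3 (16.7 / 25 ≈ 0.67). The sketch below illustrates what that means for sequence length, and one plausible way to merge the streams into a single sequence by interleaving fixed-size blocks. The 2:3 block interleaving, the function names, and the `L`/`S` prefixes are assumptions made for illustration; the article does not describe how the streams are combined.

```python
from typing import List

LINGUISTIC_HZ = 16.7  # rate quoted in the article
SEMANTIC_HZ = 25.0    # rate quoted in the article


def tokens_for(duration_s: float, rate_hz: float) -> int:
    """Approximate number of tokens a stream emits for a clip of this length."""
    return round(duration_s * rate_hz)


def interleave(linguistic: List[int], semantic: List[int],
               n_ling: int = 2, n_sem: int = 3) -> List[str]:
    """Merge two streams in fixed blocks (assumed: 2 linguistic, then 3 semantic)."""
    merged: List[str] = []
    i = j = 0
    while i < len(linguistic) or j < len(semantic):
        # Prefix each token so the two vocabularies stay distinguishable.
        merged += [f"L{t}" for t in linguistic[i:i + n_ling]]
        merged += [f"S{t}" for t in semantic[j:j + n_sem]]
        i += n_ling
        j += n_sem
    return merged


if __name__ == "__main__":
    # A 6-second clip yields about 100 linguistic and 150 semantic tokens.
    print(tokens_for(6.0, LINGUISTIC_HZ), tokens_for(6.0, SEMANTIC_HZ))
    print(interleave([1, 2, 3, 4], [10, 11, 12, 13, 14, 15]))
```

Interleaving in rate-proportional blocks keeps tokens that cover the same stretch of audio close together in the sequence, which is one reason a scheme like this might be chosen.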

The key ingredient is large-margin learning: a later training phase improves the model using synthesized large-margin triplets and quadruplets. Trained on high-quality data from approximately 60,000 speakers, the model performs well on emotion and style editing. The model is also refined with reinforcement learning on human ratings and preference data, which improves the naturalness and accuracy of the generated speech.
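To make the idea of "large-margin" training data concrete, here is a minimal sketch of how such triplets could be represented and filtered. It assumes each triplet pairs one transcript with a source clip and an edited target clip, plus a score for how strongly the two clips differ in the edited attribute. The field names, the `margin` score, and the threshold are hypothetical; the article does not say how the triplets are built or scored.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Triplet:
    text: str          # transcript shared by both clips
    source_audio: str  # hypothetical path to the unedited (e.g. neutral) clip
    target_audio: str  # hypothetical path to the edited (e.g. emotional) clip
    margin: float      # hypothetical score: how far target differs from source


def select_large_margin(candidates: List[Triplet], min_margin: float) -> List[Triplet]:
    """Keep only triplets whose margin score reaches the threshold."""
    return [t for t in candidates if t.margin >= min_margin]


if __name__ == "__main__":
    pool = [
        Triplet("Good morning.", "a_neutral.wav", "a_happy.wav", margin=2.0),
        Triplet("Good morning.", "b_neutral.wav", "b_happy.wav", margin=8.0),
    ]
    kept = select_large_margin(pool, min_margin=6.0)
    print([t.target_audio for t in kept])
```

Filtering for a large margin means the model only sees pairs where the edit is clearly audible, so the training signal for "what changed" is less ambiguous.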

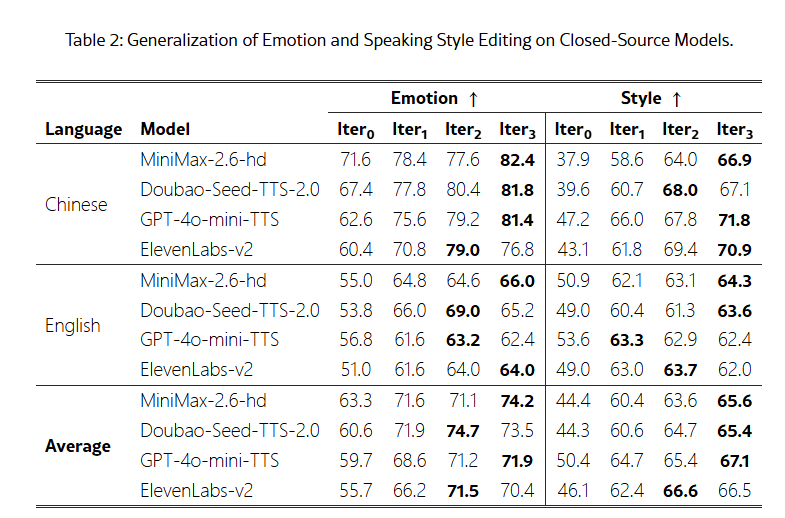

To evaluate the model, the research team introduced the Step-Audio-Edit-Test benchmark, which uses Gemini 2.5 Pro as the judge. The results showed that accuracy on emotion and speaking-style editing improved significantly over multiple rounds of editing. Step-Audio-EditX can also be applied to audio produced by closed-source TTS systems to improve its quality, which opens up new possibilities for audio editing research.
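As a rough illustration of how accuracy across editing rounds could be tallied, the sketch below assumes each test sample records its target label and the label a judge assigned after each round. The record layout and function name are hypothetical, and the judge itself (an LLM in the benchmark) is outside the scope of this snippet.

```python
from typing import Dict, List


def accuracy_by_round(samples: List[Dict]) -> List[float]:
    """Return the fraction of samples judged correct at each editing round.

    Each sample is {"target": str, "judged": [label_round_0, label_round_1, ...]},
    where the judged labels come from an external judge.
    """
    if not samples:
        return []
    # Only score rounds that every sample actually went through.
    n_rounds = min(len(s["judged"]) for s in samples)
    return [
        sum(s["judged"][r] == s["target"] for s in samples) / len(samples)
        for r in range(n_rounds)
    ]


if __name__ == "__main__":
    demo = [
        {"target": "happy", "judged": ["neutral", "happy", "happy"]},
        {"target": "sad", "judged": ["neutral", "neutral", "sad"]},
    ]
    print(accuracy_by_round(demo))
```

The sample data here is made up purely to exercise the function and does not reflect any reported benchmark result.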

Paper: https://arxiv.org/abs/2511.03601

Key Points:

🎤 **StepFun AI launches the Step-Audio-EditX model, making audio editing easier.**

📈 **The model uses large-margin learning to improve the accuracy of emotional and stylistic editing.**

🔍 **The team introduces the Step-Audio-Edit-Test benchmark, judged by Gemini 2.5 Pro, to measure emotion and speaking-style editing accuracy.**