The long-standing problem of "catastrophic forgetting" in artificial intelligence has finally seen a breakthrough. At the NeurIPS 2025 conference, a Google research team announced Nested Learning, a new machine learning paradigm inspired by the plasticity of the human brain. According to the team, AI models can for the first time retain old knowledge almost completely while continuing to learn new tasks, with forgetting rates approaching zero. The result marks a key turning point for AI: a shift from "one-time expert" to "lifelong learner."

Why Does AI Always "Forget What It Learns"?

When a traditional neural network learns a new skill (such as programming), the parameter updates overwrite what it already knows (such as writing), and its earlier abilities decline sharply. This "catastrophic forgetting" severely limits how useful AI can be in dynamic environments. Existing methods, such as freezing some parameters or adding regularization constraints, are only stopgaps: they cannot reproduce the way the human brain coordinates flexible short-term memory with stable long-term memory.
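The overwriting effect can be reproduced with a toy example. The sketch below is illustrative only and is not taken from Google's work: it assumes a one-parameter linear model, two made-up tasks (`task_a`, `task_b`), and a simple quadratic penalty that pulls the weight back toward its old value as a stand-in for "regularization constraints".

```python
def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.05, steps=200, anchor=None, strength=0.0):
    """Plain gradient descent; optionally penalize drift away from `anchor`."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        if anchor is not None:
            # Hypothetical regularizer: strength * (w - anchor)^2
            grad += 2 * strength * (w - anchor)
        w -= lr * grad
    return w

# Two conflicting toy tasks (assumed for illustration).
task_a = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]   # wants w = 2
task_b = [(x, -1.0 * x) for x in (1.0, 2.0, 3.0)]  # wants w = -1

w_a = train(0.0, task_a)                              # learn task A
w_plain = train(w_a, task_b)                          # then task B, no protection
w_reg = train(w_a, task_b, anchor=w_a, strength=5.0)  # then task B, regularized

print("task A loss after A only:      ", mse(w_a, task_a))
print("task A loss after B (plain):   ", mse(w_plain, task_a))
print("task A loss after B (anchored):", mse(w_reg, task_a))
print("task B loss (plain / anchored):", mse(w_plain, task_b), mse(w_reg, task_b))
```

In this toy setting, training on the second task wipes out the first, and the penalty only trades one loss against the other: the model forgets less of task A but also learns task B less well.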

Layered Memory Like an Onion: The Revolutionary Architecture of Nested Learning

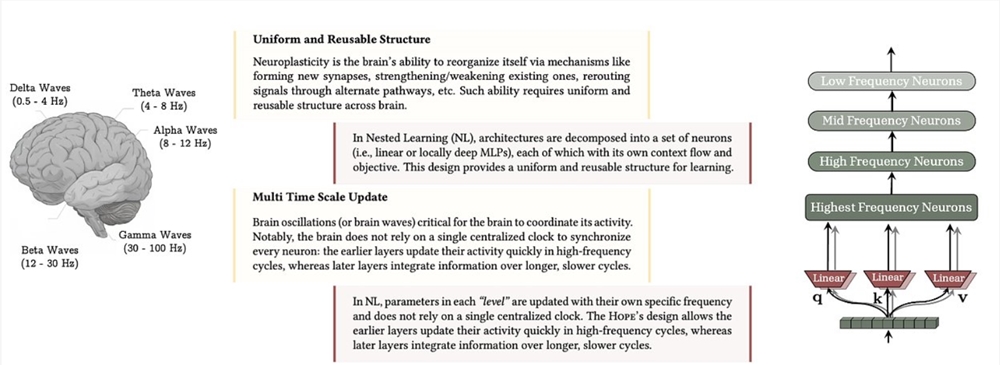

Google's solution completely restructures the learning framework: instead of treating the model as a single whole, it treats it as a set of nested optimization subsystems, forming a "memory onion" that operates on multiple time scales:

High-frequency layer: quickly responds to immediate tasks, such as temporary context in conversations;

Mid-frequency layer: integrates recent experiences to ensure smooth knowledge transitions;

Low-frequency layer: locks in core long-term memories, such as basic language rules or physical common sense, almost unaffected by new data.

This architecture lets each layer coordinate its own update rhythm through a unified optimization mechanism, achieving "adaptive modification": new knowledge is absorbed while old knowledge is protected, avoiding the memory erasure caused by gradient conflicts.
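The idea of layers that update at different frequencies can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Google's algorithm: the `Level` class, the update periods (1, 8, 64), the learning rates, and the toy target stream are all hypothetical. Each level accumulates the same error signal but only applies it when its own period elapses.

```python
class Level:
    """One parameter group with its own update period (hypothetical design)."""

    def __init__(self, name, period, lr):
        self.name = name
        self.period = period    # apply an update every `period` steps
        self.lr = lr
        self.weight = 0.0
        self.grad_sum = 0.0     # gradient accumulated since the last update
        self.updates = 0

    def observe(self, grad, step):
        self.grad_sum += grad
        if step % self.period == 0:
            # Apply the averaged gradient, then reset the accumulator.
            self.weight -= self.lr * self.grad_sum / self.period
            self.grad_sum = 0.0
            self.updates += 1

def run(levels, targets):
    """Feed a stream of targets; the prediction is the sum of all level weights."""
    for step, target in enumerate(targets, start=1):
        prediction = sum(level.weight for level in levels)
        grad = 2 * (prediction - target)   # gradient of squared error
        for level in levels:
            level.observe(grad, step)
    return levels

levels = [
    Level("high-frequency", period=1, lr=0.1),
    Level("mid-frequency", period=8, lr=0.05),
    Level("low-frequency", period=64, lr=0.01),
]
# Toy stream: the target switches halfway through.
stream = [1.0] * 64 + [3.0] * 64
run(levels, stream)

for level in levels:
    print(f"{level.name}: {level.updates} updates, weight={level.weight:.3f}")
```

The point of the sketch is only the scheduling: over the same 128 steps the fast level is rewritten at every step, while the slow level changes twice, so a short-lived shift in the data has little opportunity to disturb it.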

Testing Results: Forgetting Rates Approach Zero

HOPE (Hierarchical Optimization with Persistent Evolution), a prototype system built on this paradigm, has shown impressive results on multiple benchmarks:

In the Needle-In-A-Haystack long-context retrieval task, accuracy improved by over 20%;

In multi-task continual learning scenarios (such as alternating training on programming and writing), performance retention on old tasks reached as high as 98%, compared with only 70% for traditional methods;

The model's forgetting curve shows a gradual decay rather than sudden collapse, more closely resembling human learning behavior.
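The article does not say how the retention rate is computed. One common reading, assumed here, is the old-task score after further training divided by the score before it. The scores in the example are hypothetical and were chosen only to reproduce the 98% and 70% figures.

```python
def retention_rate(score_before, score_after):
    """Fraction of the old-task score that survives later training."""
    if score_before <= 0:
        raise ValueError("score_before must be positive")
    return score_after / score_before

def forgetting(score_before, score_after):
    """Complement of retention: the share of old-task performance lost."""
    return 1.0 - retention_rate(score_before, score_after)

# Hypothetical old-task accuracies before and after learning a new task.
nested = retention_rate(0.80, 0.784)
baseline = retention_rate(0.80, 0.56)

print(f"nested-style retention: {nested:.0%}")
print(f"baseline retention:     {baseline:.0%}")
```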

Potential Applications: From Gemini to Robots, AI Will Truly "Come Alive"

Putting Nested Learning into practice could reshape multiple fields:

Large models (such as Gemini): without repeated retraining, they can continuously absorb new knowledge online, achieving "lifelong evolution";

Medical AI: learn from new cases without forgetting decades of medical knowledge;

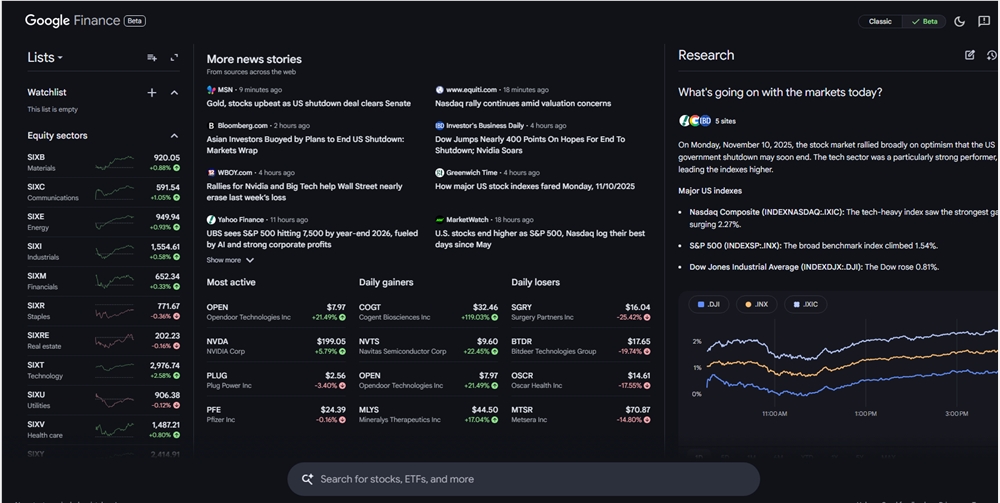

Financial systems: adapt to short-term market fluctuations while retaining long-term judgment of economic cycles;

Embodied robots: learn new actions in complex environments without "forgetting" how to walk safely.