Tencent Hunyuan officially open-sourced its new OCR model, HunyuanOCR, on November 25. With only 1 billion (1B) parameters, the model is built on Hunyuan's native multimodal architecture and achieves state-of-the-art (SOTA) results on several industry OCR benchmarks, offering a lightweight, efficient option for deploying OCR in practice.

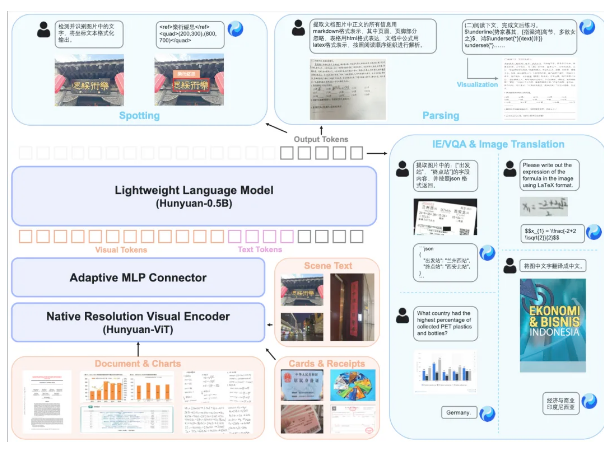

HunyuanOCR follows a fully end-to-end design made up of three components: a native-resolution visual encoder, an adaptive visual adapter, and a lightweight Hunyuan language model. Its core advantage is efficiency and ease of use. The model is compact and easy to deploy, and it produces its final output in a single forward pass, which is far more efficient than the cascaded (multi-stage) pipelines common in the industry.
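To make the data flow of an end-to-end OCR model concrete, here is a toy Python sketch. It is not HunyuanOCR's code. The patch size, the 2x2 merge performed by the adapter, and every function name are hypothetical, and a dummy decoder stands in for the Hunyuan language model. The sketch shows only the overall shape of such a pipeline. The image is tiled at its own size rather than resized, an adapter shortens the resulting token sequence, and a single decoder call produces the output. There are no separate detection, cropping, or layout stages.

```python
from typing import Callable, List

Image = List[List[float]]                    # grayscale pixels, indexed [row][col]
Decoder = Callable[[List[float], str], str]  # stand-in signature for the language model

PATCH = 16  # hypothetical patch size of the visual encoder
MERGE = 2   # hypothetical adapter factor: each MERGE x MERGE block of patches -> 1 token


def encode_native_resolution(image: Image, patch: int = PATCH) -> List[List[float]]:
    """Toy 'visual encoder': tiles the image at its own size (no resize to a fixed
    square) and turns each tile into one feature, here simply its mean pixel value.
    Edge tiles may be smaller than patch x patch."""
    rows, cols = len(image), len(image[0])
    grid = []
    for top in range(0, rows, patch):
        grid_row = []
        for left in range(0, cols, patch):
            tile = [image[y][x]
                    for y in range(top, min(top + patch, rows))
                    for x in range(left, min(left + patch, cols))]
            grid_row.append(sum(tile) / len(tile))
        grid.append(grid_row)
    return grid


def adapt(grid: List[List[float]], merge: int = MERGE) -> List[float]:
    """Toy 'adapter': averages each merge x merge block of patch features,
    shrinking the token sequence that is handed to the language model."""
    height, width = len(grid), len(grid[0])
    tokens = []
    for gy in range(0, height, merge):
        for gx in range(0, width, merge):
            block = [grid[y][x]
                     for y in range(gy, min(gy + merge, height))
                     for x in range(gx, min(gx + merge, width))]
            tokens.append(sum(block) / len(block))
    return tokens


def ocr_end_to_end(image: Image, prompt: str, decoder: Decoder) -> str:
    """One forward pass: encoder -> adapter -> language model, with no
    intermediate detection, cropping, or layout-analysis stages."""
    return decoder(adapt(encode_native_resolution(image)), prompt)


def dummy_decoder(visual_tokens: List[float], prompt: str) -> str:
    """Hypothetical stand-in for the language model; it only reports its inputs."""
    return f"{len(visual_tokens)} visual tokens for prompt: {prompt!r}"


if __name__ == "__main__":
    page = [[1.0] * 96 for _ in range(64)]  # a blank 64 x 96 "page"
    print(ocr_end_to_end(page, "Extract all text.", dummy_decoder))
```

On a blank 64 x 96 page, the toy encoder produces a 4 x 6 grid of 24 patch features, and the adapter reduces them to 6 tokens. Because the token count follows the input size instead of being fixed by a resize, small text is not downscaled away. This is the usual motivation for native-resolution encoders, and the trade-off is longer sequences for large pages.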

HunyuanOCR also posts strong benchmark results. On OmniDocBench, a complex document parsing benchmark, it scored 94.1, ahead of leading models such as Google's Gemini 3 Pro. On an in-house benchmark covering nine scenarios, including documents, handwriting, and street scenes, its text detection and recognition performance significantly exceeded that of other open-source and commercial models. On OCRBench, it achieved a total score of 860, the best result among models with fewer than 3B parameters.

For translation involving less widely spoken languages, the model supports bidirectional translation between 14 commonly used minor languages and Chinese or English. It also won first place in the small-model track of the ICDAR 2025 end-to-end document translation competition.

HunyuanOCR supports application scenarios such as complex multilingual document parsing, extraction of fields from invoices and receipts as JSON, and automatic extraction of bilingual subtitles from video. It is already used in areas such as ID card processing, video creation, and cross-border communication. Users can try it through the web and mobile links, download it from the open-source repositories on GitHub and Hugging Face, or test it directly in the Hugging Face Space.