OpenAI announced that it is removing the standalone "voice mode" entry point and integrating real-time voice and visual output directly into the main ChatGPT chat window. Users can tap the microphone icon to speak and view maps, charts, and images at the same time, while the conversation transcript appears in real time, so there is no need to switch pages.

Core Updates

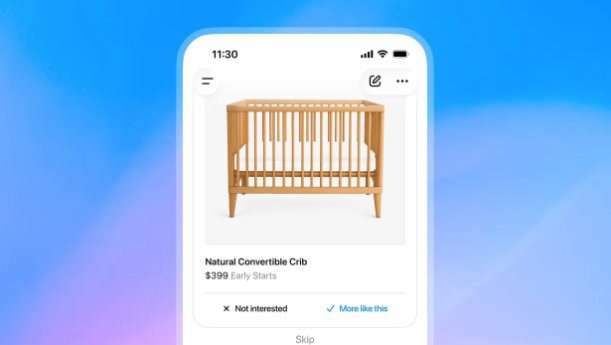

- Multimodal Display: When a question is asked by voice, the interface displays related visual results (route maps, data charts, product images, etc.) in real time and automatically scrolls the text transcript

- Zero-Interruption Interaction: Continuous follow-up questions are supported; the model updates the visuals while it responds by voice, at an average latency of less than 300 ms

- Revert Option: In Settings → Voice → "Immersive Audio Mode", users can switch back to the old standalone interface if they prefer an audio-only experience

Technical Foundation

The new voice experience is powered by GPT-5.1-large plus a multimodal visual encoder, with a context window of 100k tokens. Voice processing combines on-device voice activity detection (VAD) with cloud-based automatic speech recognition (ASR), achieving a transcription accuracy of 96% and supporting 12 languages.
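
The announcement does not say how the on-device VAD works. Purely as an illustration of the general idea, the sketch below uses a simple energy threshold to find the spans of an audio buffer that likely contain speech, so that only those spans would need to be sent to a cloud ASR service (not shown). This is not OpenAI's implementation: the function names, the frame length, the threshold, and the `hangover` parameter are all assumptions chosen for the example.

```python
import math
from typing import List, Sequence, Tuple


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1.0, 1.0])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def speech_segments(
    samples: Sequence[float],
    frame_len: int = 160,      # assumed: 10 ms frames at 16 kHz
    threshold: float = 0.02,   # assumed RMS level separating speech from silence
    hangover: int = 2,         # quiet frames tolerated before a segment is closed
) -> List[Tuple[int, int]]:
    """Return (start_sample, end_sample) spans judged to contain speech."""
    segments: List[Tuple[int, int]] = []
    start = None   # sample index where the current segment began
    quiet = 0      # consecutive quiet frames seen inside the current segment
    n_frames = len(samples) // frame_len

    for i in range(n_frames):
        frame = samples[i * frame_len:(i + 1) * frame_len]
        if frame_rms(frame) >= threshold:
            if start is None:
                start = i * frame_len
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover:
                # Close the segment at the end of the last loud frame.
                segments.append((start, (i - quiet + 1) * frame_len))
                start = None
                quiet = 0

    if start is not None:
        # Audio ended while a segment was still open.
        segments.append((start, (n_frames - quiet) * frame_len))
    return segments


# Synthetic buffer: 2 silent frames, 3 loud frames, 5 silent frames.
demo = [0.0] * 320 + [0.5] * 480 + [0.0] * 800
print(speech_segments(demo))  # [(320, 800)]
```

Running the detector locally means silence never has to leave the device, which is one plausible reason to pair on-device VAD with cloud ASR. Production systems typically use trained models rather than a fixed energy threshold.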

Release and Coverage

- Immediate Rollout: Available now on all platforms for Plus, Pro, and Team users; access for free users will open gradually later

- Hardware Compatibility: Optimized for the iPhone 15 series and Pixel 9, with less than a 4% impact on battery life in low-power mode

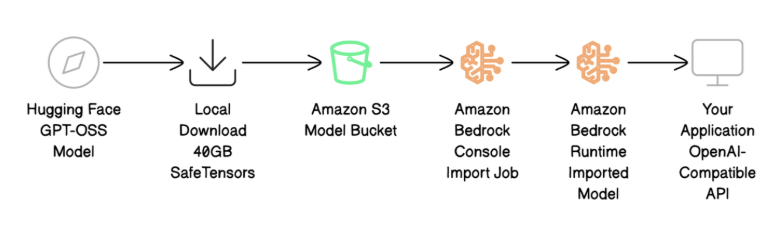

- API Plan: The RealtimeMultimodal interface will open to developers in Q1 2026, allowing third-party apps to use the same voice and visual capabilities

OpenAI stated that this integration is the first step toward the "ChatGPT 6.0 experience," and that future updates will add scenarios such as price-comparison shopping and group voice chats, continuing to expand its multimodal capabilities.