Last night, a 1024×1024 neon Hanfu image rendered in just 2.3 seconds on an RTX 4090, with VRAM usage holding steady at 13GB. Z-Image-Turbo from Alibaba Tongyi Lab stunned the audience: with only 6B parameters, it matched and even slightly surpassed closed-source flagship models with 20B+ parameters.

Z-Image speaks with results, without any flashy slogans:

- It delivers print-quality images in just 8 sampling steps and runs on consumer-grade GPUs such as the 3060 (6GB), with VRAM usage capped at 16GB;

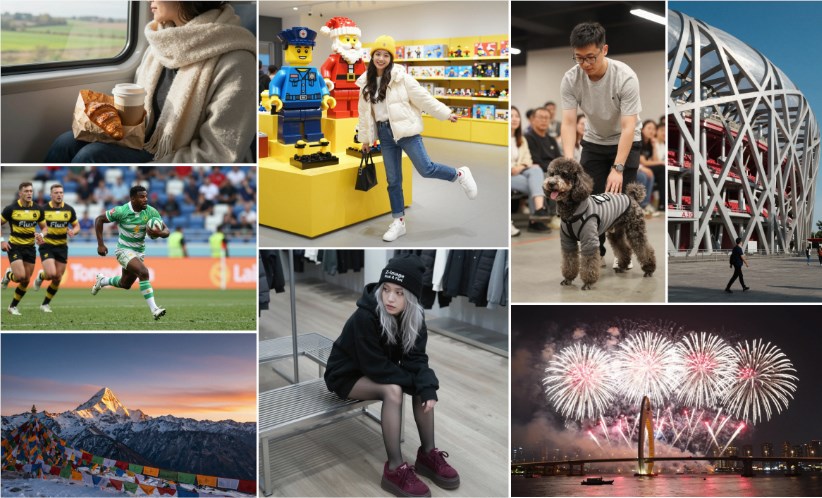

- It understands long, nested Chinese prompts in one pass, automatically corrects contradictions such as "sunlight at night," handles instructions as specific as "a milk tea in the left hand and a mobile screen displaying today's news," and renders Chinese and English text that no longer looks like scribbles;

- Fine details hold up: skin pores, glass reflections, backlit rain and fog, and cinematic depth of field. Z-Image-Turbo ranks among the top open-source models on the Elo human preference leaderboard.
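The VRAM figures above can be sanity-checked with simple arithmetic. The sketch below estimates only the memory needed to hold 6B weights at a few common precisions. The precision choices are assumptions for illustration; the article does not say which one was used, and the text encoder, VAE, and activations are not counted.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


PARAMS = 6e9  # 6B parameters, as stated in the article

# Assumed precisions; the article does not specify the one actually used.
for name, nbytes in [("fp32", 4), ("bf16/fp16", 2), ("int8", 1)]:
    print(f"{name:>9}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

At 2 bytes per parameter the weights alone come to about 12GB, which is in line with the 13GB observed on the RTX 4090. Fitting a 6GB card would presumably need quantization or offloading, though the article does not say which.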

The secret lies in the new S3-DiT architecture: text tokens, visual semantic tokens, and image tokens are concatenated into a single stream, which cuts the parameter count to one-third that of competitors while maximizing inference efficiency. The team also released Z-Image-Edit, which lets users "swap heads and scenes" in existing images using natural language, so community users can start experimenting right away.
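To make the single-stream idea concrete, here is a toy sketch in plain Python. It is not the S3-DiT implementation: the token values, the dimension of 2, and the identity projections are all hypothetical, chosen only to show three token groups being joined into one sequence and passed through one shared self-attention step.

```python
import math


def self_attention(seq):
    """Toy single-head self-attention with identity Q/K/V projections."""
    dim = len(seq[0])
    out = []
    for q in seq:
        # Scaled dot-product score of this token against every token.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in seq]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # stable softmax
        total = sum(weights)
        out.append(
            [sum(w / total * v[i] for w, v in zip(weights, seq)) for i in range(dim)]
        )
    return out


# Hypothetical toy tokens, one short list per modality.
text_tokens = [[1.0, 0.0], [0.5, 0.5]]
semantic_tokens = [[0.0, 1.0]]
image_tokens = [[0.2, 0.8], [0.9, 0.1], [0.4, 0.4]]

# Single stream: one concatenated sequence, one shared attention step.
stream = text_tokens + semantic_tokens + image_tokens
mixed = self_attention(stream)
print(len(stream), len(mixed))
```

Because every token attends to every other token in the same sequence, one set of layer weights serves all three modalities instead of a separate branch per modality. That is one plausible reading of how a single-stream design saves parameters.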

Alibaba has not officially announced whether it will fully open-source the model, but it is already available on ModelScope and Hugging Face. The pull requests have been merged into the diffusers main branch, so it can be set up with a single pip command. Once enterprise API pricing is released, Midjourney and Flux may need to start thinking about price cuts sooner than planned.

Z-Image's emergence is like a starting gun: the image generation field has officially entered the "lightweight and high-quality" era, and compute democratization is no longer just a slogan. After all, who doesn't have a 16GB GPU?

Project address: https://github.com/Tongyi-MAI/Z-Image