How can synthetic data for modern AI models stay fresh and diverse without a single scheduling pipeline becoming a bottleneck? Researchers at Meta AI recently introduced Matrix, a decentralized framework that serializes control and data flows into messages that are processed through distributed queues.

As large language model (LLM) training increasingly relies on synthetic dialogues, tool trajectories, and reasoning chains, existing systems often depend on central controllers or domain-specific setups, which waste GPU resources, increase coordination overhead, and limit data diversity. Matrix instead adopts peer-to-peer agent scheduling on top of a Ray cluster. Compared with traditional methods, it delivers 2 to 15 times higher token throughput on real workloads while maintaining comparable output quality.

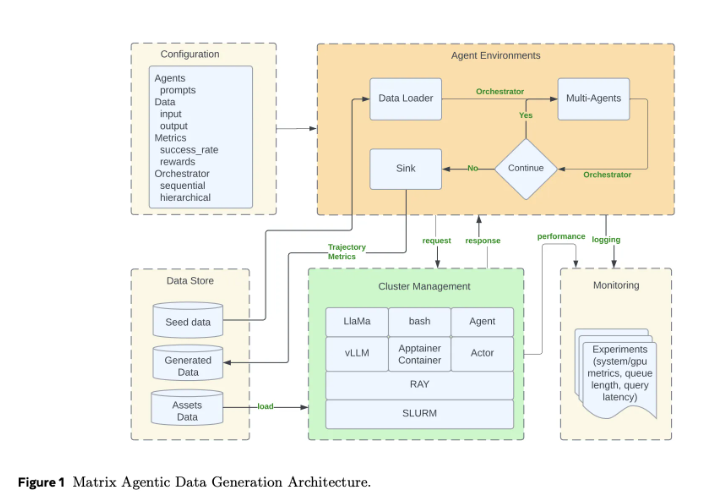

Traditional agent frameworks usually keep workflow state and control logic in a central scheduler, so every agent and tool call has to pass through that controller. This model is easy to understand, but it scales poorly when handling thousands of concurrent synthetic dialogues. Matrix instead serializes both control flow and data flow into a "scheduler" message object that carries the task state. Each agent is a stateless Ray actor: it retrieves a scheduler message from a distributed queue, applies its role-specific logic, and sends the updated state directly to the next agent. Because no task waits on a central controller, this design reduces the idle time caused by varying trajectory lengths and keeps fault handling localized.
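
The message-passing pattern described above can be illustrated with a minimal single-process sketch. It uses only the Python standard library rather than Ray, and every name in it (`Message`, `next_agent`, `make_agent`, `run`, the `student` and `teacher` roles) is hypothetical and chosen for illustration; it is not Matrix's actual API.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    """Hypothetical stand-in for the serialized control + data state."""
    task_id: int
    history: list = field(default_factory=list)
    max_turns: int = 4

    def next_agent(self):
        # Control flow lives in the message: alternate roles until done.
        if len(self.history) >= self.max_turns:
            return None
        return "student" if len(self.history) % 2 == 0 else "teacher"


def make_agent(role):
    # Agents are stateless: everything they need arrives in the message.
    def handle(msg):
        msg.history.append(f"{role}: turn {len(msg.history)}")
        return msg
    return handle


def run(tasks):
    agents = {role: make_agent(role) for role in ("student", "teacher")}
    inboxes = {role: deque() for role in agents}  # one queue per agent role
    finished = []

    def route(msg):
        # Hand the message straight to the next agent's queue.
        target = msg.next_agent()
        if target is None:
            finished.append(msg)
        else:
            inboxes[target].append(msg)

    for msg in tasks:
        route(msg)
    while any(inboxes.values()):
        for role, inbox in inboxes.items():
            if inbox:
                route(agents[role](inbox.popleft()))
    return finished


if __name__ == "__main__":
    for msg in run([Message(0, max_turns=2), Message(1, max_turns=5)]):
        print(msg.task_id, len(msg.history))
```

In this sketch a short task leaves the system as soon as its own message says it is finished, while longer tasks keep circulating, which is the property the article credits with reducing idle time when trajectory lengths vary.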

Matrix runs on a Ray cluster, typically launched via SLURM. Ray provides the distributed agents and queues, while Hydra manages agent roles, scheduler types, and resource configurations. The framework also introduces message offloading: when the conversation history exceeds a size threshold, the large payload is stored in Ray's object store and only its object identifier is kept in the scheduler message, which reduces bandwidth use across the cluster.
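
The offloading idea can be sketched in a few lines. This is an illustration under stated assumptions, not Matrix's implementation: a plain dictionary stands in for Ray's object store (in a real Ray program the analogous calls would be `ray.put` and `ray.get`), and the threshold value, the dictionary-shaped message, and the helper names `put`, `offload`, and `load_history` are all hypothetical.

```python
import itertools

OBJECT_STORE = {}        # stand-in for Ray's object store (assumption)
_ids = itertools.count()
THRESHOLD_CHARS = 200    # hypothetical size threshold


def put(value):
    """Store a value and return a small identifier for it."""
    ref = f"obj-{next(_ids)}"
    OBJECT_STORE[ref] = value
    return ref


def offload(message):
    """Replace a large history with an identifier; leave small ones inline."""
    history = message["history"]
    if sum(len(turn) for turn in history) > THRESHOLD_CHARS:
        return {**message, "history": None, "history_ref": put(history)}
    return message


def load_history(message):
    """Return the history, fetching it from the store if it was offloaded."""
    ref = message.get("history_ref")
    return OBJECT_STORE[ref] if ref is not None else message["history"]


if __name__ == "__main__":
    big = {"task_id": 2, "history": ["x" * 150, "y" * 150]}
    slim = offload(big)
    print(slim["history_ref"], len(load_history(slim)))
```

Only the agent that actually needs the full history calls `load_history`, so the messages moving between queues stay small regardless of how long a conversation grows.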

Three case studies illustrate the gains. In dialogue generation for Collaborative Reasoner, Matrix generates 200 million tokens, compared with 62 million for the traditional approach. In building the NaturalReasoning dataset, Matrix improves throughput by 2.1 times. In the Tau2-Bench tool-use trajectory evaluation, it delivers 15.4 times the throughput. In all three cases, output quality stays comparable to the baseline, so the higher throughput does not come at the cost of data quality.

Paper: https://arxiv.org/pdf/2511.21686

Key points:

🌟 The Matrix framework uses a decentralized design to avoid the bottlenecks of traditional centralized schedulers.

🚀 Matrix delivers 2 to 15 times higher token throughput across multiple case studies.

🔧 The framework fully leverages the distributed features of Ray clusters to achieve efficient synthetic data generation and processing.