Alibaba Tongyi Lab has officially open-sourced Z-Image-Turbo-Fun-Controlnet-Union, a new ControlNet model that significantly expands the image generation ecosystem of its Z-Image series. According to AIbase, which tracked the release exclusively, it further strengthens Z-Image-Turbo's lead in precise control and creative generation, and it quickly sparked discussion among developers and creators worldwide.

The model is now available on Hugging Face under the Apache 2.0 license, which permits commercial use.

Background and Technical Foundation

After the Z-Image series launched at the end of November, it quickly topped the Hugging Face trending list, recording over 500,000 downloads on its first day.

The series is built on a single-stream diffusion architecture and achieves photorealistic rendering, including fine skin texture, hair detail, and well-tuned lighting, with only 6 billion parameters. Z-Image-Turbo, the fast-inference version, generates 1024x1024 images in just 8 sampling steps, with inference times as low as 9 seconds on an RTX 4080. Its prompt understanding also supports rendering mixed Chinese and English text, which significantly speeds up creative work.
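
To make "8 sampling steps" concrete: in a typical diffusion or flow-matching sampler, each step is one forward pass of the network, so latency grows roughly linearly with the step count. If the reported 9 seconds were spent entirely on denoising, that would be at most about 1.1 seconds per step. The sketch below assumes a flow-matching (rectified-flow style) formulation with a plain Euler solver, which the article does not confirm. `euler_sample` and `make_oracle` are hypothetical names, and the "network" is a toy oracle that knows the target, so the result can be checked.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Velocity = Callable[[Vector, float], Vector]


def euler_sample(velocity: Velocity, noise: Vector, num_steps: int = 8) -> Vector:
    """Integrate from t=1 (pure noise) down to t=0 (clean sample) with Euler steps.

    Each step calls `velocity` (standing in for the network) exactly once,
    so cost grows roughly linearly with num_steps.
    """
    # Evenly spaced times 1.0, 0.875, ..., 0.0 when num_steps == 8
    ts = [1.0 - i / num_steps for i in range(num_steps + 1)]
    x = list(noise)
    for t, t_next in zip(ts[:-1], ts[1:]):
        v = velocity(x, t)
        x = [xi + (t_next - t) * vi for xi, vi in zip(x, v)]
    return x


def make_oracle(target: Vector) -> Tuple[Velocity, List[float]]:
    """Hypothetical stand-in for the network: returns the exact velocity of the
    straight path x_t = t * noise + (1 - t) * target and logs each call."""
    calls: List[float] = []

    def velocity(x: Vector, t: float) -> Vector:
        calls.append(t)
        # On the straight path, (x_t - target) / t equals noise - target
        return [(xi - yi) / t for xi, yi in zip(x, target)]

    return velocity, calls


if __name__ == "__main__":
    velocity, calls = make_oracle([0.2, -0.5, 0.9])
    sample = euler_sample(velocity, noise=[1.0, -1.0, 0.3], num_steps=8)
    print(len(calls), [round(v, 6) for v in sample])
```

With an exact velocity the straight path is integrated without error, so the toy recovers the target in 8 calls; a real distilled model only approximates that velocity, which is what few-step "Turbo" training aims to make accurate.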

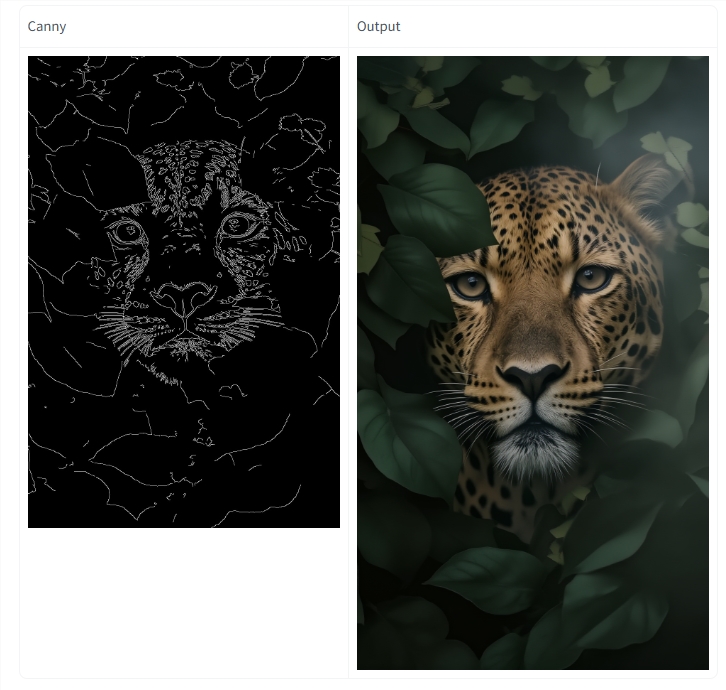

Z-Image-Turbo-Fun-Controlnet-Union is a deep extension of Z-Image-Turbo. It adds a ControlNet structure to six core blocks of the model and supports multiple control conditions, such as Canny edge detection, HED boundary extraction, and depth maps. The model targets complex scenarios such as precise generation of human poses and architectural rendering from line drawings. For now it runs through Python code, and support for workflow tools such as ComfyUI is coming soon.
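
The original ControlNet design keeps the base network frozen, runs a trainable branch on the condition image, and adds that branch's output back into selected blocks through zero-initialized projections, so training starts from the unmodified base model. The toy sketch below illustrates that general idea for a backbone with control injected into six blocks. The block count (30), the block indices, the per-condition strength weights, and all function names are hypothetical; plain vectors stand in for feature maps. It is not the released model's code.

```python
from typing import Dict, List, Sequence

Vector = List[float]

# Hypothetical layout: a 30-block backbone with control injected into six blocks.
NUM_BLOCKS = 30
CONTROL_BLOCKS = (0, 5, 10, 15, 20, 25)


def base_block(h: Vector, idx: int) -> Vector:
    """Stand-in for one frozen block of the base model (toy arithmetic)."""
    return [0.9 * v + 0.01 * idx for v in h]


def control_residual(cond: Vector, out_scale: float) -> Vector:
    """Stand-in for a trainable control block whose output projection starts
    at zero: with out_scale == 0.0 it adds nothing to the base model."""
    return [out_scale * c for c in cond]


def forward(h: Vector,
            conditions: Dict[str, Vector],
            strengths: Dict[str, float],
            out_scale: float,
            control_blocks: Sequence[int] = CONTROL_BLOCKS,
            num_blocks: int = NUM_BLOCKS) -> Vector:
    for idx in range(num_blocks):
        h = base_block(h, idx)
        if idx in control_blocks:
            # Each condition (e.g. "canny", "hed", "depth") contributes a
            # residual weighted by a user-chosen strength; residuals are summed.
            for name, cond in conditions.items():
                weight = strengths.get(name, 1.0)
                residual = control_residual(cond, out_scale)
                h = [hi + weight * ri for hi, ri in zip(h, residual)]
    return h


if __name__ == "__main__":
    x = [1.0, 2.0]
    conds = {"canny": [0.5, -0.5], "depth": [0.2, 0.4]}
    plain = forward(x, {}, {}, out_scale=0.0)
    at_init = forward(x, conds, {"canny": 1.0, "depth": 0.5}, out_scale=0.0)
    trained = forward(x, conds, {"canny": 1.0, "depth": 0.5}, out_scale=0.1)
    print(plain == at_init, plain == trained)  # prints: True False
```

Summing weighted residuals is one simple way to combine several conditions at once; how the released model actually fuses them is not described in the article.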

Core Features and Application Highlights

- Multi-condition Control Fusion: Accepts pose, edge, and depth information jointly, enabling "zero-distortion" image manipulation. Developers can build automated sketch-to-final-product pipelines for e-commerce visual design, film and television visual effects, and game prototyping.

- High Compatibility: Inheriting Z-Image-Turbo's lightweight architecture, it runs with as little as 6GB of VRAM, far below the hardware requirements of traditional ControlNet models. Tests on low-end GPUs report about 250 seconds for 5 steps, so such hardware can run the model, though far from real time.

- Open Source Ecosystem Empowerment: The model provides a 4-bit quantized version (for example, compatible with MFLUX), making it easy to deploy on consumer devices such as Macs. Meanwhile, the Z-Image-Edit variant better understands compound editing instructions while keeping the image consistent.
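
A rough way to see why quantization matters on consumer hardware: weight memory is parameter count times bits per parameter. The sketch below estimates weights-only memory for the 6-billion-parameter backbone at 16-bit and 4-bit precision and shows a generic symmetric per-tensor 4-bit scheme. This is a textbook illustration, not MFLUX's actual quantization format; the function names are hypothetical, and real totals also include activations, quantization scales, the ControlNet branch, and whatever other models (such as a text encoder or VAE) a pipeline typically loads.

```python
from typing import List, Tuple


def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in GiB (ignores activations, scales, and
    any other models loaded alongside the backbone)."""
    return num_params * bits_per_param / 8 / 2**30


def quantize_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Generic symmetric per-tensor 4-bit quantization to integers in [-8, 7]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int4(q: List[int], scale: float) -> List[float]:
    """Map 4-bit integers back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    for bits in (16, 4):
        print(f"{bits}-bit: {weight_memory_gib(6e9, bits):.1f} GiB")
    q, scale = quantize_int4([0.7, -0.3, 0.0, 0.12])
    print(q, [round(w, 3) for w in dequantize_int4(q, scale)])
```

By this estimate, 6 billion parameters need about 11.2 GiB at 16 bits but about 2.8 GiB at 4 bits. The article does not say which precision its 6GB VRAM figure assumes, so this only indicates the order of magnitude involved.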

These features not only lower the barrier to AI image generation but also open professional-level creation to non-professional users. According to community feedback, the model outperforms competitors such as OVIS Image in prompt fidelity when generating advertising materials.

Community Response and Future Outlook

The open-source community has responded enthusiastically to Z-Image-Turbo-Fun-Controlnet-Union, with numerous benchmark tests appearing on Reddit and X, including experiments generating recognizable celebrity faces and K-pop idols. The results show excellent recognizability and naturalness. Developers praised its efficiency, especially its stable output at low CFG Scale values (2-3). According to AIbase's analysis, the release strengthens Alibaba's global competitiveness in open-source AI.
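
For context on the CFG Scale figure: in the standard classifier-free guidance formulation, the sampler runs the model with and without the prompt and extrapolates from the unconditional prediction toward the conditional one by the scale factor. A scale of 1 reproduces the conditional prediction, and larger values push harder toward the prompt. The minimal sketch below shows that formula on plain vectors; it assumes Z-Image-Turbo uses this standard formulation, which the article does not state, and the function name is hypothetical.

```python
from typing import List


def classifier_free_guidance(uncond: List[float], cond: List[float],
                             scale: float) -> List[float]:
    """Standard CFG: start from the unconditional prediction and move toward
    (or past) the prompt-conditioned prediction by `scale`."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond, cond = [0.0, 1.0], [1.0, 0.0]
    for s in (1.0, 2.5, 7.0):
        print(s, classifier_free_guidance(uncond, cond, s))
```

Higher scales extrapolate further past the conditional prediction, which is commonly associated with oversaturated images; a scale of 2-3 keeps that extrapolation modest.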

In the future, it is expected to work in conjunction with the Z-Image-Base version to form a complete image generation-editing-control loop.

Hugging Face: https://huggingface.co/alibaba-pai/Z-Image-Turbo-Fun-Controlnet-Union