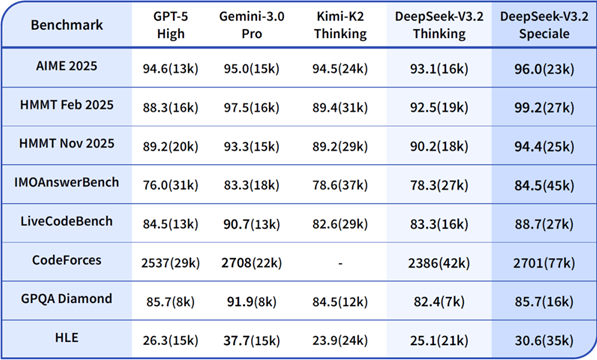

DeepSeek has released V3.2 (Standard Edition) and V3.2-Speciale (Deep Thinking Edition). According to the company's official evaluations:

- V3.2 performs on par with GPT-5 in 128k-context scenarios

- V3.2-Speciale matches Gemini 3 Pro on benchmarks such as MMLU and HumanEval, and scores 83.3% in the IMO 2025 blind test, reaching the gold-medal line

DeepSeek Sparse Attention (DSA) is the core upgrade. By routing tokens in a "directory" style, it reduces the computational complexity of long-text processing from O(n²) to O(n), cuts memory usage by 40%, and improves inference speed by 2.2 times. According to the release, this makes single-card million-token inference possible for the first time in an open-source model.
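To make the idea of sparse attention concrete, here is a minimal sketch in plain Python. It is not DeepSeek's DSA kernel, whose details are not given here. It assumes a simple top-k scheme in which each query attends only to its `top_k` highest-scoring keys, and it ignores causal masking and batching. The function names (`sparse_attention`, `attention_cost`) and the use of a raw dot product as the "indexer" score are illustrative assumptions. Note that this toy selector still scans every key, so only the softmax-and-mix step shrinks from n² to n·k pairs; a production system would need a much cheaper selector to approach linear cost overall.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_attention(queries, keys, values, top_k):
    """Toy top-k sparse attention (hypothetical, not the DSA kernel)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs = []
    for q in queries:
        # Assumed "indexer": a plain dot product used to rank keys.
        index_scores = [dot(q, k) for k in keys]
        ranked = sorted(range(len(keys)),
                        key=lambda j: index_scores[j], reverse=True)
        selected = ranked[:top_k]
        # Softmax and value mixing run over the selected keys only.
        weights = softmax([index_scores[j] * scale for j in selected])
        outputs.append([
            sum(w * values[j][d] for w, j in zip(weights, selected))
            for d in range(dim)
        ])
    return outputs


def attention_cost(n, top_k=None):
    """Number of query-key pairs entering the softmax step."""
    return n * n if top_k is None else n * min(top_k, n)
```

With a fixed `top_k`, `attention_cost` grows linearly in the sequence length `n`, whereas the dense case grows quadratically. Setting `top_k` to the number of keys recovers ordinary dense attention.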

In the post-training phase, the team invested more than 10% of cluster computing power into reinforcement learning, using Group Relative Policy Optimization (GRPO) combined with majority voting, which brings the model close to closed-source competitors on code, math, and tool-calling tasks. V3.2-Speciale removes the "thinking length penalty," encouraging longer chains of reasoning: its average output token count is 32% higher than that of Gemini 3 Pro, while accuracy improves by 4.8 percentage points.
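The text does not spell out how GRPO and majority voting are combined, so the following is only one plausible reading, sketched under stated assumptions: a group of answers is sampled for the same prompt, the most common answer is treated as a pseudo-label, and each sample's reward is normalized against its own group, which is the group-relative step that GRPO uses in place of a learned value function. The function names and the binary reward are hypothetical.

```python
from collections import Counter
import statistics


def majority_vote_rewards(answers):
    """Reward 1.0 for samples that agree with the group's most common answer.

    Assumption: the majority answer stands in for a ground-truth label.
    """
    majority, _ = Counter(answers).most_common(1)[0]
    return [1.0 if a == majority else 0.0 for a in answers]


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group: (r - mean) / (std + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to the same (hypothetical) math prompt.
answers = ["42", "42", "41", "42"]
rewards = majority_vote_rewards(answers)
advantages = group_relative_advantages(rewards)
```

Samples that agree with the majority receive a positive advantage and the dissenting sample a negative one. If every sample gives the same answer, all advantages are zero and the group contributes no gradient signal.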

Both models are now available on GitHub and Hugging Face, with weights licensed under Apache 2.0, which permits commercial use. DeepSeek stated that its next step will be to open-source the long-text DSA kernel and the RL training framework, continuing to turn "closed-source advantages" into community infrastructure. Industry commentators suggest that if subsequent versions keep up this pace of iteration, the open-source community may take the lead in both long text and reasoning by 2026.